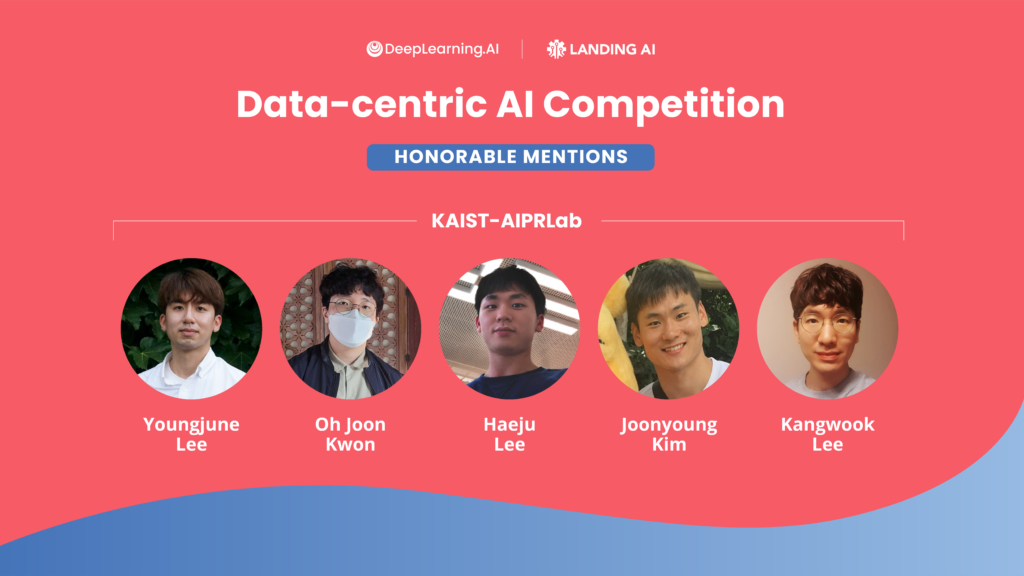

How We Won the First Data-Centric AI Competition: KAIST – AIPRLab

In this blog post, KAIST-AIPRLab, one of the winners of the Data-Centric AI Competition, describes the techniques and strategies that led to its victory. Participants received a fixed model architecture and a dataset of 1,500 handwritten Roman numerals. Their task was to optimize model performance solely by improving the dataset and dividing it into training and validation sets. The dataset size was capped at 10,000 images. You can find more details about the competition here.

# Your personal journey in AI

- Why did you decide to learn AI?

Our team members are interested in various aspects of machine learning, as we come from different undergraduate backgrounds. We are especially interested in the practicality of AI, as we are witnessing drastic changes taking place before our eyes. However, we are also concerned with the double-edged aspects of AI, including explainability, computational efficiency, and social bias. We believe that every practical application should be backed by robust theory, so we decided to delve deeper into AI.

- Why did you decide to join the lab?

We come from a variety of undergraduate backgrounds, such as mathematics, computer science, and electronics engineering, and have diverse interests in different domains of machine learning. Nevertheless, we are all interested in the common idea and theory behind these domains: how machines think and make decisions. Our lab is supervised by Prof. Kee-Eung Kim, who has been working on reinforcement learning or, more generally, on models and algorithms for decision-theoretic problems. As such, joining Prof. Kim’s lab was a natural choice.

# Why did you decide to participate in the competition?

We first heard about the competition from our advisor, Prof. Kee-Eung Kim, who encouraged us to participate. We all found the premise of the competition interesting, since it nicely captures the practical question we face whenever we embark on an AI project: how much data is sufficient, and how do we collect it? We also wanted to see whether we could identify a concrete thesis topic from the challenges we dealt with in the competition.

- What were the challenges, and what have you learned through the competition?

At the beginning of the competition, our advisor introduced us to papers on data-centric methods, such as techniques for data valuation and learning from noisy data. We also searched for other papers in this direction that could be applied to the competition setting. However, we struggled because most of these algorithms were not as effective in practice as we had hoped, a typical theory-practice gap. We also sought advice from members of Samsung Research, who had rich practical experience with AI projects involving scarce and noisy real-world datasets. Through trial and error, we learned that it was important to find a simple yet creative method rather than hoping that the latest method would magically solve the problem.

# The techniques you used

We wanted to take a more fundamental algorithmic approach that could generalize to different data domains. Moreover, we restricted ourselves to the information provided in the competition; that is, we did not use any external data sources, including pretrained models. Our pipeline consists of five steps: 1. Data Valuation, 2. Auto Augmentation, 3. Cleansing with a Contrastively Learned Model, 4. Edge Case Augmentation, and 5. Cleansing.
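
The five steps form a linear pipeline in which each stage takes a labeled dataset and returns a new one. A minimal sketch of that structure follows, assuming a dataset is a list of `(image, label)` pairs. The stage functions are hypothetical placeholders rather than the team's code, and `run_pipeline`, `PIPELINE`, and `MAX_SIZE` are illustrative names. The sketch checks the competition's 10,000-image cap after every stage, since augmentation steps can push the dataset past it.

```python
from typing import Callable, List, Tuple

# A labeled example is an (image, label) pair; the image type is left abstract.
Example = Tuple[object, str]
Dataset = List[Example]
Stage = Callable[[Dataset], Dataset]

MAX_SIZE = 10_000  # competition cap on the total number of images


def run_pipeline(data: Dataset, stages: List[Tuple[str, Stage]]) -> Dataset:
    """Apply each named stage in order, enforcing the size cap after every stage."""
    for name, stage in stages:
        data = stage(data)
        if len(data) > MAX_SIZE:
            raise ValueError(f"stage {name!r} produced {len(data)} images (> {MAX_SIZE})")
    return data


# Hypothetical placeholder stages; a real version would wrap each method.
def data_valuation(d: Dataset) -> Dataset:
    return d  # step 1 would drop points with negative influence


def auto_augment(d: Dataset) -> Dataset:
    return d + d  # step 2 would add augmented copies (here: plain duplicates)


def identity(d: Dataset) -> Dataset:
    return d  # steps 3-5 are left as no-ops in this sketch


PIPELINE: List[Tuple[str, Stage]] = [
    ("data_valuation", data_valuation),
    ("auto_augment", auto_augment),
    ("contrastive_cleansing", identity),
    ("edge_case_augmentation", identity),
    ("final_cleansing", identity),
]
```

With these placeholders, `run_pipeline([(None, "i")] * 1500, PIPELINE)` returns 3,000 examples, whereas a 6,000-image input would raise `ValueError` at the augmentation stage.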

- Data Valuation

We began with the idea that deep learning models are optimized with gradient descent. A commonly used analogy compares this procedure to a hiker walking down a valley guided by milestones along the way. However, the hiker can get lost if the milestones are misleading. Similar problems occur in deep learning, where the model can be trained on misleading examples, so such training data points need to be removed. Our initial experiments with data valuation using reinforcement learning [1] turned out to be ineffective on this extremely noisy dataset.

We can take a peek at how a training data point influences the model’s prediction on a validation data point using influence functions [2]. The basic idea is to “upweight” a particular training point by a small amount and see how this affects the model’s prediction at inference time. We incorporated HyDRA [3], which uses the gradient of the validation loss with respect to the weights of the training points (the hypergradient) to efficiently approximate the local influence of each training point along the model’s optimization trajectory. We then deleted the training data points with negative influence.

However, this is by no means a perfect algorithm for cleansing all noisy points from the dataset, because our validation set was too small. Our experiments showed that the algorithm could remove the majority of noisy data, yet it could still remove semantically meaningful images or fail to remove completely irrelevant images that had been mixed into the training dataset. Hence, we applied additional cleansing operations, but first we proceeded with augmentation.
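
The sketch below illustrates the general idea of scoring training points by their effect on the validation loss along the optimization trajectory. It is not HyDRA: it is a simpler first-order, TracIn-style approximation that, at each full-batch gradient-descent step of a toy logistic-regression model, accumulates the dot product between each training point's loss gradient and the validation-loss gradient. The model, learning rate, step count, and function names are all assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

Example = Tuple[List[float], int]  # (feature vector incl. bias term, label in {0, 1})


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def grad_logloss(w: Sequence[float], x: Sequence[float], y: int) -> List[float]:
    """Gradient of the logistic (cross-entropy) loss of one example w.r.t. w."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
    return [(p - y) * xi for xi in x]


def mean_vec(vectors: List[List[float]]) -> List[float]:
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def influence_scores(train: List[Example], val: List[Example],
                     lr: float = 0.5, steps: int = 200) -> List[float]:
    """First-order, TracIn-style influence of each training point on validation loss.

    Point i moves w by -lr/n * g_i per full-batch step, which changes the
    validation loss by about -lr/n * <g_val, g_i>. We accumulate lr/n * <g_val, g_i>,
    so positive scores mark helpful points and negative scores harmful ones.
    """
    n = len(train)
    w = [0.0] * len(train[0][0])
    scores = [0.0] * n
    for _ in range(steps):
        grads = [grad_logloss(w, x, y) for x, y in train]
        g_val = mean_vec([grad_logloss(w, x, y) for x, y in val])
        for i, g in enumerate(grads):
            scores[i] += lr / n * sum(a * b for a, b in zip(g_val, g))
        # Full-batch gradient-descent step on the mean training loss.
        w = [wi - lr * gi for wi, gi in zip(w, mean_vec(grads))]
    return scores


def drop_negative(train: List[Example], scores: List[float]) -> List[Example]:
    """Keep only training points whose accumulated influence is non-negative."""
    return [ex for ex, s in zip(train, scores) if s >= 0]
```

For example, with `train = [([2.0, 1.0], 1), ([1.5, 1.0], 1), ([-2.0, 1.0], 0), ([-1.5, 1.0], 0), ([1.8, 1.0], 0)]` and `val = [([1.0, 1.0], 1), ([-1.0, 1.0], 0)]` (the second feature is a constant bias), the mislabeled last point is the only one with a negative score, so `drop_negative` removes it.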

- Auto Augmentation

Augmentation is a common technique in computer vision, among other domains, for creating more training samples and building a more robust model. However, finding a suitable set of transforms for augmentation often involves manual labor and is therefore time-consuming. Even though the competition dataset was small and simple enough to find decent augmentation policies by hand, we turned to automatic augmentation to reduce human intervention.

Auto augmentation algorithms search for the best augmentation policies using feedback from validation data. Among the many proposed methods, we used Faster AutoAugment [4], which considerably reduces search time by making the search fully differentiable. To preserve as much information as possible, we applied augmentations to 64 x 64 images. Additionally, we balanced the number of samples in each class to address class imbalance.
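
Faster AutoAugment's differentiable policy search is beyond a short example, but the class-balancing step can be sketched. The following assumes a dataset of `(image, label)` pairs and a hypothetical `augment(image, rng)` callable standing in for whatever learned policy is applied to the 64 x 64 images. Each under-represented class is topped up with augmented copies of randomly chosen originals until it matches the largest class; `balance_classes` and its parameters are illustrative names.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Example = Tuple[object, str]
Augment = Callable[[object, random.Random], object]  # hypothetical augmentation policy

MAX_SIZE = 10_000  # competition cap on the total number of images


def balance_classes(data: List[Example], augment: Augment,
                    per_class: int = 0, seed: int = 0) -> List[Example]:
    """Top up every class with augmented copies until it has `per_class` images.

    If per_class is 0, the target is the size of the largest class. Classes that
    already meet the target are left unchanged (no undersampling).
    """
    rng = random.Random(seed)
    by_class: Dict[str, List[object]] = defaultdict(list)
    for image, label in data:
        by_class[label].append(image)
    target = per_class or max(len(imgs) for imgs in by_class.values())
    out = list(data)
    for label, imgs in by_class.items():
        for _ in range(target - len(imgs)):
            # Augment a randomly chosen original image of the same class.
            out.append((augment(rng.choice(imgs), rng), label))
    if len(out) > MAX_SIZE:
        raise ValueError(f"balanced dataset has {len(out)} images (> {MAX_SIZE})")
    return out
```

Topping up rather than discarding images keeps every original example; the trade-off is that the final size still has to be checked against the 10,000-image cap.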

- Cleansing with a Contrastively Learned Model

Even after cleansing and augmentation, there was still noise in the data. Moreover, augmented images sometimes had wrong semantic labels. For instance, an image of ‘iv’ could be translated so far to the right that the ‘v’ was pushed out of the frame, leaving what looked like ‘i’. To remove such noise and fix mislabeled data points, we used feature representations from a Siamese network [5] that we trained on the dataset obtained so far in a supervised, contrastive fashion.
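
A Siamese network is trained on pairs of examples. A minimal sketch of two ingredients follows, assuming embeddings are plain lists of floats: `make_pairs` samples one same-class and one different-class partner for each example, and `contrastive_loss` is a common margin-based form of the contrastive loss that pulls same-class pairs together and pushes different-class pairs at least `margin` apart. This is not the code of the Keras example cited in [5], whose label convention and details may differ; the function names and default margin are illustrative.

```python
import math
import random
from collections import defaultdict
from typing import List, Sequence, Tuple


def contrastive_loss(emb_a: Sequence[float], emb_b: Sequence[float],
                     same_class: bool, margin: float = 1.0) -> float:
    """Margin-based contrastive loss for one pair of embeddings.

    Same-class pairs are pulled together (loss d^2); different-class pairs are
    pushed apart until they are at least `margin` away (loss max(margin - d, 0)^2).
    """
    d = math.dist(emb_a, emb_b)
    return d ** 2 if same_class else max(margin - d, 0.0) ** 2


def make_pairs(labels: List[str], seed: int = 0) -> List[Tuple[int, int, bool]]:
    """For each index, sample one same-class and one different-class partner.

    Returns (index_a, index_b, same_class) triples. Assumes at least two classes.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    pairs = []
    for i, y in enumerate(labels):
        pairs.append((i, rng.choice(by_class[y]), True))
        other = rng.choice([c for c in by_class if c != y])
        pairs.append((i, rng.choice(by_class[other]), False))
    return pairs
```

During training, the network's embeddings for each pair from `make_pairs` would be fed to `contrastive_loss`, and the mean loss over a batch minimized by gradient descent.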

We projected the learned latent features of the Siamese network onto 2D space via t-SNE [6] and used the visualizations to examine the clusters. From these visualizations, we could identify data points that were obviously mislabeled or meaningless. Using k-nearest-neighbor distances and labels, we fixed a point’s label if it was obviously a labeling error (all of its neighbors sharing the same label, which differed from the point’s own label), or dropped the point if it did not obviously belong to any cluster (its closest neighbor being far away, or its neighbors all lying in one direction from it).
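
The relabel-or-drop rules can be sketched as follows. This is one possible reading of them, with several assumptions: neighbors are found by Euclidean distance on the embedding vectors (rather than on the t-SNE projection), "the closest neighbor being far" is a fixed distance threshold, and "neighbors being only in a certain direction" is measured by the length of the mean unit vector pointing toward the k neighbors. `knn_cleanse` and all threshold values are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def knn_cleanse(embeddings: List[Sequence[float]], labels: List[str], k: int = 5,
                max_nn_dist: float = 1.0,
                max_one_sidedness: float = 0.9) -> Tuple[List[int], List[str]]:
    """Relabel or drop points using their k nearest neighbors in embedding space.

    Returns (kept_indices, labels_of_kept_points). Thresholds are illustrative.
    """
    kept: List[int] = []
    new_labels: List[str] = []
    for i, e in enumerate(embeddings):
        # k nearest neighbors of point i (excluding i itself), by Euclidean distance.
        nn = sorted((math.dist(e, f), j) for j, f in enumerate(embeddings) if j != i)[:k]
        # One-sidedness: length of the mean unit vector from i to its neighbors.
        # Small when neighbors surround i; close to 1 when they share one direction.
        dirs = [[(fj - ei) / d for fj, ei in zip(embeddings[j], e)] for d, j in nn if d > 0]
        one_sided = math.hypot(*[sum(c) / len(dirs) for c in zip(*dirs)]) if dirs else 0.0
        if nn[0][0] > max_nn_dist or one_sided > max_one_sidedness:
            continue  # does not clearly belong to any cluster: drop it
        neighbor_labels = {labels[j] for _, j in nn}
        if len(neighbor_labels) == 1 and labels[i] not in neighbor_labels:
            new_labels.append(next(iter(neighbor_labels)))  # unanimous disagreement: relabel
        else:
            new_labels.append(labels[i])
        kept.append(i)
    return kept, new_labels
```

Decisions are made against the neighbors' original labels, so a single pass does not cascade relabelings; running the function again on its output would let corrections propagate.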

- Edge Case Augmentation

Some edge cases still persisted through these procedures because they made up only a small proportion of the data, so we needed to identify and augment them. We used the representations of a model trained on the data up to this point and, as in the previous step, projected the latent features onto 2D space via t-SNE. We treated data points that obviously belonged to a cluster but lay far from their closest same-class neighbor as edge cases, and augmented these isolated data points in each cluster.
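
Finding and augmenting isolated points can be sketched in the same style. It assumes that the points have already been cleansed (so each belongs to a cluster) and that "far" means above a per-class quantile of nearest-same-class-neighbor distances. `find_edge_cases` returns those indices, and `augment_edge_cases` appends a few augmented copies of each using a hypothetical `augment(image, rng)` callable; the names, the quantile, and the copy count are illustrative.

```python
import math
import random
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple


def find_edge_cases(embeddings: List[Sequence[float]], labels: List[str],
                    quantile: float = 0.9) -> List[int]:
    """Indices whose nearest same-class neighbor is unusually far away.

    "Unusually far" means above the given quantile of that class's
    nearest-same-class-neighbor distances (an illustrative choice).
    """
    nn_dist = []
    for i, e in enumerate(embeddings):
        same = [math.dist(e, f) for j, f in enumerate(embeddings)
                if j != i and labels[j] == labels[i]]
        nn_dist.append(min(same) if same else math.inf)
    per_class: Dict[str, List[float]] = defaultdict(list)
    for d, y in zip(nn_dist, labels):
        if math.isfinite(d):
            per_class[y].append(d)
    thresholds = {y: sorted(ds)[int(quantile * (len(ds) - 1))] for y, ds in per_class.items()}
    return [i for i, (d, y) in enumerate(zip(nn_dist, labels))
            if y in thresholds and math.isfinite(d) and d > thresholds[y]]


def augment_edge_cases(data: List[Tuple[object, str]], edge_idx: List[int],
                       augment: Callable[[object, random.Random], object],
                       copies: int = 3, seed: int = 0) -> List[Tuple[object, str]]:
    """Append `copies` augmented versions of each edge-case example."""
    rng = random.Random(seed)
    extra = [(augment(data[i][0], rng), data[i][1])
             for i in edge_idx for _ in range(copies)]
    return list(data) + extra
```

Using a per-class quantile rather than one global threshold keeps a tight class from hiding the isolated points of a more spread-out one.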

- Cleansing

Finally, after the edge case augmentation, we cleansed the dataset once more in the same way as in step 3. With this pipeline, we achieved an accuracy of 0.84711 on the test dataset. We will continue to refine and simplify the approach so that it works in domains where external data or pretrained models are not readily available.

References

[1] Data Valuation Using Reinforcement Learning. ICML, 2020.

[2] Understanding Black-box Predictions via Influence Functions. ICML, 2017.

[3] HyDRA: Hypergradient Data Relevance Analysis for Interpreting Deep Neural Networks. AAAI, 2021.

[4] Faster AutoAugment: Learning Augmentation Strategies Using Backpropagation. ECCV, 2020.

[5] We modified the Keras example at https://keras.io/examples/vision/siamese_contrastive/

[6] Visualizing Data Using t-SNE. Journal of Machine Learning Research, 2008.

# Your advice for other learners

Because each team member had a different perspective, we were able to come up with different ideas while working together. These diverse approaches were the driving force behind the development of our method and yielded good results. We hope that you will always embrace the diverse perspectives around you and share your opinions with others.

---

If you have any questions about our method or anything else, please contact Youngjune Lee (yjlee511@gmail.com) or Oh Joon Kwon (ojkwon@ai.kaist.ac.kr).