Working AI: In the Lab with NLP PhD Student Abigail See

How did you get started in AI?

I grew up in Cambridge, UK and was very interested in maths when I was a kid – I loved doing maths olympiads – and eventually ended up studying pure mathematics at Cambridge University for my undergrad degree. Though maths was interesting, I wanted to work on something more connected to the real world – this is what drew me to CS. Fortunately, Cambridge (sometimes known as “Silicon Fen”) also has a great tech scene, including a Microsoft Research branch, so I started branching into CS through internships.

I started Stanford’s CS PhD program in 2015 with only the vaguest notion of what I wanted to do. But once I was here, it quickly became apparent that AI, in particular deep learning, was the most exciting thing to study. I chose natural language processing (NLP) because I have a special interest in communication; it’s a vital skill but difficult even for humans. So making computers good communicators is important and challenging.

What are you currently working on?

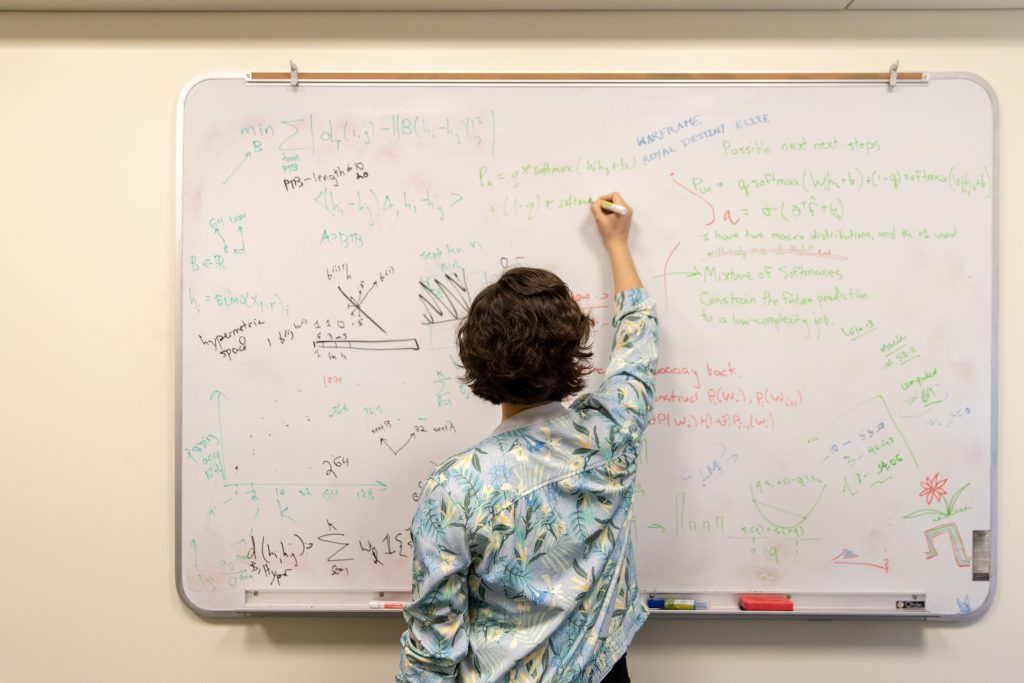

My research focuses on developing better methods for natural language generation using deep learning. The neural methods that have been successful for tasks like machine translation fail for more open-ended tasks like storytelling and chit-chat dialogue; this is where I aim to make progress. Recently I’ve been working on controlling chatbots to be better conversationalists, getting neural language models to think further ahead, and better ways to generate coherent stories.

Over the last year, I’ve worked on teaching as much as research. With my advisor Chris Manning, I’m the co-instructor of CS224n, Stanford’s flagship class on NLP and deep learning. Teaching the class is a huge challenge: the field is evolving even as we teach it, so each year we have to renew a large proportion of the syllabus and materials to reflect current best practices. For example, this year we replaced the content we developed in 2017 with all-new assignments and lectures that reflect the state of NLP and deep learning in 2019. All this must be done at scale, catering not only to our 450 Stanford students but also to a large international audience of interested students.

Take us through your typical workday.

Most days I have some kind of meeting with research collaborators, where we’ll discuss ideas and results, and strategize what we should do next. I much prefer working with other people to working alone; research can be a struggle, and collaborators keep you going. I also try to attend seminars and reading groups to make sure I’m exposing my mind to new ideas.

If I’m preparing to give an upcoming CS224n lecture, I’ll spend as much time as possible researching the content, preparing slides, and practicing. Though lecturing is highly visible, it’s only the tip of the iceberg of the work that goes into the class. We have an amazing team of 20 TAs who work on everything from assignment development to subtitling the lecture videos. Overseeing this team is my primary job right now – it feels a lot like plate-spinning, as there’s always something that needs my attention.

To de-stress, I try to do something creative a few times a week – currently, I’m in a dance team, and I’m learning jazz piano.

What tech stack do you use?

As a mathematician, my tech stack used to be pen and paper. These days, I like to use:

- Atom for coding: I prefer a GUI-based text editor – I might never learn Vim, and I’m OK with that! Aside from coding, I write everything in my life (reading notes, to-do lists, paper outlines, etc.) as hierarchically indented lists, usually in Atom.

- PyTorch for deep learning: Like a lot of NLP researchers, I switched from TensorFlow to PyTorch about two years ago because PyTorch makes prototyping and debugging easier. However, I still use and love TensorBoard for visualization.

- Overleaf for writing LaTeX documents: LaTeX is difficult enough without having to worry about package management and version control! I love that Overleaf makes these things easier.

- BetterTouchTool: One thing I can’t live without is snap-to-left / snap-to-right keyboard shortcuts for Mac.

What did you do before working in AI and how does it factor into your work now?

Shifting from pure maths to deep learning is an odd transition – from something so rigorous to something so empirical. I appreciate my pure maths training because it enables me to wade through equations and proofs (if not easily, then at least without fear).

Outside of science, I’ve always had an interest in art, literature and film, and how they relate to wider society. These interests are reflected by my research into more creative NLP tasks like conversational language and storytelling. I also try to stay mindful of AI’s wider role in society; for example, last year I ran AI Salon, a big-picture discussion series on AI.

How do you keep learning? How do you keep up on recent AI trends?

I remind myself to talk to people. The best thing about working in the Stanford NLP group is that I get to be surrounded by amazing people. Informal conversations are sometimes quicker and more educational than reading lots of papers alone.

Secondly, I try to be unafraid to ask (stupid) questions. This removes roadblocks much more quickly, and as a bonus, I hope I’m helping to create a work environment in which everyone feels more comfortable asking questions.

I’ve also found that teaching is a great way to keep learning. Teaching CS224n has required me to firm up my understanding of various topics to a much higher standard than I had previously.

To keep up with the latest AI news, I use Twitter. I follow lots of NLP professors such as Kyunghyun Cho, Mark Riedl, and Emily Bender, plus people who provide interesting meta-commentary on the field such as Miles Brundage, Sebastian Ruder, Stephen Merity, and Zachary Lipton. I also follow people who are innovating in accessible CS education, such as Rachel Thomas, Jeremy Howard, and Cynthia Lee, and those working on ethics in AI, such as Kate Crawford, Joy Buolamwini, and Timnit Gebru.

What AI research are you most excited about?

I’m excited about research that aims to get discrete structure into neural networks (which by contrast generally have more continuous representations). This is an important development for enabling neural networks to do things like reasoning. Another exciting trend is the development of better task-independent pre-training (such as ELMo and BERT) for NLP deep learning. I hope this will make it easier to build systems for difficult or niche NLP tasks without reinventing the wheel every time.

What advice do you have for people trying to break into AI?

My first piece of advice is that anyone can learn AI. There are some people who say that to work in AI, you must have a degree in a particular subject, have attended a particular university, or have a certain amount of coding experience. I think this is an unnecessarily narrow mindset; there are many paths to AI. For example, when I started my PhD, I barely knew how to code. AI is a rapidly evolving field with applications everywhere. People from all disciplines and all backgrounds can and should contribute to AI; we need them to make AI the best it can be.

My second piece of advice is that learning AI isn’t one-size-fits-all. Some people want a more theoretical understanding, and others want more practical knowledge. I’m glad to see that there are increasingly many high-quality resources out there for learning AI, many of them free. Check them out and figure out how to make AI relevant to you.

Abigail See is a PhD student in the Stanford NLP Group. You can follow her on Twitter at @abigail_e_see.