Dear friends,

In the last couple of days, Google announced a doubling of Gemini 1.5 Pro's input context window from 1 million to 2 million tokens, and OpenAI released GPT-4o, which generates tokens 2x faster and 50% cheaper than GPT-4 Turbo and natively accepts and generates multimodal tokens. I view these developments as the latest in an 18-month trend. Given the improvements we've seen, best practices for developers have changed as well.

Since the launch of ChatGPT in November 2022, with key milestones that include the releases of GPT-4, Gemini 1.5 Pro, Claude 3 Opus, and Llama 3-70B, many model providers have improved their capabilities in two important ways: (i) reasoning, which allows LLMs to think through complex concepts and follow complex instructions; and (ii) longer input context windows.

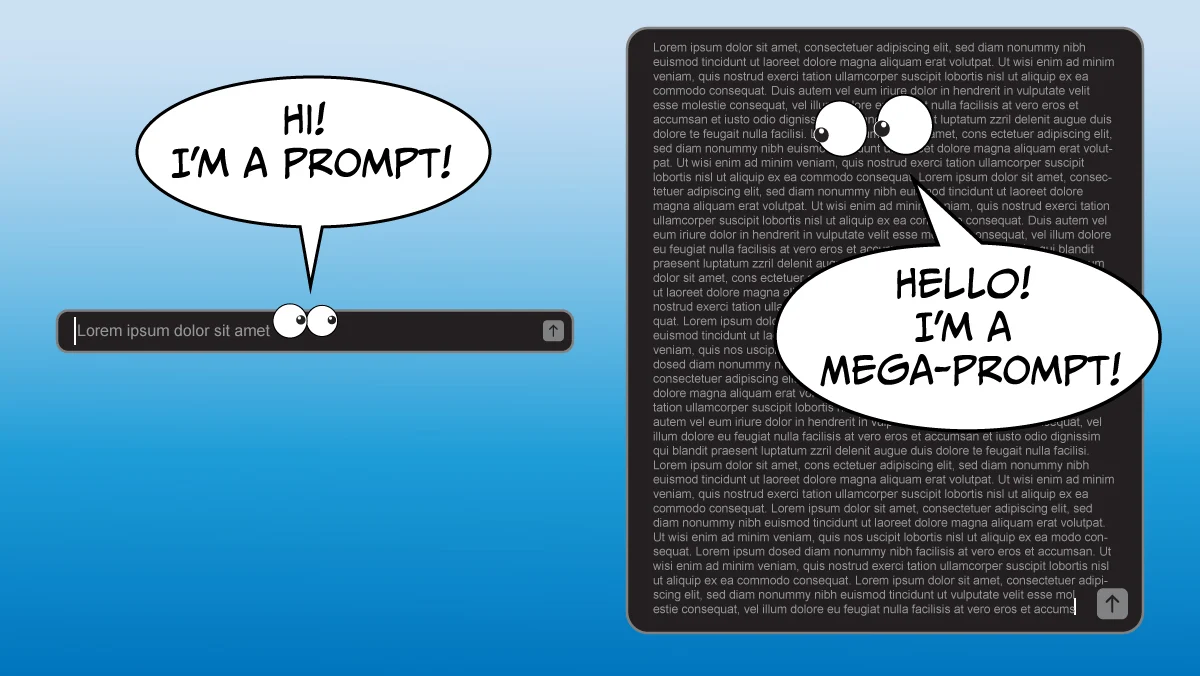

The reasoning capability of GPT-4 and other advanced models makes them quite good at interpreting complex prompts with detailed instructions. Many people are used to dashing off a quick, 1- to 2-sentence query to an LLM. In contrast, when building applications, I see sophisticated teams frequently writing prompts that might be 1 to 2 pages long (my teams call them “mega-prompts”) that provide complex instructions to specify in detail how we’d like an LLM to perform a task. I still see teams not going far enough in terms of writing detailed instructions. For an example of a moderately lengthy prompt, check out Claude 3’s system prompt. It’s detailed and gives clear guidance on how Claude should behave.

This is a very different style of prompting than we typically use with LLMs’ web user interfaces, where we might dash off a quick query and, if the response is unsatisfactory, clarify what we want through repeated conversational turns with the chatbot.

Further, the increasing length of input context windows has added another technique to the developer’s toolkit. GPT-3 kicked off a lot of research on few-shot in-context learning. For example, if you’re using an LLM for text classification, you might give it a handful (say, 1 to 5) of text snippets and their class labels, so that it can use those examples to generalize to additional texts. However, with longer input context windows (GPT-4o accepts 128,000 input tokens, Claude 3 Opus 200,000 tokens, and Gemini 1.5 Pro 1 million tokens, with 2 million just announced in a limited preview), LLMs aren’t limited to a handful of examples. With many-shot learning, developers can give dozens, even hundreds, of examples in the prompt, and this works better than few-shot learning.
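To make the classification example concrete, here is a minimal sketch of how a few-shot or many-shot prompt might be assembled from labeled examples. The function name `build_many_shot_prompt`, the "Text:/Label:" layout, and the sample reviews are illustrative assumptions, not a prescribed format; the same code covers the many-shot case simply by passing a longer list of examples.

```python
from typing import List, Tuple


def build_many_shot_prompt(
    examples: List[Tuple[str, str]],
    query: str,
    labels: List[str],
) -> str:
    """Assemble a classification prompt from labeled examples plus one unlabeled query."""
    lines = [
        "Classify each text into one of these labels: " + ", ".join(labels) + ".",
        "",
    ]
    # Each (text, label) pair becomes one in-context demonstration.
    for text, label in examples:
        lines.append(f"Text: {text}")
        lines.append(f"Label: {label}")
        lines.append("")
    # The query goes last, with the label left blank for the model to fill in.
    lines.append(f"Text: {query}")
    lines.append("Label:")
    return "\n".join(lines)


# Hypothetical data; with a long context window this list could hold hundreds of pairs.
examples = [
    ("The battery died after two days.", "negative"),
    ("Setup took thirty seconds and it just works.", "positive"),
    ("It arrived on Tuesday.", "neutral"),
]
prompt = build_many_shot_prompt(
    examples,
    "The screen cracked in my pocket.",
    ["positive", "negative", "neutral"],
)
print(prompt)
```

The resulting string would then be sent to whichever LLM API you use; that call is left out here because it depends on the provider.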

When building complex workflows, I see developers getting good results with this process:

- Write a quick, simple prompt and see how it does.

- Based on where the output falls short, flesh out the prompt iteratively. This often leads to a longer, more detailed prompt, perhaps even a mega-prompt.

- If that’s still insufficient, consider few-shot or many-shot learning (if applicable) or, less frequently, fine-tuning.

- If that still doesn’t yield the results you need, break down the task into subtasks and apply an agentic workflow.
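The prompt-level steps of this process could be sketched as a loop that tries progressively heavier prompting strategies and stops at the first one that clears a quality bar on a small evaluation set. Everything here is a hypothetical illustration: `first_sufficient_strategy`, the exact-match accuracy check, the 0.9 threshold, and the `fake_llm` stub are assumptions, and fine-tuning and agentic workflows are deliberately left out since they involve more than changing the prompt.

```python
from typing import Callable, List, Optional, Tuple

PromptBuilder = Callable[[str], str]


def first_sufficient_strategy(
    strategies: List[Tuple[str, PromptBuilder]],
    eval_inputs: List[str],
    expected: List[str],
    call_llm: Callable[[str], str],
    threshold: float = 0.9,
) -> Optional[str]:
    """Return the name of the first strategy whose accuracy meets the threshold."""
    if not eval_inputs or len(eval_inputs) != len(expected):
        raise ValueError("eval_inputs and expected must be non-empty and the same length")
    # Strategies are ordered from simplest to most elaborate.
    for name, build_prompt in strategies:
        correct = sum(
            call_llm(build_prompt(x)).strip() == y
            for x, y in zip(eval_inputs, expected)
        )
        if correct / len(eval_inputs) >= threshold:
            return name
    # Nothing was good enough: time to consider fine-tuning or an agentic workflow.
    return None


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call: it only "succeeds" when the prompt includes examples.
    return "positive" if "Examples:" in prompt else "unsure"


strategies = [
    ("simple prompt", lambda x: f"Classify: {x}"),
    ("detailed prompt", lambda x: f"You are a careful reviewer. Classify the sentiment of: {x}"),
    ("few-shot prompt", lambda x: f"Examples:\nText: Great!\nLabel: positive\n\nText: {x}\nLabel:"),
]
chosen = first_sufficient_strategy(
    strategies, ["Love it", "Works well"], ["positive", "positive"], fake_llm
)
print(chosen)
```

In practice the evaluation would rarely be exact-match on a two-item set; the point of the sketch is only the ordering, which is to try the cheap option first and escalate when the output falls short.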

I hope a process like this will help you build applications more easily. If you’re interested in taking a deeper dive into prompting strategies, I recommend the Medprompt paper, which lays out a complex set of prompting strategies that can lead to very good results.

Keep learning!

Andrew

P.S. Two new short courses:

- “Multi AI Agent Systems with crewAI” taught by crewAI Founder and CEO João Moura: Learn to take a complex task and break it into subtasks for a team of specialized agents. You’ll learn how to design agent roles, goals, and tool sets, and decide how the agents collaborate (such as which agents can delegate to other agents). You'll see how a multi-agent system can carry out research, write an article, perform financial analysis, or plan an event. Architecting multi-agent systems requires a new mode of thinking that's more like managing a team than chatting with LLMs. Sign up here!

- “Building Multimodal Search and RAG” taught by Weaviate's Sebastian Witalec: In this course, you'll create RAG systems that reason over contextual information across text, images and video. You will learn how to train multimodal embedding models to map similar data to nearby vectors, so as to carry out semantic search across multiple modalities, and learn about visual instruction tuning to add image capabilities to large language models. Sign up here!