Dear friends,

I think AI agent workflows will drive massive AI progress this year — perhaps even more than the next generation of foundation models. This is an important trend, and I urge everyone who works in AI to pay attention to it.

Today, we mostly use LLMs in zero-shot mode, prompting a model to generate final output token by token without revising its work. This is akin to asking someone to compose an essay from start to finish, typing straight through with no backspacing allowed, and expecting a high-quality result. Despite the difficulty, LLMs do amazingly well at this task!

With an agent workflow, however, we can ask the LLM to iterate over a document many times. For example, it might take a sequence of steps such as:

- Plan an outline.

- Decide what, if any, web searches are needed to gather more information.

- Write a first draft.

- Read over the first draft to spot unjustified arguments or extraneous information.

- Revise the draft taking into account any weaknesses spotted.

- And so on.

Most human writers need this kind of iterative process to produce good text. With AI, such an iterative workflow likewise yields much better results than writing in a single pass.
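To make the loop concrete, here is a minimal Python sketch of the plan, draft, critique, and revise steps listed above. It is an illustration under assumptions, not a reference implementation: `write_with_iteration`, `ScriptedLLM`, the prompt wording, and the convention that the model replies `OK` when it finds no weaknesses are all hypothetical, and the web search step is left out for brevity. The `llm` argument stands for any function that maps a prompt to text.

```python
from typing import Callable, List

# Assumption: an "LLM" here is any callable that maps a prompt to text.
LLM = Callable[[str], str]


def write_with_iteration(topic: str, llm: LLM, max_rounds: int = 3) -> str:
    """Plan an outline, write a draft, then critique and revise it.

    Stops early when the critique is exactly "OK" (a made-up convention),
    or after max_rounds critique passes.
    """
    outline = llm(f"Plan an outline for an essay on: {topic}")
    draft = llm(f"Write a first draft following this outline:\n{outline}")
    for _ in range(max_rounds):
        critique = llm(
            "List unjustified arguments or extraneous information in this "
            f"draft, or reply exactly OK if there are none:\n{draft}"
        )
        if critique.strip() == "OK":
            break
        draft = llm(
            "Revise the draft to address the critique.\n"
            f"Critique:\n{critique}\nDraft:\n{draft}"
        )
    return draft


class ScriptedLLM:
    """Stand-in for a real model: replays canned replies, records prompts."""

    def __init__(self, replies: List[str]) -> None:
        self.replies = list(replies)
        self.prompts: List[str] = []

    def __call__(self, prompt: str) -> str:
        self.prompts.append(prompt)
        return self.replies.pop(0)


if __name__ == "__main__":
    demo = ScriptedLLM(
        ["outline", "draft v1", "claim X lacks support", "draft v2", "OK"]
    )
    print(write_with_iteration("AI agents", demo))  # prints "draft v2"
```

In practice you would swap `ScriptedLLM` for a call to whichever model you use. The `max_rounds` cap is a design choice that keeps the loop from running forever if the critique never comes back clean.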

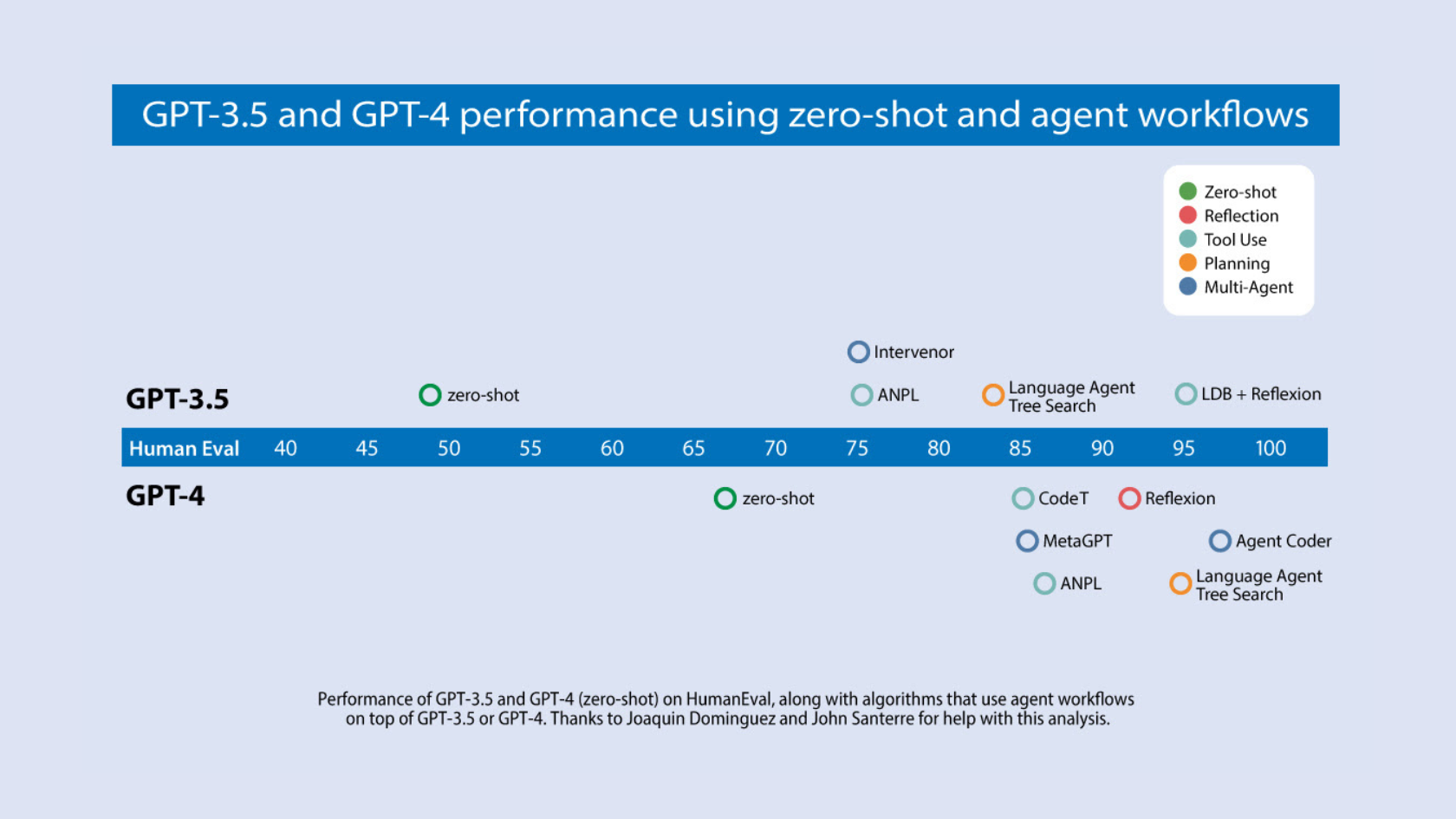

Devin’s splashy demo recently received a lot of social media buzz. My team has been closely following the evolution of AI that writes code. We analyzed results from a number of research teams, focusing on an algorithm’s ability to do well on the widely used HumanEval coding benchmark. You can see our findings in the diagram above.

GPT-3.5 (zero shot) is 48.1% correct. GPT-4 (zero shot) does better at 67.0%. However, the 18.9-point improvement from GPT-3.5 to GPT-4 is dwarfed by the gain from incorporating an iterative agent workflow. Indeed, wrapped in an agent loop, GPT-3.5 achieves up to 95.1%, a gain of up to 47.0 points over its zero-shot score.

Open source agent tools and the academic literature on agents are proliferating, making this an exciting time but also a confusing one. To help put this work into perspective, I’d like to share a framework for categorizing design patterns for building agents. My team at AI Fund is successfully using these patterns in many applications, and I hope you find them useful.

- Reflection: The LLM examines its own work to come up with ways to improve it.

- Tool Use: The LLM is given tools such as web search, code execution, or any other function to help it gather information, take action, or process data.

- Planning: The LLM comes up with, and executes, a multistep plan to achieve a goal (for example, writing an outline for an essay, then doing online research, then writing a draft, and so on).

- Multi-agent collaboration: Multiple AI agents work together, splitting up tasks and discussing and debating ideas, to come up with better solutions than a single agent would.
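As one illustration of these patterns, here is a rough Python sketch of Tool Use. Everything in it is an assumption made for the example: the `run_with_tools` function, the `CALL <tool> <argument>` reply convention, and the toy `add` tool are hypothetical and do not reflect any particular library's API. The `llm` argument is any function that maps the running transcript to the model's next reply.

```python
from typing import Callable, Dict

# Assumptions: a tool maps a string argument to a string result, and the
# model requests a tool by replying "CALL <tool> <argument>" (made up here).
Tool = Callable[[str], str]
LLM = Callable[[str], str]


def run_with_tools(
    question: str, llm: LLM, tools: Dict[str, Tool], max_steps: int = 5
) -> str:
    """Let the model call tools until it replies with a final answer."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = llm(transcript)
        if not reply.startswith("CALL "):
            return reply  # anything else is treated as the final answer
        name, _, arg = reply[len("CALL "):].partition(" ")
        tool = tools.get(name)
        result = tool(arg) if tool else f"unknown tool {name!r}"
        # Feed the tool result back so the next reply can use it.
        transcript += f"\n{reply}\nResult: {result}"
    return "No answer within the step limit."


def add(arg: str) -> str:
    """Toy tool: sum whitespace-separated integers."""
    return str(sum(int(x) for x in arg.split()))


if __name__ == "__main__":
    # Canned replies stand in for a real model.
    replies = iter(["CALL add 2 3", "The sum is 5"])
    print(run_with_tools("What is 2 + 3?", lambda _: next(replies), {"add": add}))
    # prints "The sum is 5"
```

The same loop shape extends to web search or code execution by registering more entries in `tools`.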

Next week, I’ll elaborate on these design patterns and offer suggested readings for each.

Keep learning!

Andrew

Read "Agentic Design Patterns Part 2: Reflection"

Read "Agentic Design Patterns Part 3, Tool Use"

Read "Agentic Design Patterns Part 4: Planning"

Read "Agentic Design Patterns Part 5: Multi-Agent Collaboration"