Dear friends,

The population of Earth officially reached 8 billion this week. Hooray! It’s hard to imagine what so many people are up to. While I hope that humanity can learn how to leave only gentle footprints on the planet, I’m excited about the creativity and inventiveness that a growing human population can bring.

One measure of human progress is the dwindling percentage of people involved in agriculture. If a smaller fraction of the population can generate enough calories to feed everyone, more people will have time to build houses, care for the sick, create art, invent new technologies, and do other things that enrich human life.

Today, roughly 1.5 percent of U.S. jobs are in farming, which enables most of us here to pursue other tasks. Still, a lot of people are involved in various forms of routine, repetitive work. Just as the agricultural workforce fell over centuries from a majority of the population to a tiny minority, AI and automation can free up more people from repetitive work.

This is important because we need lots of people to work on the hard tasks ahead of us. For instance, deep learning could not have reached its current state without a large community building on one another’s work and pushing ideas forward. Building applications that will improve human lives requires even more people. Semiconductors are another example: Building a modern chip requires clever effort by many thousands of people, and achieving the breakthroughs that increase processing power and efficiency as Moore’s Law fades will take even more. I’d like to see a lot more people pushing science and technology forward to tackle problems in energy, health care, justice, climate change, and artificial general intelligence.

I love humanity. We must do better to minimize our environmental impact, but I’m happy that so many of us are here: more friends to make, more people to collaborate with, and more of us to build a richer society that benefits everyone!

Keep learning!

Andrew

DeepLearning.AI Exclusive

A Complete Guide to NLP

Recent advances in natural language processing (NLP) are driving huge growth in AI research, applications, and investment. Check out our new guide to this high-impact, fast-changing technology.

News

Does Price Optimization Hike Rents?

An algorithm that’s widely used to price property rentals may be helping to drive up rents in the United States.

What’s new: YieldStar, a price-prediction service offered by Texas-based analytics company RealPage, suggests rental rates that are often higher than the market average, ProPublica reported. Critics believe the software stifles competition, inflating prices beyond what many renters can afford and adding to an ongoing shortage of affordable housing.

How it works: The algorithm analyzes leases for over 13 million units across the U.S. to calculate prices for 20 million rental units daily. Five of the 10 largest property management firms in the U.S. use it. Former RealPage employees told the authors that property managers adopt around 90 percent of its suggested prices.

- The lease data, which is gathered from YieldStar customers, includes current rental price (which may differ from the rate that’s advertised publicly), number of bedrooms, number of units in a building, and number of nearby units likely to go on the market in the near future.

- The company advertised that YieldStar could help clients set prices between 3 and 7 percent higher than the market. It removed the information following the report by ProPublica.

- The cost to rent a one-bedroom apartment in a Seattle building with prices set by YieldStar increased 33 percent over one year, while the price of a nearby studio where rents were set manually increased 3.9 percent in the same time period.

Behind the news: Automated pricing has had mixed results in real estate. Offerpad, Opendoor, and Redfin use algorithms to estimate a home’s value, and their systems account for around 1 percent of U.S. sales. Zillow shuttered a similar program last year after it contributed to over $600 million in losses.

Yes, but: Experts in real estate and antitrust law say that RealPage’s products enable rival property managers to coordinate pricing, a potential violation of U.S. antitrust law. In addition to the pricing algorithm, the company hosts user groups where property managers share feedback.

- RealPage, responding to the report, asserted that it does not violate antitrust laws because its data is anonymized and aggregated.

- RealPage supporters told ProPublica that a shortage of housing is the real driver behind rising rents.

Why it matters: Advertised rental rates rose 17 percent between March 2021 and March 2022. Several factors contributed to the increase, but automated pricing tools like YieldStar could diminish tenants’ power to negotiate lower rents.

We’re thinking: YieldStar’s role in rising prices is unclear, but any automated system that has potential to manipulate markets at a large scale warrants regulatory oversight. There is precedent: In the 1990s, the U.S. Justice Department forced airlines to change a shared pricing algorithm after finding that they had overcharged travelers by $1 billion. The black-box nature of AI systems means that regulators will need new tools to oversee and audit potential AI-driven coordination of prices.

AI Hasn’t Been So Bad for Jobs

Worries that automation is stealing jobs may have been greatly exaggerated.

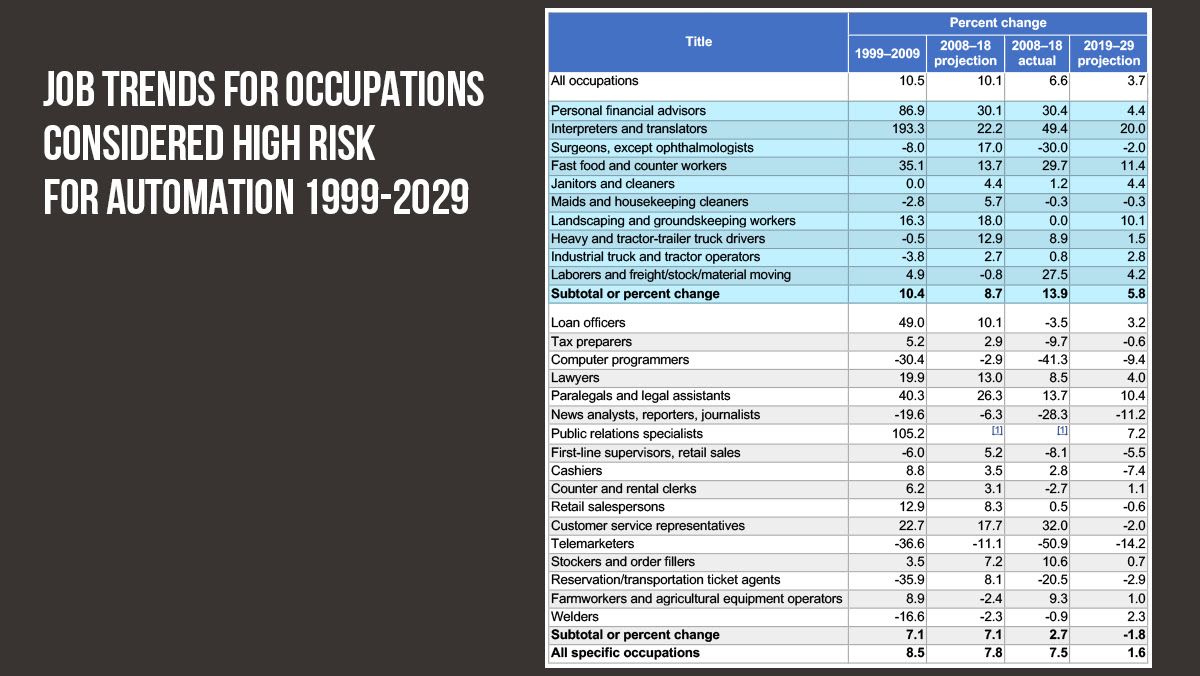

What’s new: A U.S. government report found that employment has increased in many occupations that may be threatened by automation.

Projected versus actual growth: Bureau of Labor Statistics sociologist Michael J. Handel identified 11 occupations at risk from AI. He calculated changes in employment between 2008 and 2018.

- The 11 professions grew by 13.9 percent, on average, during those years.

- Among the largest gains: Jobs for interpreters and translators grew 49.4 percent, positions for personal financial advisors increased 30.4 percent, fast food and counter work expanded 29.7 percent, and manual labor and freight jobs grew 27.5 percent.

- Only two of the 11 professions declined. Maids and housekeepers edged downward 0.2 percent, while surgeons outside of ophthalmology fell by 30 percent. Roomba and da Vinci notwithstanding, automation doesn’t seem to be implicated in those declines.

Looking forward: Handel’s 13.9 percent estimate is reasonably close to the 8.7 percent rate projected by the Bureau of Labor Statistics at the beginning of that period. The agency’s projection for 2019 to 2029 expects the same occupations to grow by a more leisurely 5.8 percent on average. Handel attributes most of the slowdown to an aging population.
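To compare these cumulative figures on a per-year basis, the sketch below converts each one into a compound annual rate. It assumes each period spans 10 years and that growth compounds evenly, neither of which the report states; the function and variable names are illustrative only.

```python
def annualized_rate(total_growth_pct, years):
    """Convert cumulative percentage growth into a compound annual rate (percent)."""
    return ((1 + total_growth_pct / 100) ** (1 / years) - 1) * 100

# Cumulative figures quoted above; the 10-year span is an assumption.
actual_2008_2018 = annualized_rate(13.9, 10)     # measured growth
projected_2008_2018 = annualized_rate(8.7, 10)   # earlier BLS projection
projected_2019_2029 = annualized_rate(5.8, 10)   # current BLS projection

for label, rate in [
    ("actual 2008-2018", actual_2008_2018),
    ("projected 2008-2018", projected_2008_2018),
    ("projected 2019-2029", projected_2019_2029),
]:
    print(f"{label}: {rate:.2f} percent per year")
```

Under these assumptions, the three figures work out to roughly 1.3, 0.8, and 0.6 percent per year, respectively.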

Yes, but: The report cites occupations that suffered losses between 2008 and 2018 due, in part, to technologies other than AI: tax preparer (down by 9.7 percent), ticket agent (20.5 percent), and journalist (28.3 percent). And then there are telemarketers, who suffered a 50 percent decline in jobs as regulators clamped down on nuisance calls and — yes — now face challenges from AI systems.

Behind the news: This study joins previous research that runs counter to fears that robots will steal human jobs.

- Studies of the use of industrial machinery and employment rates in Finland, Japan, France, and the UK found that increased automation is associated with more jobs and higher productivity in industries with high rates of automation.

- Unemployment rates in most of the 38 countries that make up the Organization for Economic Co-Operation and Development were lower in April 2022 than in February 2020, before the spread of Covid-19. Some economists had fretted that the pandemic would drive a wave of job-killing automation.

Why it matters: A 2022 U.S. survey of 1,225 people by chat software developer Tidio found that 65 percent of respondents — including 69 percent of university graduates — feared that they soon would lose their jobs to automation. The new report could improve people’s trust in technology by showing how such worries have played out in recent years. It should spur thinking about how to integrate AI safely and productively into workplaces of all kinds.

We’re thinking: Automation has always been a part of industrialized societies, and its impacts can be substantial. (How many elevator operators do you know?) However, when it complements human work, it often leads to job growth that counters the losses — for example, the rise of automobiles led to lots of work for taxi drivers.

A MESSAGE FROM DEEPLEARNING.AI

Are you looking to land an AI job but not sure what steps to take? Learn how to chart your path during our upcoming panel discussion, “How to Build a Real-World AI Project Portfolio,” on November 29, 2022, at 9 a.m. Pacific Standard Time.

What the Missing Frames Showed

Neural networks can describe in words what’s happening in pictures and videos — but can they make sensible guesses about things that happened before or will happen afterward? Researchers probed this ability.

What’s new: Chen Liang at Zhejiang University and colleagues introduced a dataset and architecture, called Reasoner, that generates text descriptions of hidden, or masked, events in videos. They call this capability Visual Abductive Reasoning.

Key insight: To reason about an event in the past or future, it’s necessary to know about events that came before and/or after it, including their order and how far apart they were — what happened immediately before and/or after is most important, and more distant events add further context. A transformer typically encodes the positions of input tokens either one way (a token’s absolute position in the sequence of tokens) or the other (its pairwise distance from every other token), but not both. However, it’s possible to modify these positional encoding styles by producing an embedding for each pair of tokens that’s different from the inversion of each pair — for example, producing different embeddings for the pairs of positions (1,3) and (3,1). This approach captures both the order of events and their distance apart, making it possible to judge the relevance of any event to the events that surround it.
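A minimal sketch of the idea, not the authors’ implementation: it indexes a table by the signed offset between two positions, so the pairs (1,3) and (3,1) read different entries, and adds the resulting matrix to the attention scores before the softmax. The table holds one made-up scalar per offset, where a trained model would learn these values, and every function name here is hypothetical.

```python
import math

def directional_bias(n, table):
    """Return an n x n matrix whose entry (i, j) is table[(j - i) + (n - 1)].

    The signed offset j - i encodes both distance and order, so the
    pairs (1, 3) and (3, 1) read different entries of the table.
    """
    return [[table[(j - i) + (n - 1)] for j in range(n)] for i in range(n)]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(scores, bias):
    """Add the positional bias to the content scores, then normalize each row."""
    return [
        softmax([s + b for s, b in zip(s_row, b_row)])
        for s_row, b_row in zip(scores, bias)
    ]

# Hypothetical example: 4 events, one scalar per signed offset -3..+3.
# These values are invented for illustration; a real model would learn them.
n = 4
table = [-0.3, -0.2, 0.5, 0.0, 1.0, 0.1, -0.1]  # offsets -3, -2, -1, 0, +1, +2, +3
bias = directional_bias(n, table)
scores = [[0.0] * n for _ in range(n)]  # uniform content scores
weights = attention_weights(scores, bias)

print(bias[1][3], bias[3][1])  # same distance, opposite order, different bias
```

With uniform content scores, the attention weights depend only on the bias, so the first event attends most strongly to the event that immediately follows it (offset +1 has the largest value in this made-up table).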

How it works: The authors trained an encoder and decoder. The training dataset included more than 8,600 clips of daily activities found on the web and television. Each clip depicted an average of four sequential events with text descriptions such as “a boy throws a frisbee out and his dog is running after it,” “the dog caught the frisbee back,” and “frisbee is in the boy’s hand.” The authors masked one event per clip. The task was to generate a description of each event in a clip including the masked one.

- The authors randomly sampled 50 frames per event and produced a representation of each frame using a pretrained ResNet. They masked selected events.

- The encoder, a vanilla transformer, collected the frame representations into visual representations. In addition to the self-attention matrix, it learned a matrix of embeddings that represented the relative event positions along with their order. It added the two matrices when calculating attention.

- The decoder comprised three stacked transformers, each of which generated a sentence that described each event. It also produced a confidence score for each description (the average probability per word), which helped successive transformers to refine the descriptions.

- During training, one term of the loss function encouraged the system to generate descriptions similar to the ground-truth descriptions. Another term encouraged it to minimize the difference between the encoder’s representation of masked and unmasked versions of an event.
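The confidence score described above (the average probability per word) can be sketched as follows. The per-word probabilities are invented for illustration, and the function name is hypothetical; how the later transformers consume the score is not shown.

```python
def description_confidence(word_probs):
    """Average probability the decoder assigned to each generated word."""
    if not word_probs:
        raise ValueError("need at least one word probability")
    return sum(word_probs) / len(word_probs)

# Hypothetical per-word probabilities for two generated descriptions.
confident = description_confidence([0.9, 0.8, 0.7])
uncertain = description_confidence([0.4, 0.2, 0.3])

print(f"confident: {confident:.2f}, uncertain: {uncertain:.2f}")
```

A low score flags a description that a later stage would have more reason to revise.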

Results: The authors compared Reasoner to the best competing method, PDVC, a video captioner trained to perform their task. Three human volunteers evaluated the generated descriptions of masked events in 500 test-set examples drawn at random. The evaluators preferred Reasoner in 29.9 percent of cases, preferred PDVC in 10.4 percent of cases, found them equally good in 13.7 percent of cases, and found them equally bad in 46.0 percent of cases. The authors also pitted Reasoner’s output against descriptions of masked events written by humans. The evaluators preferred human-generated descriptions in 64.8 percent of cases, found them equally good in 22.1 percent of cases, found them equally bad in 4.2 percent of cases, and preferred Reasoner in 8.9 percent of cases.

Why it matters: Reasoning over events in video is impressive but specialized. However, many NLP practitioners can take advantage of the authors’ innovation in using transformers to process text representations. A decoder needs only one transformer to produce descriptions, but the authors improved their descriptions by stacking transformers and using the confidence of previous transformers to help the later ones refine their output.

We’re thinking: Given a context, transformer-based text generators often stray from it — sometimes to the point of spinning wild fantasies. This work managed to keep transformers focused on a specific sequence of events, to the extent that they could fill in missing parts of the sequence. Is there a lesson here for keeping transformers moored to reality?

AI’s Year in Review

2022 was a big year for AI-driven upstarts and scientific discovery, according to a new survey.

What’s new: The fifth annual State of AI Report details the biggest breakthroughs, business impacts, social trends, and safety concerns.

Looking back: Investors Nathan Benaich and Ian Hogarth reviewed research papers, industry surveys, financial reports, funding news, and more. Among their key findings:

- Small AI had an outsized impact. Startups like Stability AI, which was founded in 2019 and already is valued at $1 billion, and upstart research collaborations like BigScience, the group behind the open source BLOOM large language model, are bucking expectations that tech giants would crowd smaller competitors out of AI innovation. The trend is compounded by an exodus of researchers from places like OpenAI, DeepMind, and Meta, many of whom are joining or launching AI startups.

- AI drove scientific breakthroughs in biochemistry, materials science, mathematics, and nuclear physics. However, a staggering portion of such research suffers from issues like data leakage (when identical or overly similar examples appear in both training and test sets) and overfitting. Such flaws have prompted fears that AI-driven science is mired in a crisis of reproducibility.

- Researchers gave serious attention to safety concerns like harmful output, undesirable social impact, and uncontrolled artificial general intelligence. In one survey of researchers, 69 percent believed that safety deserves a higher priority (up from 68 percent in a 2021 survey and 49 percent in a 2016 survey).

- China’s research community hit the accelerator. U.S. AI researchers published over 10,000 papers in 2022, up 11 percent from the prior year. Their China-based counterparts published fewer than 7,500 papers, a 24 percent jump from 2021. U.S. researchers led in natural language processing tasks, while Chinese researchers dominated papers on autonomous vehicles, computer vision, and machine translation.

Looking ahead: The authors offer predictions for the year ahead. Among them:

- DeepMind will train a 10-billion-parameter multimodal reinforcement learning model, an order of magnitude greater than the company’s 1.2-billion-parameter Gato.

- At least one of the five biggest tech companies will invest over $1 billion in startups devoted to building artificial general intelligence.

- Nvidia’s dominance in AI chips will cause at least one of its high-profile startup competitors to be acquired for less than half its current value or shuttered outright.

Hits and misses: The authors also graded their predictions from last year. A sampling:

- Correct: DeepMind would make a major breakthrough in the physical sciences. (The Google division helped publish three papers, including work that found materials’ electron configurations and, consequently, their properties.)

- Incorrect: One or more startups devoted to making specialized AI chips, such as Graphcore, Cerebras, or Mythic, would be acquired by a larger firm.

Why it matters: As investors, the authors earn their bread, butter, and Teslas by developing a keen sense of which tech trends have the greatest commercial value. Their perspective may not be omniscient, but it can be helpful to know what the funders are betting on.

We’re thinking: We’re not enamored of projections in a field that changes as rapidly as AI, but we’re happy when forecasters take a critical look at their own previous predictions.