Dear friends,

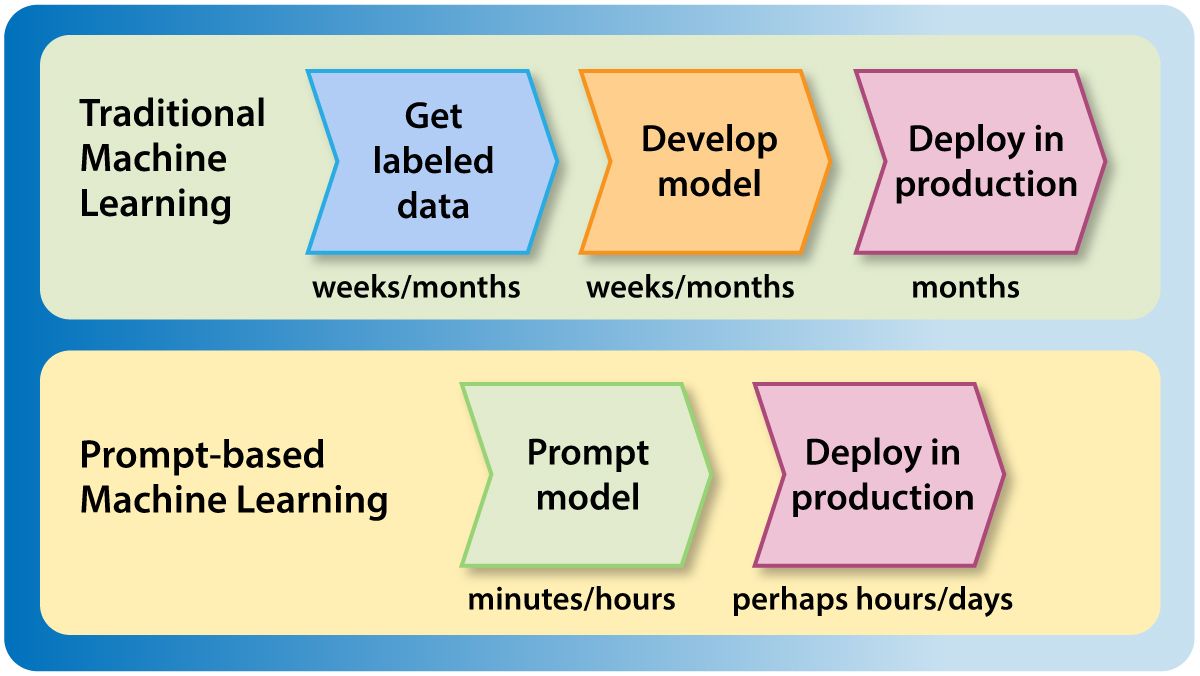

A few weeks ago, I wrote about my team at Landing AI’s work on visual prompting. With the speed of building machine learning applications through text prompting and visual prompting, I’m seeing a trend toward building and deploying models without using a test set. This is part of an important trend of speeding up getting models into production.

The test set has always been a sacred aspect of machine learning development. In academic machine learning work, test sets are the cornerstone of algorithm benchmarking and publishing scientific conclusions. Test sets are also used in commercial machine learning applications to measure and improve performance and to ensure accuracy before and after deployment.

But thanks to prompt-based development, in which you can build a model simply by providing a text prompt (such as “classify the following text as having either a positive or negative sentiment”) or a visual prompt (by labeling a handful of pixels to show the model what object you want to classify), it is possible to build a decent machine learning model with very few examples (few-shot learning) or no examples at all (zero-shot learning).

Previously, if we needed 10,000 labeled training examples, then the additional cost of collecting 1,000 test examples didn’t seem onerous. But the rise of zero-shot and few-shot learning — driven by prompt-based development — is making test set collection a bottleneck.

Thus I'm seeing more and more teams use a process for development and deployment that looks like this:

- Use prompting to develop a model. This can take minutes to hours.

- Deploy the model to production and run it on live data quickly but safely, perhaps by running in “shadow mode,” where the model’s inferences are stored and monitored but not yet used. (More on this below.)

- If the model’s performance is acceptable, let it start making real decisions.

- Only after the model is in production, and only if we need to benchmark more carefully (say, to eke out a few percentage points of performance improvement), collect test data to create a more careful benchmark for further experimentation and development. But if the system is doing well enough, don’t bother with this.
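
The shadow-mode step in the process above can be sketched in a few lines. This is a minimal illustration, not a production recipe: the class name `ShadowDeployment`, the promotion thresholds, and the use of agreement with the current decision-maker as the measure of "acceptable" performance are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class ShadowDeployment:
    """Runs a candidate model alongside the current decision-maker.

    While `live` is False, the candidate's predictions are only logged;
    the existing decision (say, a human inspector's) is what gets used.
    """
    candidate: Callable[[str], str]
    min_samples: int = 100       # hypothetical threshold: logged items needed
    min_agreement: float = 0.95  # hypothetical threshold: required match rate
    live: bool = False
    log: List[Tuple[str, str, str]] = field(default_factory=list)

    def handle(self, item: str, current_decision: str) -> str:
        """Store the candidate's inference and return the decision to act on."""
        prediction = self.candidate(item)
        self.log.append((item, prediction, current_decision))
        return prediction if self.live else current_decision

    def agreement(self) -> float:
        """Fraction of logged items where the candidate matched the current decision."""
        if not self.log:
            return 0.0
        matches = sum(1 for _, pred, ref in self.log if pred == ref)
        return matches / len(self.log)

    def maybe_promote(self) -> bool:
        """Let the candidate make real decisions once it has earned it."""
        if len(self.log) >= self.min_samples and self.agreement() >= self.min_agreement:
            self.live = True
        return self.live
```

In use, each incoming item goes through `handle` together with the decision the existing process made, and `maybe_promote` is checked periodically. For higher-risk applications, agreement with the current process would not be a sufficient bar.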

I’m excited by this process, which significantly shortens the time it takes to build and deploy machine learning models. However, there is one important caveat: In certain applications, a test set is important for managing risk of harm. Many deployments don’t pose a significant risk of harm; for example, a visual inspection system in a smartphone factory that initially shadows a human inspector and whose outputs aren’t used directly yet. But if we're developing a system that will be involved in decisions about healthcare, criminal justice, finance, insurance, and so on, where inaccurate outputs or bias could cause significant harm, then it remains important to collect a rigorous test set and deeply validate the model’s performance before allowing it to make consequential decisions.

The occurrence of concept drift and data drift can make the very notion of a “test set” problematic in practical applications, because the data saved for testing no longer matches the real distribution of input data. For this reason, the best test data is production data. For applications where it’s safe and reasonable to deploy without using a test set, I’m excited about how this can speed up development and deployment of machine learning applications.

Keep learning!

Andrew

News

Google Adds AI Inside and Out

Google showcased a flood of new features in its latest bid to get ahead in the generative AI arms race.

What’s new: The company demonstrated AI features for consumers and developers at its annual I/O conference.

PaLM powered: More than two dozen of the new features, including Bard and Duet AI (see below), are powered by a new large language model called PaLM 2. Google trained PaLM 2 on tasks similar to those in Google's UL2 pretraining framework, using data in more than 100 natural languages and numerous programming languages. It will be available as a cloud service in four unspecified sizes.

- Google showcased two fine-tuned versions of PaLM 2: Med-PaLM 2, fine-tuned to answer medical questions; and SecPaLM, fine-tuned to recognize malware and analyze network security vulnerabilities.

- Developers can access PaLM 2 via Google's cloud development platform Vertex, or join a waitlist for the API.

- CEO Sundar Pichai said PaLM 2’s successor will be a multimodal model called Gemini.

App assistance: Duet AI is a suite of text generation tools for Google Workspace and Cloud.

- Consumer-facing features include a tool that generates messages for Gmail, a custom image generator for Slides, and automated cell-labeling for Sheets. Access is limited to a waitlist.

- Duet AI powers development tools on Google Cloud, including code completion, live debugging, and a chatbot that provides code-writing advice for Go, Java, JavaScript, Python, and SQL. Access is available via waitlist.

New foundation models: Vertex offers three new foundation models: Chirp for speech-to-text, Codey for code completion, and Imagen for text-to-image generation. Users can join a waitlist via Vertex.

Bard handles images: Users no longer have to join a waitlist for access to the Bard chatbot, and its language capabilities have been expanded from English to include Japanese and Korean. It is now available in 180 countries, though not the EU or Canada. Bard can now respond to image-based queries, provide images in its responses, and generate custom images using Adobe’s image generation model, Firefly.

Search enhancements: An experimental version of Google Search will generate text answers to queries using an unidentified language model.

- Users who click suggested follow-up questions will enter a chat dialogue with Bard.

- Google Search will generate snippets of code or programming advice in response to software development queries.

- Eligible users can opt in through their Google account.

Why it matters: Google’s new capabilities are the latest salvo in an ongoing competition to capture generative AI’s market potential to greatest effect.

We’re thinking: Just days ago, a leaked Google memo talked about Google and OpenAI’s lack of moat when it comes to LLM technology. It described how open source offerings of LLMs are racing ahead, making it challenging for any company to maintain a significant and enduring lead over competitors in the quality of its models. We think the impressive I/O presentation by Sundar Pichai and team, however, reminded everyone of Google’s tremendous distribution advantages. Google owns many platforms and products (such as Search, Gmail, Android, Chrome, and YouTube) with over 2 billion users, and this gives it numerous ways to get generative AI to users. In the era of generative AI, we are increasingly seeing distribution as a moat for businesses.

The Politics of Language Models

Do language models have their own opinions about politically charged issues? Yes — and they probably don’t match yours.

What's new: Shibani Santurkar and colleagues at Stanford compared opinion-poll responses of large language models with those of various human groups.

How it works: The authors collected multiple-choice questions based on surveys of public opinion in the United States. They compared answers generated by nine language models (three from AI21 Labs and six from OpenAI) with those of 60 demographic groups. The groups varied according to sex, age, race, geography, relationship status, citizenship status, education, political party affiliation, religious affiliation, and degree of religious observance.

- The authors prompted the models with multiple-choice questions. They compared the model’s probability distribution to the distribution of human answers; that is, they compared the model’s confidence in each answer to the percentage of each demographic group that gave that answer.

- In separate tests, prior to posing questions, they prompted the models to express the opinion of a particular demographic group. For instance, “Answer the following question as if, in politics today, you consider yourself a Democrat.”

Results: The authors compared the distributions of model and human answers according to a formula based on the Wasserstein distance, also known as earth mover’s distance. In their formula, 1 is a perfect match.

- Generally, the opinions expressed by the language models varied widely from those expressed by the overall population. For instance, relative to the overall population, across all opinions, OpenAI’s davinci scored 0.791, while the demographic group that varied most widely from the overall population scored 0.865. The average demographic group scored 0.949.

- Opinions expressed by models that were fine-tuned using reinforcement learning from human feedback (RLHF), a technique that has dramatically improved the utility of language models, were more like those of liberal, educated, and wealthy people but less like those of the overall population. For example, relative to the overall population, text-davinci-003 (which was trained using RLHF) scored 0.7.

- Prompting the models to answer from the point of view of a particular group moved them only slightly toward alignment with their human counterparts (by around 0.05 in most cases). For example, text-davinci-003, relative to Democrats, scored 0.718; prompted to answer like a Democrat, it scored 0.767. Relative to Republicans, it scored 0.679; prompted to answer like a Republican, it scored 0.748.
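
To make the scoring concrete, here is a rough sketch of a Wasserstein-based alignment score in which 1 is a perfect match. It rests on assumptions that are not from the paper as summarized here: the answer choices are treated as ordered and evenly spaced, and the distance is divided by its maximum possible value (number of choices minus one) before being subtracted from 1. The authors' exact formula may differ.

```python
from itertools import accumulate
from typing import Sequence


def wasserstein_1d(p: Sequence[float], q: Sequence[float]) -> float:
    """Earth mover's distance between two distributions over ordered,
    evenly spaced answer choices (positions 0, 1, ..., n-1).

    In one dimension this equals the summed absolute difference
    between the two cumulative distributions.
    """
    if len(p) != len(q):
        raise ValueError("distributions must cover the same answer choices")
    cdf_p = accumulate(p)
    cdf_q = accumulate(q)
    return sum(abs(a - b) for a, b in zip(cdf_p, cdf_q))


def alignment_score(p: Sequence[float], q: Sequence[float]) -> float:
    """Assumed normalization: 1 means identical distributions, 0 means all
    mass sits at opposite ends of the answer scale."""
    n = len(p)
    if n < 2:
        raise ValueError("need at least two answer choices")
    return 1.0 - wasserstein_1d(p, q) / (n - 1)


# Made-up numbers: model confidence vs. share of a group choosing each answer.
model_probs = [0.5, 0.5, 0.0]
group_shares = [0.0, 0.5, 0.5]
print(alignment_score(model_probs, group_shares))
```

Averaging such per-question scores over a survey would give one number per model and group, which is the kind of figure quoted in the bullets above.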

Behind the news: In some circles, ChatGPT has been criticized for expressing a political bias toward liberal (in U.S. terms) positions. Such allegations have prompted developers to build alternative versions that are deliberately biased in other directions. Some observers speculate that Elon Musk’s secretive AI startup is on a similar mission.

Why it matters: Large language models aren’t neutral reflections of society. They express political views that don’t match those of the general population or those of any group. Furthermore, prompting them to take on a particular group’s viewpoint doesn't bring them into line with that group. The AI community (and the world at large) must decide whether and how to manage these biases.

We're thinking: Should a language model’s opinions match those of the global average, or should different language models respond similarly to different groups? Given that a subset of the world’s population holds biased opinions, including sexist or racist views, should we build LLMs that reflect them? Should language models be allowed to express opinions at all? Much work lies ahead to make these choices and figure out how to implement them.

A MESSAGE FROM WORKERA

Identify your organization's generative AI capabilities, skill gaps, and training needs with the world's first generative AI skill assessment, from Workera. Join the beta now!

Automated Into a Job

ChatGPT is helping some workers secretly hold multiple full-time jobs at once.

What’s new: Workers are using OpenAI’s chatbot to boost their productivity so they can earn separate paychecks from a number of employers, each of whom believes they are exclusive employees, Vice reported.

What they said: Several of these so-called “overemployed” people stated that, although their jobs require a degree of human expertise, ChatGPT enables them to accomplish more in less time. They spoke anonymously to avoid revealing the ruse.

- One product manager and software engineer who holds two jobs (down from four at the height of the pandemic) said ChatGPT produces text and code with few errors, which he can fix easily.

- A financial analyst who holds three positions uses ChatGPT to automate coding Microsoft Excel macros.

- A university lecturer uses ChatGPT to automate up to 80 percent of writing tasks for one of his two side businesses. It has helped him compose spreadsheets, blog posts, business plans, and a successful grant application.

- A person who holds multiple data analytics and marketing positions uses the bot to draft advertising copy and blog posts. He said that ChatGPT cuts the time required to write a blog post from three hours to 45 minutes.

Behind the news: A March 2023 paper by two MIT economists reported that writers who used ChatGPT were 37 percent faster than those who did not.

Why it matters: This practice illustrates the real productivity gains conferred by large language models. Moreover, in a typical corporate environment, managers decide which tools workers will use and how. The “overemployed” community turns that practice on its head, using AI to boost productivity from the bottom up.

We’re thinking: It's discouraging to see people using AI to deceive employers who could benefit from the productivity gains. Beyond the ethical problems, the use of generative AI without informing employers could lead to legal questions in areas like ownership of intellectual property. Yes, let’s use these tools to be more productive, but let’s do it in honest and ethical ways.

Text-to-3D Without 3D Training Data

Researchers struggle to build models that can generate a three-dimensional scene from a text prompt largely because they lack sufficient paired text-3D training examples. A new approach works without any 3D data whatsoever.

What's new: Ben Poole and colleagues at Google and UC Berkeley built DreamFusion to produce 3D scenes from text prompts. Rather than training on text-3D pairs, the authors used a pretrained text-to-image diffusion model to guide the training of a separate model that learned to represent a 3D scene.

Key insight: A neural radiance field (NeRF) learns to represent a 3D scene from 2D images of that scene. Is it possible to replace the 2D images with a text prompt? Not directly, but a pretrained text-to-image diffusion model, which generates images by starting with noise and removing the noise in several steps, can take a text prompt and generate 2D images for NeRF to learn from. The NeRF image (with added noise) conditions the diffusion model, and the diffusion model’s output provides ground truth for the NeRF.

How it works: NeRF generated a 2D image, and the authors added noise. Given the noisy NeRF image and a text prompt, a 64x64 pixel version of Google's Imagen text-to-image diffusion model removed the noise to produce a picture that reflected the prompt. By repeating these steps, NeRF gradually narrowed the difference between its output and Imagen’s.

- Given a camera position, angle, and focal length as well as a light position, NeRF (which started out randomly initialized) rendered an image of the scene. The authors applied a random degree of noise to the image.

- Given the noisy image, a text prompt, and a simple text description of the camera angle (“overhead view,” “front view,” “back view,” or “side view”), Imagen removed the noise, generating a more coherent image that better reflected the prompt.

- The authors trained NeRF to minimize the difference between its own image and Imagen’s. They repeated the cycle 15,000 times using the same prompt, a different camera angle, and a different light position each time.

- The following technique kept NeRF from interpreting the prompt on a flat surface (painting, say, a peacock on a surfboard on a flat surface rather than modeling those elements in 3D): At random, NeRF rendered the scene either (i) without colors but with shading (the pattern of light and dark formed by light reflecting off 3D objects), (ii) with colors but without shading, or (iii) with both colors and shading.

- Having trained NeRF, the authors extracted a 3D mesh using the marching cubes algorithm.
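
The render, add noise, denoise, update cycle described above can be illustrated with a toy loop. Everything here is a stand-in and an assumption made for illustration: the "scene" is just a list of pixel values rather than a NeRF, `denoise` is a hand-written function that pulls pixels toward a fixed target rather than a diffusion model conditioned on a prompt, and camera and lighting are left out entirely. It shows only the shape of the loop, not DreamFusion's actual loss.

```python
import random
from typing import List


def render(scene: List[float]) -> List[float]:
    """Stand-in for NeRF rendering: the 'scene' is simply its own image."""
    return list(scene)


def denoise(noisy: List[float], target: List[float], strength: float = 0.8) -> List[float]:
    """Stand-in for the diffusion model: moves each noisy pixel most of the
    way toward a target image that plays the role of 'what the prompt describes'."""
    return [n + strength * (t - n) for n, t in zip(noisy, target)]


def train(target: List[float], steps: int = 500, lr: float = 0.1, seed: int = 0) -> List[float]:
    rng = random.Random(seed)
    scene = [rng.random() for _ in target]  # randomly initialized scene
    for _ in range(steps):
        image = render(scene)
        sigma = rng.uniform(0.0, 1.0)  # a random degree of noise each cycle
        noisy = [x + sigma * rng.gauss(0.0, 1.0) for x in image]
        cleaned = denoise(noisy, target)
        # Gradient step on 0.5 * (image - cleaned)^2, treating `cleaned` as a
        # fixed training signal (no gradient flows through the denoiser).
        scene = [s - lr * (x - c) for s, x, c in zip(scene, image, cleaned)]
    return scene


target_image = [0.0, 1.0, 0.5, 0.25]
learned = train(target_image)
print([round(v, 2) for v in learned])
```

Because the denoiser is never updated, all learning happens in the scene parameters, which mirrors how the pretrained text-to-image model only guides training of the 3D representation.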

Results: The authors compared DreamFusion images to 2D renders of output from CLIP-Mesh, which deforms a 3D mesh to fit a text description. They evaluated the systems according to CLIP R-Precision, a metric that measures the similarity between an image and a text description. For each system, they compared the percentage of images that were more similar to the prompt than to 153 other text descriptions. DreamFusion achieved 77.5 percent while CLIP-Mesh achieved 75.8 percent. (The authors note that DreamFusion’s advantage is all the more impressive considering an overlap between the test procedure and CLIP-Mesh’s training.)
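
As a rough sketch of how a retrieval-style metric like this could be tallied, the function below counts an image as a hit when its similarity to its own prompt exceeds its similarity to every distractor description. The function name, the input layout, and the strict tie handling are assumptions for illustration; in practice the similarity scores would come from CLIP, while the numbers below are made up.

```python
from typing import Sequence, Tuple


def r_precision(results: Sequence[Tuple[float, Sequence[float]]]) -> float:
    """Fraction of images whose own prompt outranks every distractor.

    Each entry is (similarity to the image's own prompt,
    similarities to the other text descriptions).
    """
    if not results:
        raise ValueError("need at least one image")
    hits = sum(1 for own, others in results if all(own > o for o in others))
    return hits / len(results)


# Four images, two distractor descriptions each (the evaluation above used 153).
scores = [
    (0.9, [0.1, 0.2]),    # hit
    (0.3, [0.5, 0.1]),    # miss: a distractor scored higher
    (0.7, [0.6, 0.65]),   # hit
    (0.4, [0.4, 0.1]),    # miss: a tie does not count
]
print(r_precision(scores))  # 0.5
```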

Why it matters: While text-3D data is rare, text-image data is plentiful. This enabled the authors to devise a clever twist on supervised learning: To train NeRF to transform text into 3D, they used Imagen’s text-to-image output as a supervisory signal.

We're thinking: This work joins several demonstrations of the varied uses of pretrained diffusion models.

Data Points

OpenAI’s CEO asked U.S. to regulate AI in congressional hearing

In testimony before the Senate Judiciary Committee, Sam Altman acknowledged AI risks and proposed the creation of a government agency in charge of licensing, auditing, and establishing safety standards for AI models. (Vice)

Amazon to add more AI features to Astro, its home robot

The robot will reportedly use a new large language model called Burnham to engage in free-flowing conversations with humans. (The Verge)

CNET writers drive unionization effort amid concerns over AI-generated content

The union would give collective bargaining power to around 100 employees regarding various subjects, including fair compensation, editorial independence, and the use of AI for content creation. (The Verge)

Baidu launched search chatbot AI Mate

AI Mate, based on the company’s Ernie Bot released in March 2023, will be accessible from the landing page for its search engine. (South China Morning Post)

Scammer sold AI-generated Frank Ocean songs as leaked tracks

The scammer generated Ocean’s voice using high-quality vocal fragments and used the vocals to create nine fake songs, which they sold in underground music communities for thousands of dollars. (Vice)

China reports first arrest related to the use of ChatGPT

A man was detained for using the AI chatbot to create and spread false news about a fatal train crash. This arrest comes after China tightened regulations on the use of AI to combat the dissemination of fabricated information. (Reuters)

Google unveiled Project Gameface, a hands-free gaming mouse

The AI-powered tool enables Windows users to control their mouse cursor through head movements and facial gestures captured by a webcam. (The Verge and Google)

Research: Robot learns user preferences for household cleanup tasks

Researchers developed TidyBot, a robot that uses large language model summarization to infer sets of rules and autonomously sort laundry, recycle cans, and organize objects based on natural language commands. (Princeton University)

Stability AI launched a text-to-animation tool

The open source tool, called Stable Animation SDK, allows users to generate animations through text alone, or by combining text with still images and videos. (Stability AI)

IBM announced Watson-X, a business-focused AI and data platform

The platform offers three different toolkits that enable organizations to train, validate, tune, and deploy models, in addition to streamlined AI application development. (IBM)