Dear friends,

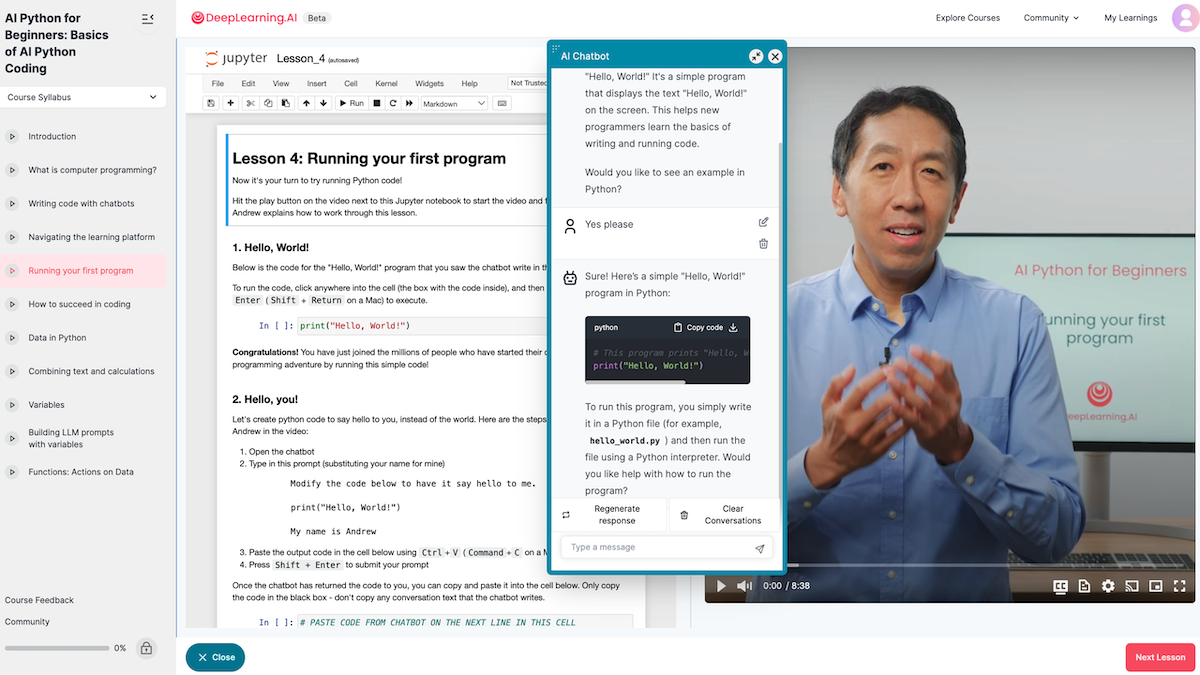

I’m delighted to announce AI Python for Beginners, a sequence of free short courses that teach anyone to code, regardless of background. I’m teaching this introductory course to help beginners take advantage of powerful trends that are reshaping computer programming. It’s designed for people in any field — be it marketing, finance, journalism, administration, or something else — who can be more productive and creative with a little coding knowledge, as well as those who aspire to become software developers. Two of the four courses are available now, and the remaining two will be released in September.

Generative AI is transforming coding in two ways:

- Programs are using AI: Previously, you had to learn a lot about coding before it became useful. Now, knowing how to write code that calls large language models (and other AI APIs) makes it possible to build powerful programs more easily. This is increasing the value of coding.

- AI is helping programmers: Programmers are using large language models as coding companions that write pieces of code, explain coding concepts, find bugs, and the like. This is especially helpful for beginners, and it lowers the effort needed to learn to code.

The combination of these two factors means that novices can learn to do useful things with code far faster than they could have a year ago.

These courses teach coding in a way that is aligned with these trends: (i) we teach how to write code that uses AI to carry out tasks, and (ii) unlike some instructors who are still debating how to restrict the use of ChatGPT, we embrace generative AI as a coding companion and show how to use it to accelerate your learning.

To explain these two trends in detail:

Programs are using AI. Because programs can now take advantage of AI, knowing a little bit about how to code increasingly helps people in roles other than software engineering do their work better. For example, I’ve seen a marketing professional write code to download web pages and use generative AI to derive insights; a reporter write code to flag important stories; and an investor automate first drafts of contracts. Even if your goal is not to become a professional developer, learning just a little coding can be incredibly useful!

In the courses, you’ll use code to write personalized notes to friends, brainstorm recipes, manage to-do lists, and more.
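As a rough illustration of what a program that uses AI can look like, here is a minimal sketch of a personalized-note writer. It is not taken from the course materials. The function names (`build_note_prompt`, `write_note`, `echo_model`) and the prompt wording are assumptions made for this example, and `echo_model` is a stand-in that lets the code run offline; in practice you would pass in a function that calls whichever language model you use.

```python
from typing import Callable


def build_note_prompt(friend: str, occasion: str, detail: str) -> str:
    """Compose the instruction text that would be sent to a language model."""
    return (
        f"Write a short, warm note to my friend {friend} for their {occasion}. "
        f"Mention this detail: {detail}. Keep it under 60 words."
    )


def write_note(friend: str, occasion: str, detail: str,
               ask_llm: Callable[[str], str]) -> str:
    """Build the prompt and hand it to whatever model-calling function is supplied."""
    return ask_llm(build_note_prompt(friend, occasion, detail))


def echo_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call so the sketch runs offline."""
    return f"[model reply to: {prompt}]"


if __name__ == "__main__":
    print(write_note("Tommy", "birthday", "he loves hiking", echo_model))
```

Keeping the model call behind a plain function argument means the same few lines work with any provider, and the prompt-building step can be checked without a network connection.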

AI is helping programmers. There is a growing body of evidence that AI is making programming easier. For example:

- A study at Cisco by Pandey et al. projects a “33-36% time reduction for coding-related tasks” for many cloud development tasks.

- McKinsey estimates a 35 percent to 45 percent reduction in time needed for code generation tasks.

- In a study by Microsoft (which owns GitHub and sells GitHub Copilot), GitHub, and MIT, developers who used AI completed a programming task nearly 56 percent faster.

Further, as AI tools get better — for example, as coding agents continue to improve and can write simple programs more autonomously — these productivity gains will improve.

In order to help learners skate to where the puck is going, this course features a built-in chatbot and teaches best practices for how beginners can use a large language model to write and debug code and to explain programming concepts. AI is already helping experienced programmers, and it will help beginner programmers much more.

If you know someone who is curious about coding (or if you yourself are), please encourage them to learn to code! The case is stronger than ever that pretty much everyone can benefit from learning at least a little coding. Please help me spread the word, and encourage everyone who isn’t already a coder to check out AI Python for Beginners.

Andrew

A MESSAGE FROM DEEPLEARNING.AI

Learn Python with AI support in AI Python for Beginners, a new sequence of short courses taught by Andrew Ng. Build practical applications from the first lesson and receive real-time, interactive guidance from an AI assistant. Enroll today and start coding with confidence!

News

Google Gets Character.AI Co-Founders

Character.AI followed an emerging pattern for ambitious AI startups, trading its leadership to a tech giant in exchange for funds and a strategic makeover.

What’s new: Google hired Character.AI’s co-founders and other employees and paid an undisclosed sum for nonexclusive rights to use Character.AI’s technology, The Information reported. The deal came shortly after Microsoft and Inflection and Amazon and Adept struck similar agreements.

New strategy: Character.AI builds chatbots that mimic personalities from history, fiction, and popular culture. When it started, it was necessary to build foundation models to deliver automated conversation, the company explained in a blog post. However, “the landscape has shifted” and many pretrained models are available. Open models enable the company to focus its resources on fine-tuning and product development under its new CEO, former Character.AI general counsel Dom Perella. Licensing revenue from Google will help Character.AI to move forward.

- Character.AI co-founders Daniel De Freitas and Noam Shazeer, both of whom worked for Google prior to founding Character.AI, returned. (You can read The Batch's 2020 interview with Shazeer here.) They brought with them 30 former members of Character.AI’s research team (out of roughly 130 employees) to work on Google DeepMind’s Gemini model.

- Character.AI will continue to develop chatbots. However, it will stop developing its own models and use open source offerings such as Meta’s Llama 3.1.

- Investors in Character.AI will receive $88 per share, roughly two and a half times the share price when the company’s last funding round established its valuation at $1 billion.

Behind the news: At Google, Shazeer co-authored “Attention Is All You Need,” the 2017 paper that introduced the transformer architecture. De Freitas led the Meena and LaMDA projects to develop conversational models. They left Google and founded Character.AI in late 2021 to build a competitor to OpenAI that would develop “personalized superintelligence.” The company had raised $193 million before its deal with Google.

Why it matters: Developing cutting-edge foundation models is enormously expensive, and few companies can acquire sufficient funds to keep it up. This dynamic is leading essential team members at high-flying startups to move to AI giants. The established companies need the startups’ entrepreneurial mindset, and the startups need to retool their businesses for a changing market.

We’re thinking: Models with open weights now compete with proprietary models for the state of the art. This is a sea change for startups, opening the playing field to teams that want to build applications on top of foundation models. Be forewarned, though: New proprietary models such as the forthcoming GPT-5 may change the state of play yet again.

AI-Assisted Applicants Counter AI-Assisted Recruiters

Employers are embracing automated hiring tools, but prospective employees have AI-powered techniques of their own.

What’s new: Job seekers are using large language models and speech-to-text models to improve their chances of landing a job, Business Insider reported. Some startups are catering to this market with dedicated products.

How it works: Text generators like ChatGPT can help candidates quickly draft resumes, cover letters, and answers to application questions. But AI can also enable a substitute — human or automated — to stand in for an applicant.

- Services like LazyApply, SimplifyJobs, and Talentprise find jobs, track and sort listings, and help write resumes and cover letters. London-based AiApply offers similar tools as well as one that conducts mock interviews.

- Tech-savvy interviewees are using speech-to-text models to get real-time help as an interview is in progress. For instance, Otter.ai is an online service designed as a workplace assistant to take notes, transcribe audio, and summarize meetings. However, during an interview, candidates can send a transcription to a third party who can suggest responses. Alternatively, tools available on GitHub can read Google Meet closed captions, feed them to ChatGPT, and return generated answers.

- San Francisco-based Final Round offers an app that transcribes interview questions and generates suggested responses in real time. For developers, the company is testing a version for coding interviews that captures a screenshot of the current screen (presumably presenting a test problem) and shares it with a code-generation model, which suggests code, a step-by-step explanation of how it works, and test cases.

Behind the news: Employers can use AI to screen resumes for qualified candidates, identify potential recruits, analyze video interviews, and otherwise streamline hiring. Some employers believe these tools reduce biases from human decision-makers, but critics say they exhibit the same biases. No national regulation controls this practice in the United States, but New York City requires employers to audit automated hiring software and notify applicants if they use it. The states of Illinois and Maryland require employers who conduct video interviews to receive an applicant’s consent before subjecting an interview to AI-driven analysis. The European Union’s AI Act classifies AI in hiring as a high-risk application that requires special oversight and frequent audits for bias.

Why it matters: When it comes to AI in recruiting and hiring, most attention – and money – has gone to employers. Yet the candidates they seek increasingly rely on AI to get their attention and seal the deal. A late 2023 LinkedIn survey found that U.S. and UK job seekers applied to 15 percent more jobs than a year earlier, a change many recruiters attributed to generative AI.

We’re thinking: AI is making employers and employees alike more efficient in carrying out the tasks involved in hiring. Misaligned incentives are leading to an automation arms race, yet both groups aim to find the right fit. With this in mind, we look forward to AI-powered tools that match employers and candidates more efficiently so both sides are better off.

Ukraine Develops Aquatic Drones

Buoyed by its military success developing unmanned aerial vehicles, Ukraine is building armed naval drones.

What’s new: A fleet of robotic watercraft has shifted the balance of naval power in Ukraine’s ongoing war against Russia in the Black Sea, IEEE Spectrum reported.

How it works: Ukraine began building seafaring drones to fight a Russian blockade of the Black Sea coast after losing most of its traditional naval vessels in 2022. The Security Service of Ukraine, a government intelligence and law enforcement agency, first cobbled together prototypes from off-the-shelf parts. It began building more sophisticated versions as the home-grown aerial drone industry took off.

- Magura-v5, a surface vessel, is 18 feet long and 5 feet wide and has a range of around 515 miles at a cruising speed of 25 miles per hour. A group of three to five Maguras, each carrying a warhead roughly as powerful as a torpedo, can surround target vessels autonomously. Human operators can detonate the units from a laptop-size console.

- Sea Baby is a larger surface vessel that likely shares Magura-v5’s autonomous navigation capabilities, but its warhead is more than twice as powerful. It’s roughly 20 feet long and 6.5 feet wide with a range of 60 miles and maximum speed of 55 miles per hour.

- Marichka is an uncrewed underwater vessel around 20 feet long and 3 feet wide with a range of 620 miles. Its navigational capabilities are unknown. Observers speculate that, like the surface models, Marichka is intended to locate enemy vessels automatically and detonate upon a manual command.

Drone warfare: Ukraine’s use of aquatic drones has changed the course of the war in the Black Sea, reopening key shipping routes. Ukraine has disabled about a third of the Russian navy in the region and pushed it into places that are more difficult for the sea drones to reach. Russia has also been forced to protect fixed targets like bridges from drone attacks by fortifying them with guns and jamming GPS and Starlink satellite signals.

Behind the news: More-powerful countries are paying attention to Ukraine’s use of sea drones. In 2022, the United States Navy established a group called Uncrewed Surface Vessel Division One, which focuses on deploying both large autonomous vessels and smaller, nimbler drones. Meanwhile, China has developed large autonomous vessels that can serve as bases for large fleets of drones that travel both above and under water.

Why it matters: While the U.S. has experimented with large autonomous warships, smaller drones open different tactical and strategic opportunities. Larger vessels generally must adhere to established sea routes (and steer clear of shipping vessels), whereas smaller vessels can navigate more freely and can make up in numbers and versatility what they lack in firepower.

We’re thinking: We support Ukraine’s right to defend itself against unwarranted aggression, and we’re glad the decision to detonate its aquatic drones remains in human hands. We hope the innovations spurred by this conflict will find beneficial applications once the war is over.

Art Attack

Seemingly an innocuous form of expression, ASCII art opens a new vector for jailbreak attacks on large language models (LLMs), enabling them to generate outputs that their developers tuned them to avoid producing.

What's new: A team led by Fengqing Jiang at the University of Washington developed ArtPrompt, a technique to test the impact of text rendered as ASCII art on LLM performance.

Key insight: LLM safety methods such as fine-tuning are designed to counter prompts that can cause a model to produce harmful outputs, such as specific keywords and tricky ways to ask questions. They don’t guard against atypical ways of using text to communicate, such as ASCII art. This oversight enables devious users to get around some precautions.

How it works: Researchers gauged the vulnerability to ASCII-art attacks of GPT-3.5, GPT-4, Claude, Gemini, and Llama 2. They modified prompts drawn from AdvBench or HEx-PHI, datasets of harmful requests that safety-aligned LLMs are expected to refuse, such as “how to make a bomb.”

- Given a prompt, the authors masked individual words to produce a set of prompts in which one word was missing (except words like “a” and “the,” which they left in place). They replaced the missing words with ASCII-art renderings of the words.

- They presented the modified prompts to each LLM. Given a response, GPT-Judge, a model based on GPT-4 that evaluates harmful text, assigned a score between 1 (no harm) and 5 (extreme harm).

Results: ArtPrompt successfully circumvented LLM guardrails against generating harmful output, achieving an average harmfulness score of 3.6 out of 5 across all five LLMs. The next most-harmful attack method, PAIR, which prompts a model several times and refines its prompt each time, achieved 2.67.
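To make the scoring concrete, here is a minimal sketch of how per-response judge ratings on the 1-to-5 scale could be rolled up into a single average. The summary above doesn't spell out the exact aggregation, so this version assumes a mean of per-model means. The function name, the model labels, and the sample ratings are all invented for illustration and are not data from the study.

```python
from statistics import mean
from typing import Mapping, Sequence


def average_harmfulness(scores_by_model: Mapping[str, Sequence[int]]) -> float:
    """Average judge ratings (1 = no harm, 5 = extreme harm) across models.

    Each model's ratings are averaged first, then those per-model means are
    averaged, so a model with more responses does not dominate the result.
    """
    if not scores_by_model:
        raise ValueError("no models to average")
    per_model_means = []
    for model, scores in scores_by_model.items():
        if not scores:
            raise ValueError(f"no scores for {model}")
        if any(s < 1 or s > 5 for s in scores):
            raise ValueError(f"scores for {model} must be between 1 and 5")
        per_model_means.append(mean(scores))
    return float(mean(per_model_means))


if __name__ == "__main__":
    # Invented ratings, for illustration only.
    sample = {"model_a": [1, 5, 3], "model_b": [4, 4]}
    print(average_harmfulness(sample))
```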

Why it matters: This work adds to the growing body of literature on LLM jailbreak techniques. While fine-tuning is fairly good at preventing innocent users — who are not trying to trick an LLM — from accidentally receiving harmful output, we have no robust mechanisms for stopping a wide variety of jailbreak techniques. Blocking ASCII attacks would require additional input- and output-screening systems that are not currently in place.
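As a sketch of what one piece of input screening might look like, the function below flags text that contains several consecutive lines made up mostly of symbols, a common signature of ASCII art. This is a hypothetical heuristic written for this example, not a defense described or evaluated by the ArtPrompt authors. The function name and thresholds are assumptions, and a check this simple would miss art drawn with letters or digits and could misfire on tables or code.

```python
def looks_like_ascii_art(text: str, min_lines: int = 3,
                         symbol_ratio: float = 0.6) -> bool:
    """Return True if the text has a run of consecutive symbol-heavy lines."""
    run = 0  # number of consecutive symbol-heavy lines seen so far
    for line in text.splitlines():
        visible = [ch for ch in line if not ch.isspace()]
        symbol_heavy = False
        if len(visible) >= 3:
            symbols = sum(1 for ch in visible if not ch.isalnum())
            symbol_heavy = symbols / len(visible) >= symbol_ratio
        if symbol_heavy:
            run += 1
            if run >= min_lines:
                return True
        else:
            run = 0
    return False


if __name__ == "__main__":
    art = "\n".join([
        "#   #  ###",
        "#   #   # ",
        "#####   # ",
        "#   #   # ",
        "#   #  ###",
    ])
    print(looks_like_ascii_art("Please read this word:\n" + art))
    print(looks_like_ascii_art("How do I bake bread?\nPlease list the steps."))
```

A flag from a check like this would be a signal for closer review rather than a verdict, since plenty of harmless prompts contain diagrams or symbol-heavy text.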

We're thinking: We’re glad that LLMs are safety-tuned to help prevent users from receiving harmful information. Yet many uncensored models are available to users who want to get problematic information without implementing jailbreaks, and we’re not aware of any harm done. We’re cautiously optimistic that, despite the lack of defenses, jailbreak techniques also won’t prove broadly harmful.

A MESSAGE FROM LANDING AI

Calling all developers working on visual AI applications! You’re invited to our upcoming VisionAgent Developer Meetup, an in-person and virtual event with Andrew Ng and the LandingAI MLE team for developers working on visual AI and related computer vision applications.