Dear friends,

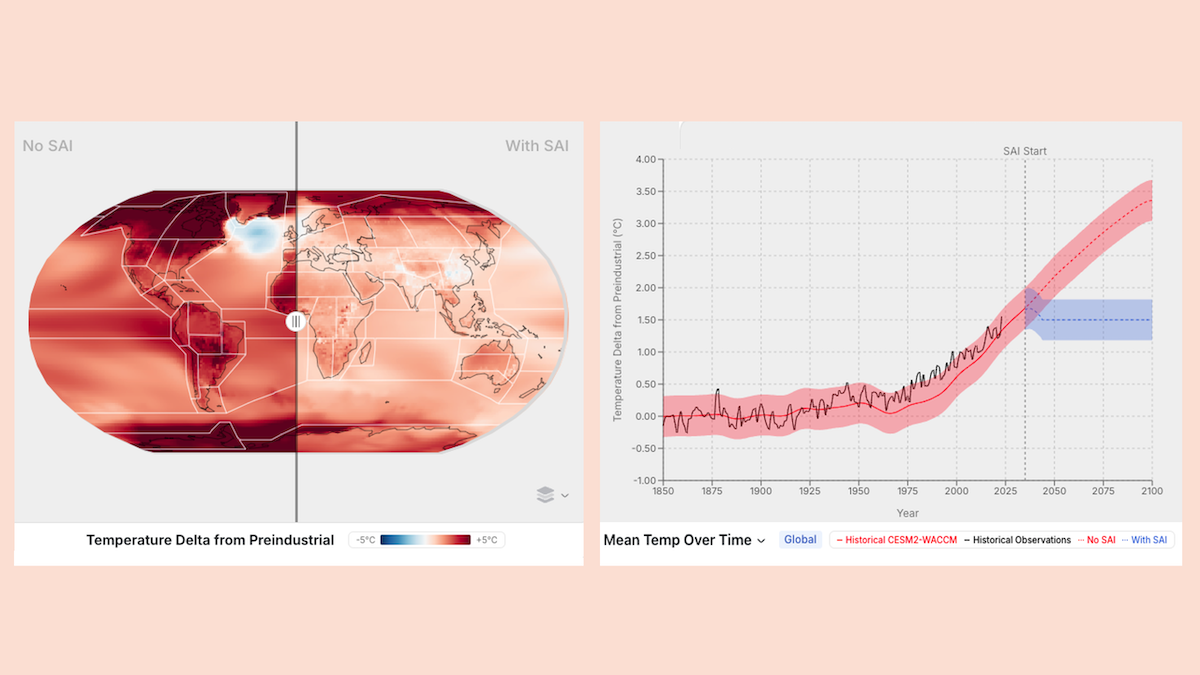

Greetings from Davos, Switzerland! Many business and government leaders are gathered here again for the annual World Economic Forum to discuss tech, climate, geopolitics, and economic growth. While the vast majority of my conversations have been on AI business implementations and governance, I have also been speaking about our latest AI climate simulator and about geoengineering. After speaking about geoengineering onstage at multiple events to a total of several hundred people, I’ve been pleasantly surprised by almost uniformly positive reactions. You can play with our simulator at planetparasol.ai.

Here’s why I think we should seriously consider geoengineering: The world urgently needs to reduce carbon emissions, but it hasn’t happened fast enough. Given recent emission trends, without geoengineering, there’s no longer any plausible path to keeping global warming to the 1.5 degrees Celsius goal set by the Paris agreement. Under reasonable assumptions, we are on a path to 2.5 degrees of warming or worse. We might be in for additional abrupt changes if we hit certain tipping points.

If you tilt a four-legged chair by a few degrees, it will fall back onto its four legs. But if you tip it far enough — beyond its “tipping point” — it will fall over with a crash. Climate tipping points are like that, where parts of our planet, warmed sufficiently, might reach a point where the planet reorganizes abruptly in a way that is impossible to reverse. Examples include a possible melting of the Arctic permafrost, which would release additional methane (a potent greenhouse gas), or a collapse of ocean currents that move warm water northward from the tropics (the Atlantic Meridional Overturning Circulation).

Keeping warming low will significantly lower the risk of hitting a tipping point. This is why the OECD’s report states, “the existence of climate system tipping points means it is vital to limit the global temperature increase to 1.5 degrees C, with no or very limited overshoot.”

The good news is that geoengineering keeps the 1.5 degree goal alive. Spraying reflective particles into the atmosphere — an idea called Stratospheric Aerosol Injection (SAI) — to reflect 1% of sunlight back into space would get us around 1 degree Celsius of cooling.

Now, there are risks to doing this. For example, just as global warming has had uneven regional effects, the global cooling impact will also be uneven. But on average, a planet with 1.5 degrees of warming would be much more livable than one with 2.5 degrees (or more). Further, after collaborating extensively with climate scientists on AI climate models and examining the output of multiple such models, I believe the risks associated with cooling down our planet will be much lower than the risks of runaway climate change.

I hope we can build a global governance structure to decide collectively whether, and if so to what extent and how, to implement geoengineering. For example, we might start with small-scale experiments (aiming for far less than 0.1 degrees of cooling) that are easy to stop or reverse at any time. Further, there is much work to be done to solve difficult engineering challenges, such as how to build and operate a fleet of aircraft to efficiently lift and spray reflective particles at the small particle sizes needed.

Even as I have numerous conversations about AI business and governance here at the World Economic Forum, I am glad that AI climate modeling is helpful for addressing global warming. If you are interested in learning more about geoengineering, I encourage you to play with our simulator at planetparasol.ai.

I am grateful to my collaborators on the simulator work: Jeremy Irvin, Jake Dexheimer, Dakota Gruener, Charlotte DeWald, Daniele Visioni, Duncan Watson-Parris, Douglas MacMartin, Joshua Elliott, Juerg Luterbacher, and Kion Yaghoobzadeh.

Keep learning!

Andrew

A MESSAGE FROM DEEPLEARNING.AI

Explore Computer Use, which enables AI assistants to navigate, use, and accomplish tasks on computers. Taught by Colt Steele, this free course covers Anthropic’s model family, its approach to AI research, and capabilities like multimodal prompts and prompt caching.

News

DeepSeek Sharpens Its Reasoning

A new open model rivals OpenAI’s o1, and it’s free to use or modify.

What’s new: DeepSeek released DeepSeek-R1, a large language model that executes long lines of reasoning before producing output. The code and weights are licensed freely for commercial and personal use, including training new models on R1 outputs. The paper provides an up-close look at the training of a high-performance model that implements a chain of thought without explicit prompting. (DeepSeek-R1-lite-preview came out in November with fewer parameters and a different base model.)

Mixture of experts (MoE) basics: The MoE architecture uses different subsets of its parameters to process different inputs. Each MoE layer contains a group of neural networks, or experts, preceded by a gating module that learns to choose which one(s) to use based on the input. In this way, different experts learn to specialize in different types of examples. Because not all parameters are used to produce any given output, the network uses less energy and runs faster than models of similar size that use all parameters to process every input.
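
To make the routing idea concrete, here is a minimal sketch of a single MoE layer in plain Python. It is a toy under stated assumptions, not DeepSeek's implementation: the class name `ToyMoELayer`, the single-linear-layer experts, the top-k routing rule, and the random (untrained) gating weights are all illustrative choices. In a real model the gate and experts are learned jointly.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(dim, rng):
    """Toy expert (assumption): one linear layer stored as a dim x dim matrix."""
    weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]

    def expert(x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

    return expert


class ToyMoELayer:
    """Hypothetical MoE layer: a gate scores every expert, only top_k run."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        self.experts = [make_expert(dim, rng) for _ in range(num_experts)]
        # Gating module: one score vector per expert (random here, learned in practice).
        self.gate = [[rng.uniform(-1, 1) for _ in range(dim)]
                     for _ in range(num_experts)]

    def forward(self, x):
        # Score each expert for this particular input.
        scores = [sum(g * xi for g, xi in zip(row, x)) for row in self.gate]
        # Keep the indices of the top_k highest-scoring experts.
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        chosen = ranked[:self.top_k]
        # Normalize the chosen experts' scores into mixing weights.
        mix = softmax([scores[i] for i in chosen])
        # Only the chosen experts are evaluated; the rest cost nothing.
        output = [0.0] * len(x)
        for weight, index in zip(mix, chosen):
            expert_out = self.experts[index](x)
            output = [o + weight * y for o, y in zip(output, expert_out)]
        return output, chosen
```

Calling `ToyMoELayer(dim=4, num_experts=8, top_k=2).forward([1.0, 0.5, -0.3, 2.0])` evaluates only 2 of the 8 experts for that input, which is where the savings in compute come from.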

How it works: DeepSeek-R1 is a version of DeepSeek-V3-Base that was fine-tuned over four stages to enhance its ability to process a chain of thought (CoT). It’s a mixture-of-experts transformer with 671 billion total parameters, 37 billion of which are active at any given time, and it accepts up to 128,000 tokens of input context. Access to the model via DeepSeek’s API costs $0.55 per million input tokens ($0.14 for cached inputs) and $2.19 per million output tokens. (In comparison, o1 costs $15 per million input tokens, $7.50 for cached inputs, and $60 per million output tokens.)
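
The price gap is easier to appreciate with a quick calculation. The sketch below uses only the per-million-token prices quoted above. The `Pricing` class, the `request_cost` helper, and the workload sizes are hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """API prices in U.S. dollars per million tokens."""
    input_per_million: float
    cached_input_per_million: float
    output_per_million: float


# Prices as quoted in the text above.
DEEPSEEK_R1 = Pricing(0.55, 0.14, 2.19)
OPENAI_O1 = Pricing(15.00, 7.50, 60.00)


def request_cost(pricing, input_tokens, output_tokens, cached_input_tokens=0):
    """Dollar cost of a workload; cached tokens are a subset of input tokens."""
    uncached = input_tokens - cached_input_tokens
    if uncached < 0:
        raise ValueError("cached_input_tokens cannot exceed input_tokens")
    dollars_times_million = (
        uncached * pricing.input_per_million
        + cached_input_tokens * pricing.cached_input_per_million
        + output_tokens * pricing.output_per_million
    )
    return dollars_times_million / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 1 million input tokens and 1 million output tokens.
    for name, pricing in [("DeepSeek-R1", DEEPSEEK_R1), ("o1", OPENAI_O1)]:
        print(f"{name}: ${request_cost(pricing, 1_000_000, 1_000_000):.2f}")
```

For that workload with no cache hits, the totals come to $2.74 for DeepSeek-R1 and $75.00 for o1, a difference of roughly 27 times.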

- The team members fine-tuned DeepSeek-V3-Base on a synthetic dataset of thousands of long-form CoT examples that were generated using multiple techniques. For instance, they prompted DeepSeek-V3-Base few-shot style with long CoTs as examples, prompted that model to generate detailed answers while evaluating and double-checking its own CoT steps, and hired human annotators to refine and process the results.

- They used group relative policy optimization, a reinforcement learning algorithm, to improve the model’s ability to solve challenging problems. For example, for math problems, they created rule-based systems that rewarded the model for returning a correct final answer in a particular format (an accuracy reward) and for showing its internal CoT steps within <think> tags (a format reward).

- For further fine-tuning, they used the in-progress versions of R1 to generate around 600,000 responses to reasoning prompts, retaining only correct responses. They mixed in another 200,000 non-reasoning examples (such as language translation pairs) either generated by DeepSeek-V3-base or from its training dataset.

- They fine-tuned the model using a final round of reinforcement learning. This step encouraged the model to further boost its accuracy on reasoning problems while generally improving its helpfulness and harmlessness.
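
The rule-based rewards in the second step can be sketched in a few lines. This is an illustration under assumptions, not DeepSeek's code: the `Answer:` line convention, the equal weighting of the two rewards, and all function names are hypothetical. The last function shows the "group relative" idea, in which each sampled response is scored against the mean and standard deviation of its own group of samples. The full algorithm also includes a clipped policy update and a KL penalty, which are omitted here.

```python
import re
import statistics


def format_reward(response):
    """1.0 if the response opens with a non-empty <think>...</think> block."""
    return 1.0 if re.match(r"\s*<think>.+?</think>", response, re.DOTALL) else 0.0


def accuracy_reward(response, reference):
    """1.0 if the last 'Answer: ...' line (assumed format) matches the reference."""
    answers = re.findall(r"^Answer:\s*(.+?)\s*$", response, re.MULTILINE)
    return 1.0 if answers and answers[-1] == reference else 0.0


def total_reward(response, reference):
    """Sum of both rule-based rewards (equal weighting is an assumption)."""
    return accuracy_reward(response, reference) + format_reward(response)


def group_relative_advantages(rewards):
    """Normalize each reward against its group: (r - mean) / std."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        # Every sample scored the same, so none is preferred over another.
        return [0.0] * len(rewards)
    return [(r - mean) / std for r in rewards]


if __name__ == "__main__":
    # Four hypothetical samples for the prompt "What is 2 + 2?"
    samples = [
        "<think>2 + 2 = 4</think>\nAnswer: 4",  # correct and well formatted
        "Answer: 4",                            # correct, no reasoning shown
        "<think>a guess</think>\nAnswer: 5",    # well formatted, wrong
        "5",                                    # neither
    ]
    rewards = [total_reward(s, "4") for s in samples]
    print(rewards)
    print(group_relative_advantages(rewards))
```

Because the advantages are computed relative to the group, no separate learned value model is needed to judge whether a given reward is good or bad for that prompt.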

Other models: DeepSeek researchers also released seven related models.

- DeepSeek-R1-Zero is similar to DeepSeek-R1, but fine-tuned entirely using reinforcement learning. The researchers note that DeepSeek-R1-Zero was able to develop problem-solving strategies simply by being given incentives to do so. However, it was more likely to mix languages and produce unreadable outputs.

- DeepSeek also released six dense models (with parameter counts of 1.5 billion, 7 billion, 8 billion, 14 billion, 32 billion, and 70 billion), four of them based on versions of Qwen, and two based on versions of Llama.

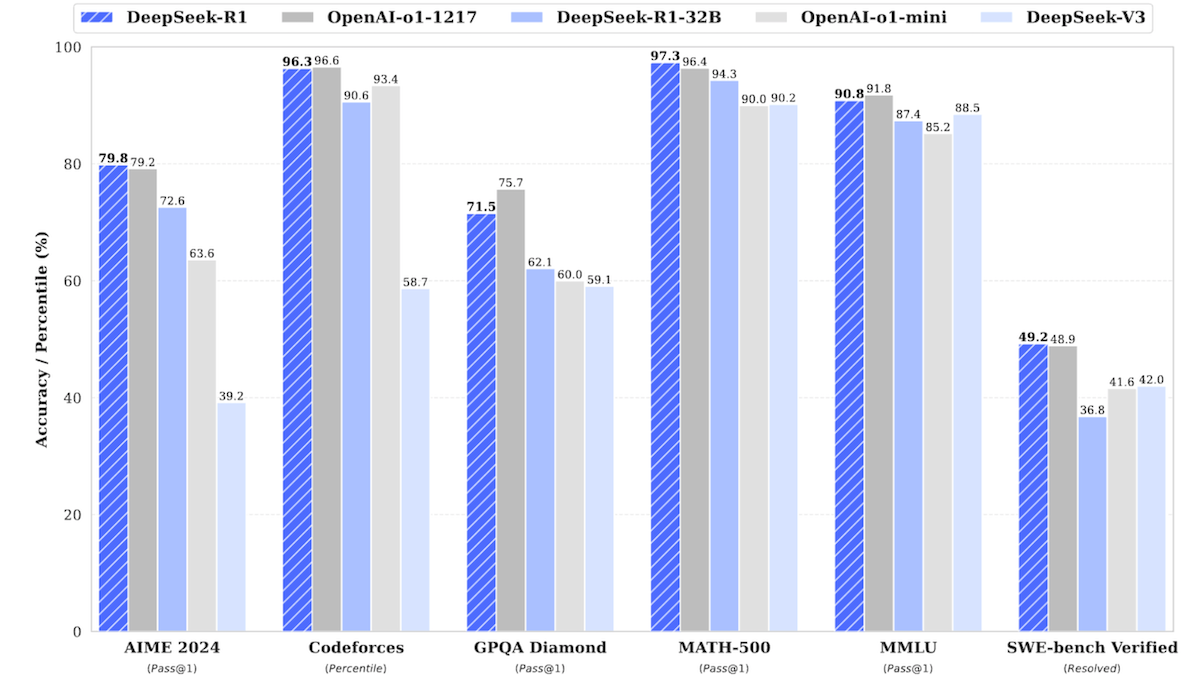

Results: In DeepSeek’s tests, DeepSeek-R1 went toe-to-toe with o1, outperforming that model on 5 of the 11 benchmarks tested. Some of the other new models showed competitive performance, too.

- DeepSeek-R1 topped o1 on AIME 2024, MATH-500, and SWE-Bench Verified, while turning in competitive performance on Codeforces, GPQA Diamond, and MMLU. On LiveCodeBench, which includes coding problems that are frequently updated, it solved 65.9 percent of problems correctly, while o1 solved 63.4 percent correctly.

- It also outperformed two top models that don’t implement chains of thought without explicit prompting. It bested Anthropic Claude 3.5 Sonnet on 19 of 21 benchmarks and OpenAI GPT-4o on 20 of 21 benchmarks.

- In DeepSeek’s tests, DeepSeek-R1-Distill-Qwen-32B outperforms OpenAI-o1-mini across all benchmarks tested including AIME 2024 and GPQA Diamond, while DeepSeek-R1-Distill-Llama-70B beats o1-mini on all benchmarks tested except Codeforces.

Why it matters: Late last year, OpenAI’s o1 kicked off a trend toward so-called reasoning models that implement a CoT without explicit prompting. But o1 and o3, its not-yet-widely-available successor, hide their reasoning steps. In contrast, DeepSeek-R1 bares all, allowing users to see the steps the model took to arrive at a particular answer. DeepSeek’s own experiments with distillation show how powerful such models can be as teachers to train smaller student models. Moreover, they appear to pass along some of the benefits of their reasoning skills, making their students more accurate.

We’re thinking: DeepSeek is rapidly emerging as a strong builder of open models. Not only are these models great performers, but their license permits use of their outputs for distillation, potentially pushing forward the state of the art for language models (and multimodal models) of all sizes.

Humanoid Robot Price Break

Chinese robot makers Unitree and EngineAI showed off relatively low-priced humanoid robots that could bring advanced robotics closer to everyday applications.

What’s new: At the annual Consumer Electronics Show (CES) in Las Vegas, Unitree showed its G1 ($16,000 with three-finger hands, $21,000 with five-finger, articulated hands), which climbed stairs and navigated around obstacles. Elsewhere on the show floor, EngineAI’s PM01 ($13,700 through March 2025 including articulated hands) and SE01 (price not yet disclosed) marched among attendees with notably naturalistic gaits.

How it works: Relatively small and lightweight, these units are designed for household and small-business uses. They’re designed for general-purpose tasks and to maintain stability and balance while walking on varied terrain.

- Unitree: A downsized version of Unitree’s 6-foot H1, which debuted in 2023, the G1 stands at 4 feet, 3 inches and weighs 77 pounds. It walks at speeds up to 4.4 miles per hour and carries up to 5 pounds, and demo videos show it performing tasks that require manual dexterity such as cracking eggs. It was trained via reinforcement learning to avoid obstacles, climb stairs, and jump. A rechargeable, swappable battery ($750) lasts two hours. Unitree offers four models that are programmable (in Python, C++, or ROS) and outfitted with Nvidia Jetson Orin AI accelerators ($40,000 to $68,000). All models can be directed with a radio controller.

- EngineAI: The PM01 is slightly larger and heavier than the G1 at 4 feet, 5 inches and 88 pounds. The SE01 is 5 feet, 7 inches and 121 pounds. Both units travel at 4.4 miles per hour and include an Nvidia Jetson Orin AI accelerator. They were trained via reinforcement learning to navigate dynamic environments and adjust to specific requirements. Pretrained AI models enhance their ability to recognize gestures and interact through voice commands. They include built-in obstacle avoidance and path-planning capabilities to operate in cluttered or unpredictable spaces. The robot can be controlled using voice commands or a touchscreen embedded in its chest. Rechargeable, swappable batteries provide two hours of performance per charge.

Behind the news: In contrast to the more-affordable humanoid robots coming out of China, U.S. companies like Boston Dynamics, Figure AI, and Tesla tend to cater to industrial customers. Tesla plans to produce several thousand of its Optimus ($20,000 to $30,000) humanoids in 2025, ramping to as many as 100,000 in 2026. Figure AI has demonstrated its Figure 02 ($59,000) in BMW manufacturing plants, showing a 400 percent speed improvement in some tasks. At CES, Nvidia unveiled its GR00T Blueprint, which includes vision-language models and synthetic data for training humanoid robots, and said its Jetson Thor computer for humanoids would be available early 2025.

Why it matters: China’s push into humanoid robotics reflects its broader national ambitions. Its strength in hardware has allowed it to establish a dominant position in drones, and humanoid robots represent a new front for competition. China’s government aims to achieve mass production of humanoid robots by 2025 and establish global leadership by 2027, partly to address projected labor shortages of 30 million workers in manufacturing alone. Lower price points for robots that can perform arbitrary tasks independently could be valuable in elder care and logistics, offering tools for repetitive or physically demanding tasks.

We’re thinking: Although humanoid robots generate a lot of excitement, they’re still in an early stage of development, and businesses are still working to identify and prove concrete use cases. For many industrial applications, wheeled robots — which are less expensive, more stable, and better able to carry heavy loads — will remain a sensible choice. But the prospect of machines that look like us and fit easily into environments built for us is compelling.

Texas Moves to Regulate AI

Lawmakers in the U.S. state of Texas are considering stringent AI regulation.

What’s new: The Texas legislature is considering the proposed Texas Responsible AI Governance Act (TRAIGA). The bill would prohibit a short list of harmful or invasive uses of AI, such as output intended to manipulate users. It would impose strict oversight on AI systems that contribute to decisions in key areas like health care.

How it works: Republican House Representative Giovanni Capriglione introduced TRAIGA, also known as HB 1709, to the state legislature at the end of 2024. If it’s passed and signed, the law would go into effect in September 2025.

- The proposed law would apply to any company that develops, distributes, or deploys an AI system while doing business in Texas, regardless of where the company is headquartered. It makes no distinction between large and small models or research and commercial uses. However, it includes a modest carve-out for independent small businesses that are based in the state.

- The law controls “high-risk” AI systems that bear on consequential decisions in areas that include education, employment, financial services, transportation, housing, health care, and voting. The following uses of AI would be banned: manipulating, deceiving, or coercing users; inferring race or gender from biometric data; computing a “social score or similar categorical estimation or valuation of a person or group;” and generating sexually explicit deepfakes. The law is especially broad with respect to deepfakes: It outlaws any system that is “capable of producing unlawful visual material.”

- Companies would have to notify users whenever AI is used. They would also have to safeguard against algorithmic discrimination, maintain and share detailed records of training data and accuracy metrics, assess impacts, and withdraw any system that violates the law until it can achieve compliance.

- The Texas attorney general would investigate companies that build or use AI, file civil lawsuits, and impose penalties up to $200,000 per violation, with additional fines for ongoing noncompliance of $40,000 per day.

- The bill would establish a Texas AI Council that reports to the governor, whose members would be appointed by the governor, lieutenant governor, and state legislative leaders. The council would monitor AI companies, develop non-binding ethical guidelines for them, and recommend new laws and regulations.

Sandbox: A “sandbox” provision would allow registered AI developers to test and refine AI systems temporarily with fewer restrictions. Developers who registered AI projects with the Texas AI Council would gain temporary immunity, even if their systems did not fully comply with the law. However, this exemption would come with conditions: Developers must submit detailed reports on their projects’ purposes, risks, and mitigation plans. The sandbox status would be in effect for 36 months (with possible extensions), and organizations would have to bring their systems into compliance or decommission them once the period ends. The Texas AI Council could revoke sandbox protections if it determined that a project posed a risk of public harm or failed to meet reporting obligations.

Behind the news: Other U.S. states, too, are considering or have already passed laws that regulate AI:

- California SB 1047 aimed to regulate both open and closed models above a specific size. The state’s governor vetoed the proposed bill due to concerns about regulatory gaps and overreach.

- Colorado signed its AI Act into law in 2024. Like the Texas proposal, it mandates civil penalties for algorithmic discrimination in “consequential use of AI.” However, it doesn’t create a government body to regulate AI or outlaw specific uses.

- New York state is considering a bill similar to California SB 1047 but narrower in scope. New York’s proposed bill would focus on catastrophic harms potentially caused by AI models that require more than 10^26 FLOPs or cost $100 million or more to train. It would mandate third-party audits and protection for whistleblowers.

Why it matters: AI is not specifically regulated at the national level in the United States. This leaves individual states free to formulate their own laws. However, state-by-state regulation risks a patchwork of laws in which a system — or a particular feature — may be legal in some states but not others. Moreover, given the distributed nature of AI development and deployment, a law that governs AI in an individual state could affect developers and users worldwide.

We’re thinking: The proposed bill has its positive aspects, particularly insofar as it seeks to restrict harmful applications rather than the underlying technology. However, it imposes burdensome requirements for compliance, suffers from overly broad language, fails to adequately protect open source, and doesn’t distinguish between research and commercial use. Beyond that, state-by-state regulation of AI is not workable. Instead, AI demands international conventions and standards.

Generated Chip Designs Work in Mysterious Ways

Designing integrated circuits typically requires years of human expertise. Recent work set AI to the task with surprising results.

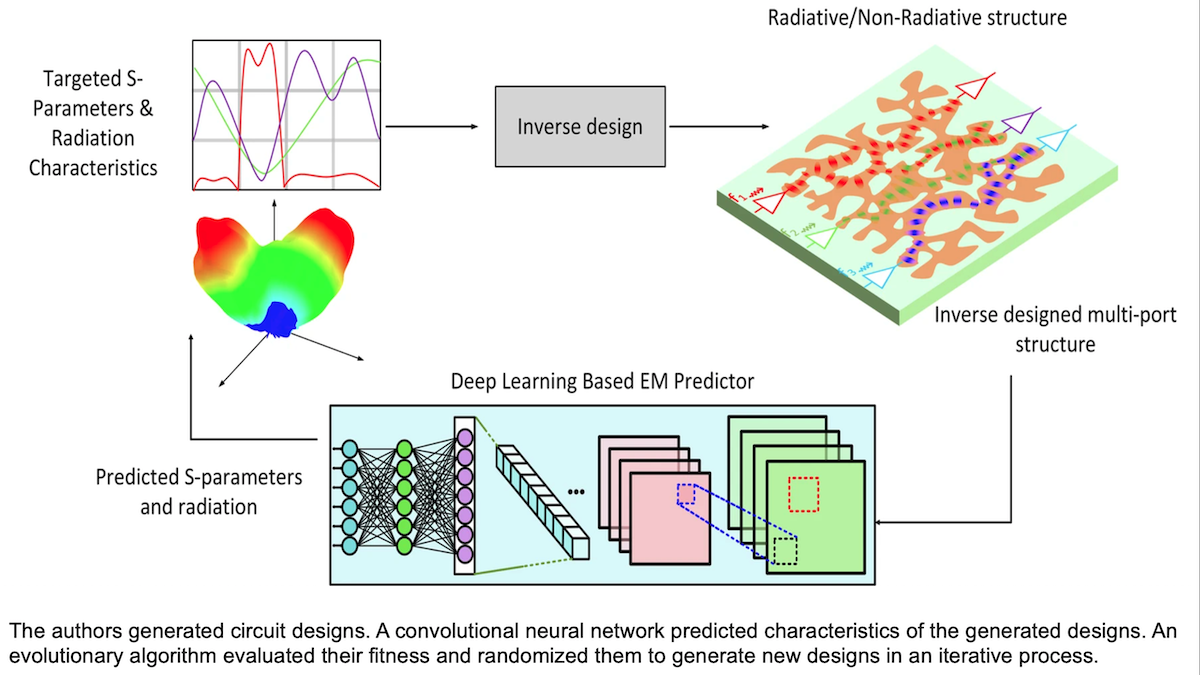

What’s new: Emir Ali Karahan, Zheng Liu, Aggraj Gupta, and colleagues at Princeton and Indian Institute of Technology Madras used deep learning and an evolutionary algorithm, which generates variations and tests their fitness, to generate designs for antennas, filters, power splitters, resonators, and other chips with applications in wireless communications and elsewhere. They fabricated a handful of the generated designs and found they worked, but in mysterious ways.

How it works: The authors trained convolutional neural networks (CNNs) to predict a circuit’s electromagnetic scattering and radiative properties from a binary image of its design (in which each pixel represents whether the corresponding portion of a semiconductor surface is raised or lowered). Guided by these predictions, they generated new binary circuit images using an evolutionary algorithm.

- The authors produced a training set of images and associated properties using Matlab EM Toolbox. The images depicted designs for chip sizes between 200x200 micrometers (which they represented as 10x10 pixels) and 500x500 micrometers (represented as 25x25 pixels).

- They trained a separate CNN on designs of each size.

- They generated 4,000 designs at random and predicted their properties using the appropriate CNN.

- Given the properties, the authors used a tournament method to select the designs whose properties were closest to the desired values. They randomly modified the selected designs to produce a new pool of 4,000 designs, predicted their properties, and repeated the tournament. The number of iterations isn’t specified.
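
The loop described in these steps can be sketched as follows. This is a toy under loud assumptions, not the authors' code: `surrogate_predict` is a hypothetical stand-in for the trained CNN (it simply returns the fraction of raised pixels), and the tournament size, mutation rate, number of parents, and generation count are illustrative values. The source states only the pool size of 4,000.

```python
import random


def random_design(size, rng):
    """Random binary grid; 1 = raised pixel, 0 = lowered pixel."""
    return [[rng.randint(0, 1) for _ in range(size)] for _ in range(size)]


def surrogate_predict(design):
    """Stand-in for the trained CNN (assumption): fraction of raised pixels."""
    size = len(design)
    return sum(sum(row) for row in design) / (size * size)


def fitness(design, target):
    """Higher is better: negative distance from the desired property value."""
    return -abs(surrogate_predict(design) - target)


def tournament_select(pool, target, rng, rounds, contestants=4):
    """Run `rounds` tournaments; each picks the fittest of a random subset."""
    winners = []
    for _ in range(rounds):
        group = rng.sample(pool, contestants)
        winners.append(max(group, key=lambda d: fitness(d, target)))
    return winners


def mutate(design, rng, flip_prob=0.02):
    """Return a copy of the design with each pixel flipped with flip_prob."""
    return [[1 - px if rng.random() < flip_prob else px for px in row]
            for row in design]


def evolve(size=10, pool_size=4000, generations=20, target=0.3, seed=0):
    """Generate a pool, then repeat: predict, select, mutate into a new pool."""
    rng = random.Random(seed)
    pool = [random_design(size, rng) for _ in range(pool_size)]
    for _ in range(generations):
        parents = tournament_select(pool, target, rng, rounds=pool_size // 10)
        pool = [mutate(rng.choice(parents), rng) for _ in range(pool_size)]
    return max(pool, key=lambda d: fitness(d, target))
```

The key design point is that every fitness evaluation calls the fast predictor rather than a full electromagnetic simulation, so scoring thousands of candidates per generation stays cheap.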

Results: The authors fabricated some of the designs to test their real-world properties. The chips performed much as the CNNs had predicted. The authors found the designs themselves baffling; they “delivered stunning high-performances devices that ran counter to the usual rules of thumb and human intuition,” co-author Uday Khankhoje told the tech news site Tech Xplore. Moreover, the design process was faster than previous approaches. The authors’ method designed a 300x300 micrometer chip in approximately 6 minutes, a task that would have taken 21 days using traditional methods.

Behind the news: Rather than wireless chips, Google has used AI to accelerate design of the Tensor Processing Units that process neural networks in its data centers. AlphaChip used reinforcement learning to learn how to position chip components such as SRAM and logic gates on silicon.

Why it matters: Designing circuits usually requires rules of thumb, templates, and hundreds of hours of simulations and experiments to determine the best design. AI can cut the required expertise and time and possibly find effective designs that wouldn’t occur to human designers.

We’re thinking: AI-generated circuit designs could help circuit designers to break out of set ways of thinking and discover new design principles.