Dear friends,

Last month, a drone from Skyfire AI was credited with saving a police officer’s life after a dramatic 2 a.m. traffic stop. Many statistics show that AI impacts billions of lives, but sometimes a story still hits me emotionally. Let me share what happened.

Skyfire AI, an AI Fund portfolio company led by CEO Don Mathis, operates a public safety program in which drones function as first responders to 911 calls. Particularly when a police department is personnel-constrained, drones can save officers’ time while enhancing their situational awareness. For example, many burglar alarms are false alarms, maybe set off by moisture or an animal. Rather than sending a patrol officer to drive over to discover this, a drone can get there faster and determine if an officer is required at all. If the alarm is real, the drone can help officers understand the situation, the locations of any perpetrators, and how best to respond.

In January, a Skyfire AI drone was returning to base after responding to a false alarm when the police dispatcher asked us to reroute it to help locate a patrol officer. The officer had radioed a few minutes earlier that he had pulled over a suspicious vehicle and had not been heard from since. The officer had stopped where two major highways intersect in a complex cloverleaf, and dispatch was unsure exactly where they were located.

From the air, the drone rapidly located the officer and the driver of the vehicle he had pulled over, who it turned out had escaped from a local detention facility. Neither would have been visible from the road — they were fighting in a drainage ditch below the highway. Because of the complexity of the cloverleaf’s geometry, the watch officer (who coordinates police activities for the shift) later estimated it would have taken 5-7 minutes for an officer in a patrol car to find them.

From the aerial footage, it appeared that the officer still had his radio, but was losing the fight and unable to reach it to call for help. Further, it looked like the assailant might gain control of his service weapon and use it against him. This was a dire and dangerous situation.

Fortunately, because the drone had pinpointed the location of the officer and his assailant, dispatch was able to direct additional units to assist. The first arrived not in 5-7 minutes but in 45 seconds. Four more units arrived within minutes.

The officers were able to take control of the situation and apprehend the driver, resulting in an arrest and, more important, a safe outcome for the officer. Subsequently, the watch officer said we’d probably saved the officer’s life.

Democratic nations still have a lot of work to do on drone technology, and we must build this technology with guardrails to make sure we enhance civil liberties and human rights. But I am encouraged by the progress we’re making. In the aftermath of Hurricane Helene last year, Skyfire AI’s drones supported search-and-rescue operations under the direction of the North Carolina Office of Emergency Management, responding to specific requests to help locate missing persons and direct rescue assets (e.g., helicopters and boats) to their location. The drones were credited with saving 13 lives.

It’s not every day that AI directly saves someone’s life. But as our technology advances, I think there will be more and more stories like these.

Keep building!

Andrew

A MESSAGE FROM DEEPLEARNING.AI

Learn to systematically evaluate, improve, and iterate on AI agents using structured assessments. In our short course “Evaluating AI Agents,” you’ll learn to add observability, choose the right evaluation methods, and run structured experiments to improve AI agent performance. Enroll for free

News

Grok 3 Scales Up

xAI’s new model family suggests that devoting more computation to training remains a viable path to building more capable AI.

What’s new: Elon Musk’s xAI published a video demonstration of Grok 3, a family of four large language models that includes reasoning and non-reasoning versions as well as full- and reduced-size models. Grok 3 is available to subscribers to X’s Premium+ ($40 monthly for users in the United States; the price varies by country) and will be part of a new subscription service called SuperGrok. The models currently take text input and produce text output, but the company plans to integrate audio input and output in coming weeks.

How it works: xAI has not yet disclosed details about Grok 3’s architecture, parameter counts, training datasets, or training methods. Here’s what we know so far:

- Grok 3’s processing budget for pretraining was at least 10 times that of its predecessor Grok 2. The processing infrastructure included 200,000 Nvidia H100 GPUs, double the number Meta used to train Llama 4.

- The team further trained Grok 3 to generate a chain of thought via reinforcement learning mainly on math and coding problems. The models show some reasoning tokens but obscure others, a strategy to stymie efforts to distill Grok 3’s knowledge.

- Similar to other reasoning models that generate a chain of thought, Grok 3 can spend more processing power at inference to get better results.

- Three modes enable Grok 3 to spend more processing power: (i) Think, which generates in-depth lines of reasoning; (ii) Big Brain, which is like Think, but with additional computation; and (iii) DeepSearch, an agent that can search the web and compile detailed reports, similar to Google’s Deep Research and OpenAI’s similarly named service.

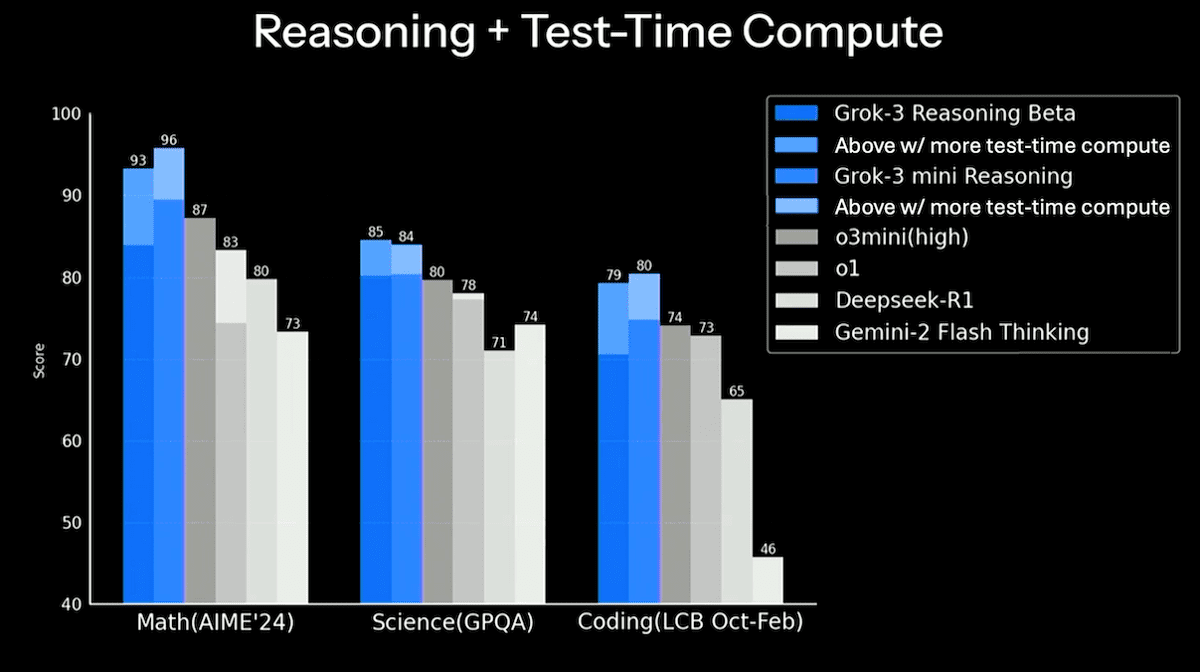

Results: The Grok 3 family outperformed leading models in math (AIME 2024), science (GPQA), and coding (LiveCodeBench).

- Non-reasoning models: Grok 3 and Grok 3 mini outperformed Google Gemini 2 Pro, DeepSeek-V3, Anthropic Claude 3.5 Sonnet, and OpenAI GPT-4o on all three datasets. On AIME 2024, Grok 3 achieved 52 percent accuracy, Grok 3 mini achieved 40 percent accuracy, and the next best model, DeepSeek-V3, achieved 39 percent accuracy.

- Reasoning models: Grok 3 Reasoning Beta and Grok 3 mini Reasoning (set to use a large but unspecified amount of computation at inference) outperformed OpenAI o3-mini (set to high “effort”), OpenAI o1, DeepSeek-R1, and Google Gemini 2 Flash Thinking. For instance, on GPQA, Grok 3 Reasoning Beta achieved 85 percent accuracy, Grok 3 mini Reasoning achieved 84 percent accuracy, and the next best model, o3-mini, achieved 80 percent accuracy.
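To make the reported margins concrete, here is a small sketch that computes each Grok 3 model's lead over the next best model, in percentage points. It uses only the accuracy figures quoted above. The helper name `margins_over_best_rival` is ours, introduced for illustration.

```python
# Accuracy figures (percent) exactly as quoted in the results above.
reported = {
    "AIME 2024 (non-reasoning)": {
        "Grok 3": 52,
        "Grok 3 mini": 40,
        "DeepSeek-V3": 39,
    },
    "GPQA (reasoning)": {
        "Grok 3 Reasoning Beta": 85,
        "Grok 3 mini Reasoning": 84,
        "o3-mini (high effort)": 80,
    },
}


def margins_over_best_rival(scores, rival):
    """Return each non-rival model's lead over the rival, in percentage points."""
    return {
        name: accuracy - scores[rival]
        for name, accuracy in scores.items()
        if name != rival
    }


aime = margins_over_best_rival(reported["AIME 2024 (non-reasoning)"], "DeepSeek-V3")
gpqa = margins_over_best_rival(reported["GPQA (reasoning)"], "o3-mini (high effort)")

for benchmark, leads in (("AIME 2024", aime), ("GPQA", gpqa)):
    for model, lead in leads.items():
        print(f"{benchmark}: {model} leads the next best model by {lead} points")
```

On these figures, the full-size model's lead is much wider on AIME 2024 than the mini model's, while the two reasoning models sit close together on GPQA.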

Behind the news: Reasoning models are pushing benchmark scores steadily upward, especially in challenging areas like math and coding. Grok 3, with its ability to reason over prompts, search the web, and compile detailed reports, arrives hot on the heels of OpenAI’s Deep Research and o3-mini and Google’s Gemini 2 Flash Thinking, which offer similar capabilities.

Why it matters: Grok 3 is a substantial achievement, especially for a company that’s less than two years old, and it pushes the state of the art forward by ample margins. But its significance may go further. Research into scaling laws indicates that model performance scales with the amount of computation devoted to training. While xAI has not disclosed the amount of processing used to train Grok 3, the number of GPUs in its cluster suggests that the company applied a massive amount.

We’re thinking: Grok 3’s performance makes a case for both massive compute in pretraining and additional compute at inference. Running in its usual mode, Grok 3 mini Reasoning outperformed OpenAI o3-mini set at high effort on AIME 2024, GPQA, and LiveCodeBench. With an unspecified amount of additional compute, its performance on those benchmarks shot further upward by a substantial margin.

Mobile Apps to Order

Replit, an AI-driven integrated development environment, updated its mobile app to generate further mobile apps to order.

What’s new: Replit’s app, which previously generated simple Python programs, now generates iOS and Android apps and app templates that can be shared publicly. Mobile and web access to Replit’s in-house code generation models is free for up to three public applications. A Core plan ($25 per month, $180 per year) buys unlimited access and applications, code generation by Claude 3.5 Sonnet and OpenAI GPT-4o, and monthly credits for generated checkpoints.

How it works: The app and web tools are powered by Replit Agent, an AI coding assistant designed to help users write, debug, and deploy applications with little manual setup. Replit Agent is based on Claude 3.5 Sonnet and calls other specialized models. The agent framework is built on LangChain’s LangGraph. It breaks down development tasks into steps to be handled by specialized sub-agents.

- The mobile app includes three views in development or “create” mode, enabling users to build applications with natural language instructions in a chatbot interface, ask Replit’s chatbot questions, or preview applications in a built-in browser.

- A quick start panel also lets users import projects from GitHub, work using built-in templates, or build apps in specific coding languages.

- The system can plan new projects, create application architectures, write code, and deploy apps. Users can deploy completed apps to Replit’s infrastructure on Google Cloud without needing to configure hosting, databases, or runtime environments manually.
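The idea of breaking a development task into steps handled by specialized sub-agents can be sketched in plain Python. This is not Replit's implementation and does not use LangGraph's API. Every name here (`Step`, `plan`, `SUB_AGENTS`, `run_agent`) is hypothetical, and the sub-agents are stubs standing in for model calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    kind: str         # which sub-agent should handle this step
    description: str  # what the step should accomplish


def plan(request: str) -> List[Step]:
    """Hypothetical planner. A real agent would ask a model to produce this list."""
    return [
        Step("architecture", f"Outline components for: {request}"),
        Step("code", f"Write code for: {request}"),
        Step("deploy", f"Deploy: {request}"),
    ]


# Hypothetical sub-agents, stubbed as functions that just label their work.
def architect(step: Step) -> str:
    return f"[architect] {step.description}"


def coder(step: Step) -> str:
    return f"[coder] {step.description}"


def deployer(step: Step) -> str:
    return f"[deployer] {step.description}"


# Routing table from step kind to the sub-agent that handles it.
SUB_AGENTS: Dict[str, Callable[[Step], str]] = {
    "architecture": architect,
    "code": coder,
    "deploy": deployer,
}


def run_agent(request: str) -> List[str]:
    """Plan the request, then dispatch each step to its sub-agent in order."""
    results = []
    for step in plan(request):
        handler = SUB_AGENTS[step.kind]
        results.append(handler(step))
    return results


log = run_agent("a to-do list app")
for line in log:
    print(line)
```

Keeping the routing in a lookup table means a new kind of step only needs a new entry, which is one reason graph- or table-driven agent frameworks are popular for this sort of decomposition.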

Behind the news: The incorporation of Replit Agent into Replit’s mobile app is a significant step for AI-driven IDEs. Competitors like Aider and Windsurf don’t offer mobile apps, and mobile apps from Cursor and GitHub provide chat but not mobile app development. Moreover, few coding agents can deploy apps to the cloud from either desktop or mobile.

Why it matters: Replit’s new mobile app produces working apps in minutes (although some early users have reported encountering bugs), and automatic deployment of apps to the cloud is a huge help. Yet it raises the stakes for developers to learn their craft and maintain a collaborative relationship with AI. While Replit’s web-based environment exposes the code, encouraging users to improve their skills, the mobile app hides much of its work below the surface. It brings AI closer to handling full software development cycles and adds urgency to questions about how to address the balance between automation and hands-on coding.

We’re thinking: AI continues to boost developer productivity and reduce the cost of software development, and the progress of Bolt, Cursor, Replit, Vercel, Windsurf, and others is exhilarating. We look forward to a day when, measured against the 2024 standard, every software engineer is a 10x engineer!

Musk Complicates OpenAI’s Plan

Elon Musk and a group of investors made an unsolicited bid to buy the assets of the nonprofit that controls OpenAI, complicating the AI powerhouse’s future plans.

What’s new: Musk submitted a $97.4 billion offer to acquire the assets of the nonprofit OpenAI Inc. CEO Sam Altman and the company’s board of directors swiftly rejected it, and Altman publicly mocked Musk by offering to buy Twitter for $9.74 billion (one-tenth of Musk’s bid and less than one-quarter the price he paid for the social network). OpenAI’s board reaffirmed its control over the company’s direction, signaling that it does not intend to cede governance to outside investors.

How it works: OpenAI was founded as a nonprofit in 2015, but since 2019 it has operated under an unusual structure in which the nonprofit board controls the for-profit entity that develops and commercializes AI models. This setup allows the board to maintain the company’s original mission — developing AI for the benefit of humanity — rather than solely maximizing shareholder value. However, driven by the need for massive investments in infrastructure and talent, OpenAI is considering a new for-profit structure that would allow external investors to own more of the company. The high offer by Musk — who, as CEO of xAI, competes with OpenAI — could interfere with that plan.

- The board has a legal duty to consider both OpenAI’s original mission and credible offers for its assets. While it rejected Musk’s bid, it must ensure that any restructuring aligns with its charter and does not unfairly disregard potential buyers.

- According to the current plan, the new for-profit entity would purchase the nonprofit’s assets. Musk’s bid suggests that the nonprofit’s assets alone are worth at least $97.4 billion, more than 60 percent of the entire organization’s valuation in late 2024. That could dramatically boost the cost of the planned restructuring.

- Some experts believe that Musk’s offer is less about acquiring OpenAI than driving up its valuation, which could dilute the equity of new investors in the new for-profit entity. By introducing a competitive bid, he may be attempting to make OpenAI’s restructuring more expensive or complicated.

- Musk has indicated he is willing to negotiate, effectively turning OpenAI’s transition into a bidding war. Altman stated that this could be a deliberate effort to “slow down” OpenAI and that he wished Musk would compete by building a better product instead.
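The ratios quoted in this story are easy to check. The sketch below uses the two dollar figures from the text plus two figures that are assumptions not stated above: the roughly $157 billion valuation widely reported for OpenAI's October 2024 funding round, and the roughly $44 billion widely reported as the price Musk paid for Twitter.

```python
# Figures from the text, in billions of dollars.
bid_for_openai = 97.4
altman_counteroffer = 9.74

# Assumptions (not stated in the text): widely reported figures.
openai_valuation_late_2024 = 157.0  # October 2024 funding round
twitter_purchase_price = 44.0       # 2022 acquisition

counter_vs_bid = altman_counteroffer / bid_for_openai
counter_vs_twitter = altman_counteroffer / twitter_purchase_price
bid_vs_valuation = bid_for_openai / openai_valuation_late_2024

print(f"Counteroffer as a share of Musk's bid: {counter_vs_bid:.0%}")
print(f"Counteroffer as a share of Twitter's price: {counter_vs_twitter:.0%}")
print(f"Musk's bid as a share of OpenAI's valuation: {bid_vs_valuation:.0%}")
```

Under those assumptions, the counteroffer is one-tenth of the bid and about 22 percent of Twitter's purchase price, and the bid comes to about 62 percent of the valuation, consistent with "less than one-quarter" and "more than 60 percent."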

Behind the news: Musk was one of OpenAI’s earliest investors, but he departed in 2018 after disagreements over direction and control of the organization. His bid follows a lawsuit against OpenAI, in which he claims the company abandoned its nonprofit mission in favor of profit. OpenAI said that Musk’s bid contradicts his legal claims and suggests that the lawsuit should be dismissed. Since then, Musk has stated that he would drop the lawsuit if OpenAI remains a nonprofit.

Why it matters: OpenAI is a premier AI company, and its activities affect virtually everyone in the field by supplying tools, technology, or inspiration. Musk’s xAI is a direct competitor, and his bid, whether it’s sincere or tactical, unsettles OpenAI’s plans. Even if OpenAI moves forward as planned, Musk’s actions likely will have made the process more expensive and may invite closer scrutiny of the company’s actions.

We’re thinking: There’s ample precedent for nonprofits spinning out for-profit entities. For example, nonprofit universities typically create intellectual property that forms the basis of for-profit startups. The university might retain a modest stake, and this is viewed as consistent with its nonprofit mission. This isn’t a perfect analogy, since OpenAI does little besides operating its AI business, but we hope the company finds a path forward that allows it to serve users, rewards its employees for their contributions, and honors its nonprofit charter.

World Powers Move to Lighten AI Regulation

The latest international AI summit exposed deep divisions between major world powers regarding AI regulations.

What’s new: While previous summits emphasized existential risks, the AI Action Summit in Paris marked a turning point. France and the European Union shifted away from strict regulatory measures and toward investment to compete with the United States and China. However, global consensus remained elusive: the U.S. and the United Kingdom refused to sign key agreements on global governance, military AI, and algorithmic bias. The U.S. in particular pushed back against global AI regulation, arguing that excessive restrictions could hinder economic growth and that international policies should focus on more immediate concerns.

How it works: Participating countries considered three policy statements that address AI’s impact on society, labor, and security. The first statement calls on each country to enact AI policies that would support economic development, environmental responsibility, and equitable access to technology. The second encourages safeguards to ensure that companies and nations distribute AI productivity gains fairly, protect workers’ rights, and prevent bias in hiring and management systems. The third advocates for restrictions on fully autonomous military systems and affirms the need for human oversight in warfare.

- The U.S. and U.K. declined to sign any of the three statements issued at the AI Action Summit. A U.K. government spokesperson said that the declaration lacked practical clarity on AI governance and did not sufficiently address national security concerns. Meanwhile, U.S. Vice President JD Vance criticized Europe’s “excessive regulation” of AI and warned against cooperation with China.

- Only 26 countries out of 60 agreed to the restrictions on military AI. They included Bulgaria, Chile, Greece, Italy, Malta, and Portugal among others.

- France pledged roughly $114 billion to AI research, startups, and infrastructure, while the EU announced a roughly $210 billion initiative aimed at strengthening Europe’s AI capabilities and technological self-sufficiency. France allocated 1 gigawatt of nuclear power to AI development, with 250 megawatts expected to come online by 2027.

- Despite the tight regulations proposed at past summits and passage of the relatively restrictive AI Act last year, the EU took a sharp turn toward reducing regulatory barriers to AI development. Officials emphasized the importance of reducing bureaucratic barriers to adoption of AI, noting that excessive regulation would slow Europe’s progress in building competitive AI systems and supporting innovative applications.

- Shortly after the summit, the European Commission withdrew a proposed law (the so-called “liability directive”) that would have made it easier to sue companies for vaguely defined AI-related harms. The decision followed criticism by industry leaders and politicians, including Vance, who argued that excessive regulation could hamper investment in AI and hinder Europe’s ability to compete with the U.S. and China in AI development while failing to make people safer.

Behind the news: The Paris summit follows previous gatherings of world leaders to discuss AI, including the initial AI Safety Summit at Bletchley Park and the AI Seoul Summit and AI Global Forum. At these summits, governments and companies agreed broadly to address AI risks but avoided binding regulations. Nonetheless, divisions over AI governance have widened in the wake of rising geopolitical competition and the emergence of high-performance open-weights models like DeepSeek-R1.

Why it matters: The Paris summit marks a major shift in global AI policy. The EU, once an ardent proponent of AI regulation, backed away from its strictest proposals. At the same time, doomsayers have lost influence, and officials are turning their attention to immediate concerns like economic growth, security, misuse, and bias. These moves make way for AI to do great good in the world, even as they contribute to uncertainty about how AI will be governed.

We’re thinking: Governments are shifting their focus away from unrealistic risks and toward practical strategies for guiding AI development. We look forward to clear policies that encourage innovation while addressing real-world challenges.