Dear friends,

Around the world, students are graduating. If you’re one of them, or if someone close to you is graduating, congratulations!!!

My family swapped pictures on WhatsApp recently and came across this one, which was taken when I graduated from Carnegie Mellon (I’m standing in the middle). I was privileged to have already worked on a few AI projects thanks to my mentors in college, including Michael Kearns, Andrew McCallum, Andrew Moore and Tom Mitchell. But now, looking back, I reflect on how clueless I was and how little I knew about AI, business, people, and the world in general.

To this day, I don’t feel particularly clued in. Every year or so, I look back and marvel at how clueless I was a year ago, and I’m pretty sure I’ll feel the same way a year from now. This helps me to act with humility and avoid expressing unwarranted certainty.

If you’re graduating:

- Congratulations on all you’ve learned!

- I hope that you’ll have the pleasure of learning so much in each coming year that you, too, will marvel at how little you used to know.

- I also hope you’ll treasure the people around you. Of the people in the picture, I still Zoom regularly with my parents (left) and brother (right), but my two grandparents who attended my commencement ceremony are no longer with us.

If you’ve already graduated, I hope you’ll take joy in the success of those who are coming up behind you.

Love to you all and keep learning,

Andrew

News

Borderline AI

U.S. immigration officials expect over 2 million migrants to reach the country’s southern border by the end of the year. They’re counting on face recognition to streamline processing of those who seek asylum.

What’s new: The U.S. Customs and Border Protection agency developed an app called CBP One that matches asylum seekers with existing applications, the Los Angeles Times reported.

How it works: Would-be immigrants who feel their lives are in danger in their home country, most of whom come from violence-wracked parts of Mexico and Central America, can apply for asylum status in the U.S. Some 70,000 who have applied remain in Mexico awaiting a decision. CBP One is designed to expedite acceptance or rejection when those people return to the border.

- Asylum seekers can submit a photo portrait to check the status of their application: open or closed.

- If their case remains open, they can use the app to arrange a Covid-19 screening, find an appropriate point of entry, and request permission to enter.

- The app has helped officials process more than 11,000 cases in recent weeks.

Yes, but: Privacy experts are concerned about data collection and surveillance of migrants who have little choice but to use the app. Confidentiality is also a worry, since hackers stole 180,000 images from a border patrol database in 2018.

Behind the news: Launched in October, the app initially was limited to cargo shippers, pleasure boaters, and non-immigrant travelers. In May, however, the number of migrants surged, and the agency received emergency approval to bypass privacy laws and use the app to process applications to enter the country.

Why it matters: Many migrants who arrive at the southern U.S. border are fleeing poverty, gang violence, political instability, and climate change-induced environmental crises. AI could help those in danger find refuge more quickly.

We’re thinking: Immigration is hugely beneficial to the U.S., and AI can help scale the process. But it’s crucial that the policies we scale are fair, transparent, and astute rather than biased or xenophobic.

3D Scene Synthesis for the Real World

Researchers have used neural networks to generate novel views of a 3D scene based on existing pictures plus the positions and angles of the cameras that took them. In practice, though, you may not know the precise camera positions and angles, since location sensors may be unavailable or miscalibrated. A new method synthesizes novel perspectives based on existing views alone.

What’s new: Chen-Hsuan Lin led researchers at Carnegie Mellon University, Massachusetts Institute of Technology, and University of Adelaide in developing the archly named Bundle-Adjusting Neural Radiance Fields (BARF), a technique that generates new 3D views from images of a scene without requiring further information.

Key insight: The earlier method called NeRF requires camera positions and angles to find values that feed a neural network. Those variables can be represented by a learnable vector, and backpropagation can update it as well as the network’s weights.

How it works: Like NeRF, BARF generates views of a scene by sampling points along rays that extend from the camera through each pixel. It uses a vanilla neural network to compute the color and transparency of each point based on the point’s position and the ray’s direction. To determine the color of a given pixel, it combines the color and transparency of all points along the associated ray. Unlike NeRF, BARF’s loss function is designed to learn camera positions and angles, and it uses a training schedule to learn camera viewpoints before pixel colors.
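The compositing step described above, combining the color and transparency of the points along a ray into one pixel color, can be sketched roughly as follows. This is a minimal illustration rather than the authors' code: the function name `composite_ray` is hypothetical, and it assumes each point's opacity (one minus its transparency) is given directly, whereas a real implementation would derive it from the network's output and the spacing between samples.

```python
def composite_ray(colors, opacities):
    """Blend the sampled points along one ray into a single pixel color.

    colors:    list of (r, g, b) tuples, ordered from nearest to farthest.
    opacities: list of values in [0, 1], one per point (assumed given here).
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked by nearer points
    for color, opacity in zip(colors, opacities):
        weight = transmittance * opacity  # this point's contribution
        for channel in range(3):
            pixel[channel] += weight * color[channel]
        transmittance *= 1.0 - opacity  # farther points are dimmed
    return tuple(pixel)


# A half-transparent red point in front of an opaque green point.
print(composite_ray([(1, 0, 0), (0, 1, 0)], [0.5, 1.0]))
```

Nearer points dim everything behind them, so a fully opaque point hides the rest of the ray.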

- As input, BARF takes images plus their viewpoint vectors. Given a novel viewpoint, it learns to minimize the difference between the predicted and ground-truth color of each pixel.

- Points along separate rays that are close to one another have similar coordinates. The similarity makes it difficult to distinguish details and object boundaries in such areas. To work around this issue, BARF (like NeRF) represents points as fixed position vectors such that a small change in a point’s location causes a large change in its position vector.

- This positional encoding helps the system reproduce scene details, but it inhibits learning of viewpoint vectors, since a large shift in the representation of nearby points causes the learned camera viewpoint to swing wildly without converging. To solve this problem, BARF zeroes out most of each position vector at the start of training and fills it in gradually as training progresses. Consequently, the network learns the correct camera perspective earlier in training and how to paint details in the scene later.
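The idea of zeroing out most of the position vector early and filling it in over time can be sketched as below. This is an illustrative sketch under stated assumptions, not the authors' implementation: the names `band_weight` and `encode`, the sine/cosine frequencies, and the smooth cosine ramp used to switch each frequency band on are all choices made for this example.

```python
import math


def band_weight(band, progress):
    """Weight in [0, 1] for one frequency band.

    progress runs from 0 (start of training) to the number of bands
    (all bands fully enabled). The cosine ramp is an assumed schedule.
    """
    t = min(max(progress - band, 0.0), 1.0)
    return (1.0 - math.cos(math.pi * t)) / 2.0


def encode(x, num_bands, progress):
    """Encode a scalar coordinate x as a position vector.

    The raw coordinate is always kept; each higher-frequency sine/cosine
    pair is scaled by a weight that grows as training progresses.
    """
    features = [x]
    for band in range(num_bands):
        weight = band_weight(band, progress)
        frequency = (2.0 ** band) * math.pi
        features.append(weight * math.sin(frequency * x))
        features.append(weight * math.cos(frequency * x))
    return features


# Early in training only the raw coordinate is non-zero;
# later, the low-frequency bands switch on before the high-frequency ones.
print(encode(0.3, num_bands=4, progress=0.0))
print(encode(0.3, num_bands=4, progress=1.5))
```

With the high-frequency entries masked, nearby points have similar encodings, which is what lets the camera viewpoint settle before fine details are learned.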

Results: The researchers compared BARF to NeRF, measuring their ability to generate a novel view based on several views of an everyday scene, where the viewpoints were unknown to BARF and known to NeRF. BARF achieved a competitive peak signal-to-noise ratio of 21.96, a measure of the difference between the generated and actual images (higher is better). NeRF achieved 23.25.
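For readers unfamiliar with the metric, peak signal-to-noise ratio can be computed along these lines. This is a generic sketch, not the researchers' evaluation code: the function name `psnr`, the flat lists of pixel values, and the assumption that values lie in the range 0 to 1 are all choices made for this example.

```python
import math


def psnr(generated, actual, max_value=1.0):
    """Peak signal-to-noise ratio between two equal-length pixel lists.

    Higher is better; identical images give infinity.
    """
    squared_errors = [(g - a) ** 2 for g, a in zip(generated, actual)]
    mse = sum(squared_errors) / len(squared_errors)  # mean squared error
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


# Every pixel is off by 0.1 on a 0-to-1 scale.
print(psnr([0.0, 0.0, 0.0], [0.1, 0.1, 0.1]))
```

Because the scale is logarithmic, a gap of about one point, like the one reported here, corresponds to a modest difference in average pixel error.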

Why it matters: Data collected in the wild rarely are perfect, and bad sensors are one of many reasons why. BARF is part of a new generation of models that don’t assume accurate sensor input, spurring hopes of systems that generalize to real-world conditions.

We’re thinking: In language processing, ELMo kicked off a fad for naming algorithms after Sesame Street characters. Here’s hoping this work doesn’t inspire its own run of names.

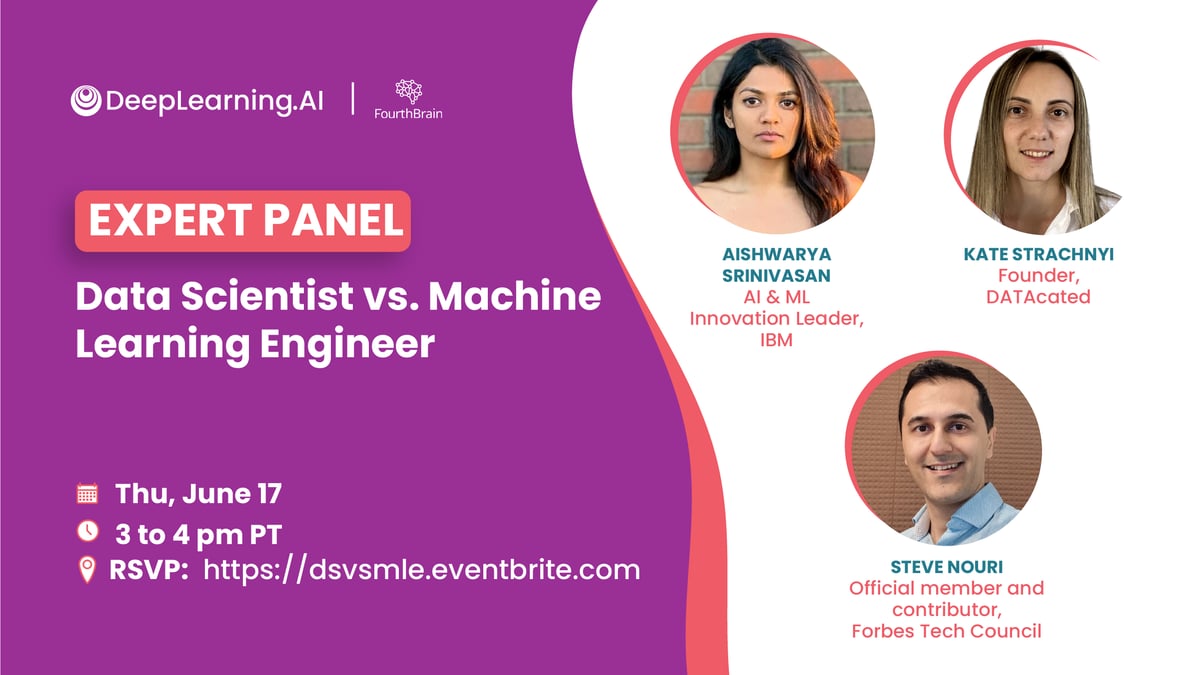

A MESSAGE FROM DEEPLEARNING.AI

Join us on June 17, 2021, to discuss the difference between data scientists and machine learning engineers and how to transition from one role to the other. This Expert Panel is presented by DeepLearning.AI and FourthBrain. Sign up here

Every Problem Looks Like a Nail

Robots are brushing their way into the beauty market.

What’s new: A trio of companies is developing automated nail-painting devices that integrate robotics and computer vision, The New York Times reported.

How it works: Users select a color and place a hand or finger into a slot in a toaster-sized machine. The system scans the fingertips, and an automated paint dispenser — in some cases, a mechanical arm tipped by a brush — coats each nail. These machines update earlier nail-decorating gadgets that, say, applied decals without using AI.

- Clockwork aims to install its machines in offices and retail stores. The company recently opened a storefront in San Francisco.

- Nimble and Coral aim their devices at home users.

- All three companies are still tweaking their products ahead of official launches.

Behind the news: The beauty industry has embraced a variety of AI techniques.

- Makeup wearers can upload a portrait to Estée Lauder and L’Oreal, which use face recognition to determine color combinations that match or highlight a person’s skin tone.

- Neutrogena’s Skin360 scans a user’s face to identify blemishes and provide targeted skin-care advice.

- Photo-filtering apps like Meitu automatically touch up users’ selfies.

Why it matters: Americans spent $8.3 billion on nail care last year. Automated systems could appeal to people who are looking for a fast makeover as well as those who want to continue social distancing without forgoing manicures. But such systems also could displace workers who already contend with low wages.

We’re thinking: Paint your nails or don’t, but everyone who writes code should take good care of their hands.

Irresponsible AI

Few companies that use AI understand the ethical issues it raises.

What’s new: While many companies are ramping up investments in AI, few look for and correct social biases in their models, according to a report by the credit-scoring company Fico. The report surveyed 100 C-level executives in data, analytics, and AI departments at companies that bring in revenue of $100 million or more annually.

What they found: Nearly half of respondents said their company’s investment in AI had grown in the last 12 months. But there was no corresponding rise in efforts to make sure AI was ethical, responsible, and free of bias.

- Over 60 percent of respondents reported that their company’s executives had a poor or partial understanding of AI ethics. Even higher percentages found limited understanding among customers, board members, and shareholders.

- 21 percent had prioritized AI ethics in the past year. Another 30 percent said they would do so this year. Still, 73 percent reported difficulty getting buy-in from colleagues on ethical AI goals.

- There is little consensus on corporate responsibility with regard to AI. Some respondents said they had no responsibility beyond legal and regulatory compliance, while others supported standards of fairness and transparency.

- Around half said they evaluated data and models for bias. 11 percent hired outside evaluators to test models for bias.

- 51 percent of respondents did not monitor models after deployment.

Behind the news: This is Fico’s second annual report, and it shows some improvement over the previous survey: Last year, 67 percent of respondents said they did not monitor systems after deployment.

Why it matters: Never mind technical issues — taking the survey’s results at face value, a substantial percentage of large companies aren’t ready for AI transformation on an ethical level. Businesses that pursue AI without paying attention to ethical pitfalls run the risk of alienating customers and violating laws.

We’re thinking: Companies that pay attention to ethics — in AI and elsewhere — will reap rewards in the form of better products, happier customers, and greater fairness and justice in the world.

A MESSAGE FROM DEEPLEARNING.AI

Check out Practical Data Science, our new program in partnership with Amazon Web Services (AWS)! This specialization will help you develop practical skills to deploy projects and overcome challenges using Amazon SageMaker. Enroll now