Dear friends,

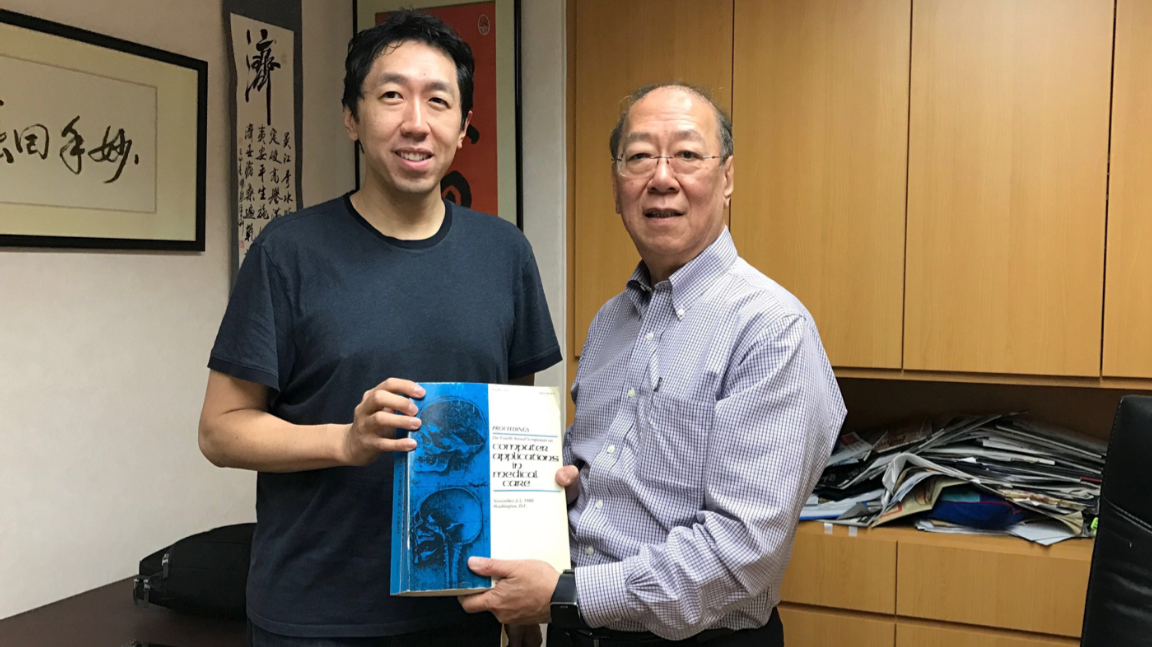

Healthcare is one of many sectors being transformed by AI. I have a personal interest in it, since my father worked on machine learning for diagnosis of liver diseases almost 40 years ago. It’s thanks partly to this work that I learned about AI from an early age. He recently gave me his hard copy of the 1980 conference proceedings containing a paper he wrote on the subject, and it’s one of my most treasured possessions.

Last week, I spoke on AI at a Radiological Society of North America course in San Francisco. Many radiologists wonder how machine learning will affect their jobs, but I saw widespread excitement about ML’s potential as well as a belief that it will improve healthcare in the near term.

Why aren’t more AI-radiology systems already deployed? I think the top three reasons are:

- The Top 10 Diseases problem: ML may be able to diagnose 10 conditions reliably today, but a radiologist can diagnose any of 200. ML still struggles with the small datasets available for diseases 11 through 200.

- Generalizability: An algorithm that works well on training and test data sets drawn from the same distribution — which lets you publish a paper — may not work well when the real world gives you very different data owing to, say, a different x-ray machine, patient type, or imaging protocol. Even if ML outperforms human radiologists in narrow conditions, humans generalize to novel test sets much better than current ML.

- Safety and regulations: After deploying a system, how do we ensure reasonable performance? Are we convinced these systems are really safe to use, especially since the world gives us data different from our test sets? What are appropriate regulations to ensure safety without discouraging innovation?

Because of these issues, I think collaboration between radiologists and AI will drive deployment more quickly than pure AI automation. Once someone gets this working safely and reliably in one hospital, I hope it will spread like wildfire across the world. There is technical and non-technical work to be done, but as a community we will get there, and this will help countless patients.

Keep learning,

Andrew

News

10-Second Crystal Ball

Automated image recognition raises an ethical challenge: Can we take advantage of this useful technology without impinging on personal privacy and autonomy? That question becomes more acute with new research that uses imagery to predict human actions.

What’s new: Researchers combined several video processing models to create a state-of-the-art pipeline for predicting not only where pedestrians will go, but also what they’ll do next, within a time horizon of several seconds. Predicting actions, they found, improves the system’s trajectory predictions.

How it works: The architecture, called Next, predicts paths using estimates of scene variables such as people, objects, and actions. Modules devoted to person behavior and person interaction create a feature tensor unique to each person. The tensor feeds into modules for trajectory generation, activity prediction, and activity location prediction.

- The person behavior module detects people in a scene and embeds changes in their appearance and movement in the feature tensor.

- The person interaction module describes the scene layout and relationships between every person-and-object pair in the feature tensor.

- The trajectory generator uses an LSTM encoder/decoder to predict future locations of each person.

- The activity prediction module computes likely actions for every person, at each location, in each time step. This auxiliary task mitigates errors caused by inaccurately predicted trajectories.

- The activity location prediction module predicts the region of the scene where each person’s final action will take place. A regression to the exact final position within that region forces the trajectory generator and activity prediction module to agree.
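The region-then-regress idea in the last module can be sketched as follows. This is a minimal illustration, assuming the scene is divided into a coarse grid of square cells, with one head scoring each cell and another predicting an (x, y) offset inside it. The grid layout and the function name `decode_activity_location` are hypothetical, not taken from the authors’ code.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def decode_activity_location(
    cell_scores: List[List[float]],
    cell_offsets: List[List[Point]],
    cell_size: float,
) -> Point:
    """Pick the most likely grid cell, then refine it with that cell's regressed offset.

    Hypothetical sketch: scores and offsets would come from two network heads.
    """
    cells = [(r, c) for r in range(len(cell_scores)) for c in range(len(cell_scores[0]))]
    row, col = max(cells, key=lambda rc: cell_scores[rc[0]][rc[1]])
    # Centre of the chosen cell in scene coordinates (x along columns, y along rows).
    centre_x = (col + 0.5) * cell_size
    centre_y = (row + 0.5) * cell_size
    dx, dy = cell_offsets[row][col]
    return (centre_x + dx, centre_y + dy)


scores = [[0.1, 0.7], [0.15, 0.05]]
offsets = [[(0.0, 0.0), (1.0, -0.5)], [(0.0, 0.0), (0.0, 0.0)]]
print(decode_activity_location(scores, offsets, cell_size=10.0))  # → (16.0, 4.5)
```

Classifying a coarse region first and regressing only a small offset keeps the regression target bounded, which is one plausible reason for splitting the prediction this way.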

Junwei Liang and his team at Carnegie Mellon, Google, and Stanford trained Next using a multi-task loss function that combines the error in predicted trajectories, the discrepancy between the predicted activity location and the trajectory, and the activity classification error. The loss is summed over all people in the scene.
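A minimal sketch of a per-scene multi-task loss of this shape follows. It assumes a mean squared error for trajectories, a squared distance between the activity-location estimate and the trajectory’s endpoint for the consistency term, and single-label cross-entropy for activities. The weights `w_loc` and `w_act` and all function names are hypothetical; the published model’s exact terms may differ.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def trajectory_error(pred: Sequence[Point], true: Sequence[Point]) -> float:
    # Mean squared distance between predicted and true future positions.
    return sum((px - tx) ** 2 + (py - ty) ** 2
               for (px, py), (tx, ty) in zip(pred, true)) / len(true)


def location_vs_trajectory_error(location: Point, pred: Sequence[Point]) -> float:
    # Squared distance between the activity-location estimate and the
    # trajectory's final point; zero when the two modules agree.
    end_x, end_y = pred[-1]
    return (location[0] - end_x) ** 2 + (location[1] - end_y) ** 2


def activity_error(probs: Dict[str, float], label: str) -> float:
    # Cross-entropy for a single activity label (a simplifying assumption).
    return -math.log(probs[label])


def multitask_loss(people: List[dict], w_loc: float = 1.0, w_act: float = 1.0) -> float:
    """Sum the three per-person terms over everyone in the scene."""
    total = 0.0
    for p in people:
        total += trajectory_error(p["pred_traj"], p["true_traj"])
        total += w_loc * location_vs_trajectory_error(p["location"], p["pred_traj"])
        total += w_act * activity_error(p["act_probs"], p["act_label"])
    return total


person = {
    "pred_traj": [(0.0, 0.0), (1.0, 1.0)],
    "true_traj": [(0.0, 0.0), (1.0, 2.0)],
    "location": (1.0, 1.0),  # agrees with the predicted endpoint
    "act_probs": {"walk": 0.5, "stand": 0.5},
    "act_label": "walk",
}
print(multitask_loss([person, person]))
```

Because the total is a plain sum over people, crowded scenes contribute more gradient than sparse ones; normalizing by the number of people would be a design alternative.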

Why it matters: Beyond its superiority at predicting where people are heading, Next is the first published model that predicts both people’s trajectories and their activities. Prior models predicted actions over less than half the time horizon and were less accurate, while Next seems to approach a human’s predictive capability.

We’re thinking: The ability to anticipate human actions could lead to proactive robot assistants and life-saving safety systems. But it also has obvious — and potentially troubling — applications in surveillance and law enforcement. Pushing the boundaries of action prediction is bound to raise as many questions as it answers.

Robots to the Rescue

Humanoid robots are notoriously ungainly, but agile machines of roughly human size and shape could prove critical in disaster areas, where danger is high but architecture, controls, and tools are designed for Homo sapiens. A new control system could help them move more nimbly through difficult environments.

What’s new: Researchers at MIT are working on a two-part system. A humanoid robot called Hermes is lightweight but strong, with a shatter-resistant, carbon-fiber skeleton and high-torque actuators. The other part is an interactive control system that not only lets the operator move the robot, but lets the robot move the operator, according to IEEE Spectrum.

How it works: Hermes has some autonomy, but the telerobotic system is responsible for its gross movement. The key is a feedback loop between operator and robot that’s refreshed 1,000 times per second:

- The operator stands on a platform that measures surface forces and transmits them to Hermes.

- The operator’s limbs and waist likewise are linked to the robot.

- Motors at some linkages apply haptic forces and torques to the operator’s body.

- So when Hermes, say, loses its balance, the control system shoves the operator, who is forced to compensate and thus right the robot.

- Goggles track the operator’s eyes. When the operator fixes his or her gaze — a sign of mental concentration — the robot’s autonomy kicks in to lighten the cognitive load.
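The balance feedback described above can be illustrated with a toy simulation. This is a sketch under loud assumptions: the robot’s tilt is modeled as a single number, a constant disturbance tips it, the haptic link shoves the operator in proportion to the tilt, and the operator’s reflexive push back is sent to the robot as a correction. The gains `k_haptic` and `k_reflex` and the dynamics are hypothetical; the source does not describe MIT’s actual controller. Only the 1,000 updates per second comes from the article.

```python
def simulate_balance(duration_s: float, disturbance: float,
                     k_haptic: float = 50.0, k_reflex: float = 0.1,
                     dt: float = 0.001) -> float:
    """Return the robot's toy tilt (rad) after duration_s seconds.

    Hypothetical model: a constant disturbance (rad/s) tips the robot; each tick
    the haptic link shoves the operator, who pushes back and corrects the robot.
    """
    tilt = 0.0
    for _ in range(round(duration_s / dt)):          # dt = 0.001 s -> 1,000 updates per second
        force_on_operator = k_haptic * tilt           # shove in the direction of the fall
        correction = -k_reflex * force_on_operator    # operator leans against the shove
        tilt += dt * (disturbance + correction)       # robot tilt integrates both
    return tilt


with_feedback = simulate_balance(2.0, disturbance=0.5)
without_feedback = simulate_balance(2.0, disturbance=0.5, k_reflex=0.0)
print(f"tilt with operator feedback: {with_feedback:.3f} rad")
print(f"tilt without feedback:       {without_feedback:.3f} rad")
```

With feedback, the tilt settles near `disturbance / (k_haptic * k_reflex)`, about 0.1 rad here; without it, the tilt grows steadily to about 1 rad after two seconds.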

Behind the news: In the 2011 nuclear meltdown at Fukushima Daiichi, high radiation levels prevented workers from taking action. That event dramatized the urgent need for robots that can respond to disasters. Not all disaster-response bots need a humanoid form, but it helps in tasks like swinging an axe, operating a fire extinguisher, and throwing switches, all of which Hermes is designed to handle.

What’s next: Hermes suffers somewhat from latency in the feedback system. The researchers are looking to 5G cellular tech to make the system more responsive.

Fake News Detector

OpenAI hasn't released the full version of its GPT-2 language model, fearing the system would create a dark tide of fake news masquerading as real reporting. Now researchers offer a way to detect such computer-generated fancies — but their approach essentially requires distributing language models that can generate made-up news.

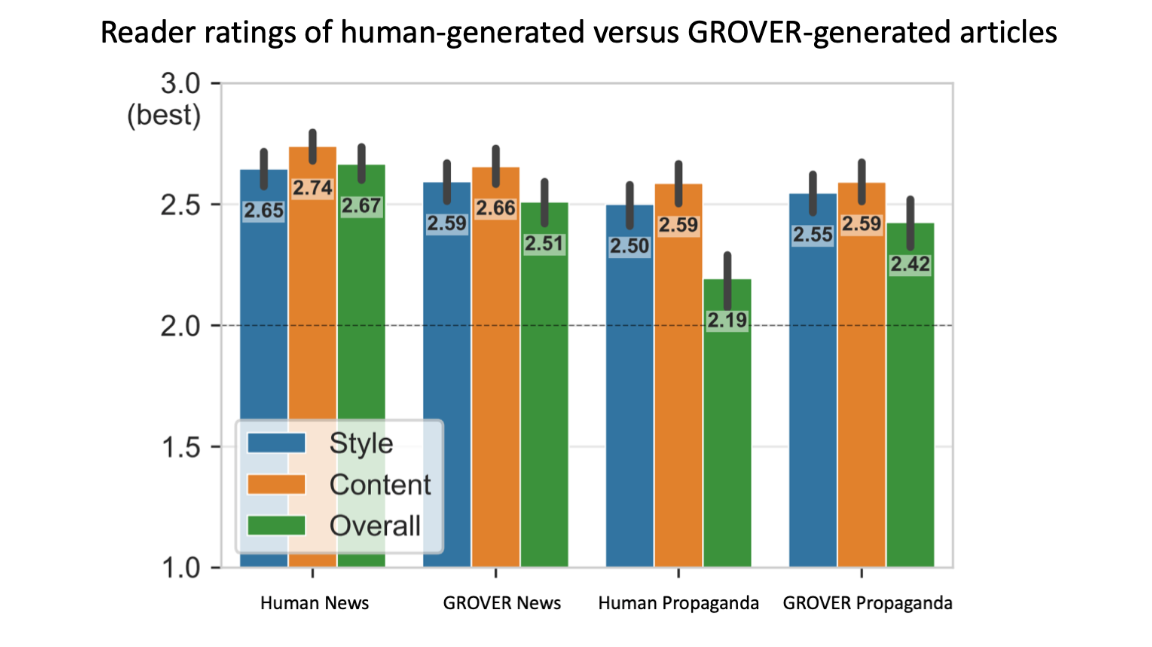

What’s new: Researchers propose a framework for building verifiers, or classifiers that discriminate between human- and machine-authored articles, based on models that generate text. They introduce the fake-news generator GROVER (continuing the recent vogue for naming language models after Muppets) along with its complementary verifier. The new model is so good that human judges rated its Infowars-style propaganda more credible than examples produced by human writers.

How it works: Rowan Zellers and his University of Washington collaborators constructed verifiers by copying text-generator architectures up to their output layer and then substituting a classification layer. They initialized the verifiers using transfer learning before training on generated text. Key insights:

- A verifier can do well at spotting the output of generators of different architectures if it has more parameters and training examples.

- The more examples a verifier has from a given generator, the more accurately it can classify articles from that generator.

- The verifier based on GROVER achieved more than 90 percent accuracy identifying GROVER articles. Verifiers that weren’t based on GROVER achieved 78 percent accuracy identifying GROVER's output.
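The head-swap construction can be sketched in miniature. This toy assumes a generator whose layers below the output layer reduce to averaged word embeddings (`ToyBackbone`, hypothetical), copies that backbone, and attaches a logistic-regression head that outputs the probability a text is machine-written. For brevity only the new head is trained here, whereas the researchers trained verifiers on generated text after transfer learning; the embeddings and example texts are made up.

```python
import copy
import math
from typing import Dict, List, Sequence


class ToyBackbone:
    """Hypothetical stand-in for a generator's layers below its output layer."""

    def __init__(self, embeddings: Dict[str, List[float]]):
        self.embeddings = embeddings
        self.dim = len(next(iter(embeddings.values())))

    def encode(self, text: str) -> List[float]:
        # Mean of the known words' embeddings; zeros if no word is known.
        vecs = [self.embeddings[w] for w in text.lower().split() if w in self.embeddings]
        if not vecs:
            return [0.0] * self.dim
        return [sum(col) / len(vecs) for col in zip(*vecs)]


class Verifier:
    """Copies the generator's backbone and swaps its output layer for a binary classifier."""

    def __init__(self, generator_backbone: ToyBackbone):
        self.backbone = copy.deepcopy(generator_backbone)  # transfer the pretrained weights
        self.w = [0.0] * self.backbone.dim
        self.b = 0.0

    def prob_machine(self, text: str) -> float:
        x = self.backbone.encode(text)
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        return 1.0 / (1.0 + math.exp(-z))

    def fit(self, texts: Sequence[str], labels: Sequence[int],
            lr: float = 0.5, epochs: int = 200) -> None:
        # Plain SGD on log loss for the new head; the copied backbone stays frozen here.
        for _ in range(epochs):
            for text, y in zip(texts, labels):
                x = self.backbone.encode(text)
                g = self.prob_machine(text) - y
                self.w = [wi - lr * g * xi for wi, xi in zip(self.w, x)]
                self.b -= lr * g


embeddings = {  # hypothetical 2-D "pretrained" embeddings
    "officials": [1.0, 0.0], "said": [0.9, 0.1], "report": [1.0, 0.2],
    "shocking": [0.0, 1.0], "secret": [0.1, 0.9], "exposed": [0.2, 1.0],
}
backbone = ToyBackbone(embeddings)
verifier = Verifier(backbone)
verifier.fit(["officials said", "report said", "shocking secret", "secret exposed"],
             labels=[0, 0, 1, 1])  # 1 = machine-written
print(round(verifier.prob_machine("shocking report exposed"), 3))
```

Reusing the generator’s representation is what lets a verifier built from GROVER exploit whatever GROVER itself has learned about its own output, which is consistent with the accuracy gap reported above.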

Why it matters: Systems like GROVER threaten to flood the world with highly believable hoaxes. Automated countermeasures are the only viable defense. Zellers argues in favor of releasing newly invented language models, since they're tantamount to verifiers anyway.

Yes, but: The fact that larger models can fool verifiers suggests that we’re in for a fake-news arms race in which dedicated mischief makers continually up the ante.

We’re thinking: While Zellers' method can recognize machine authorship, the real goal is an algorithm that distinguishes fact from fiction. Until someone invents that, hoaxers — both digital and flesh-and-blood — are likely to remain one step ahead.

A MESSAGE FROM DEEPLEARNING.AI

Still debating whether to get into machine learning? Check out the techniques you'll learn and projects you'll build in the Deep Learning Specialization.

Probing Junk DNA

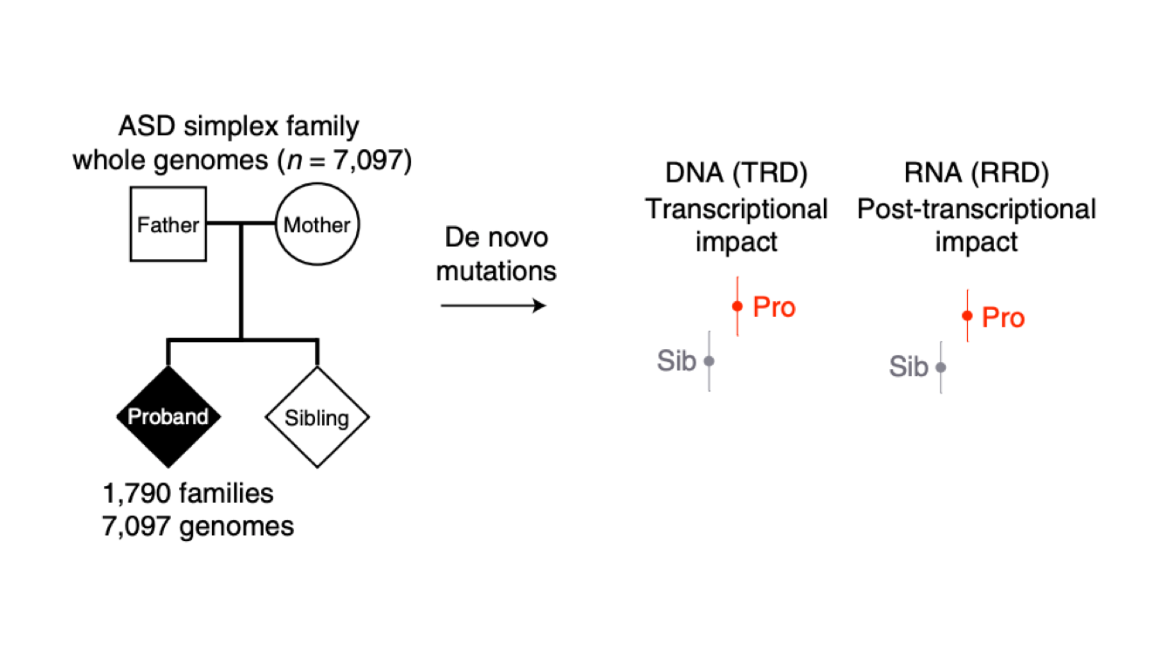

Deep learning helped geneticists find mutations associated with autism in vast regions of the human genome commonly known as junk DNA.

What’s new: Researchers examined the DNA of people with autism. They used a neural network to find mutations in noncoding regions; that is, sequences that don’t hold instructions for producing proteins but help regulate gene expression through their interactions with proteins. It’s the first time noncoding DNA has been implicated in the condition.

How they did it: Researcher Jian Zhou and his colleagues at Princeton and elsewhere analyzed the genomes of 1,790 families.

- In each family, autism was limited to one child, suggesting that the condition arose from new mutations rather than being inherited.

- A neural network scanned the genome to predict which DNA sequences affect protein interactions that are known to regulate gene expression. It also predicted whether a mutation in those sequences could interfere with such interactions.

- The team compared the impact of mutations in autistic individuals with that of the mutations in their unaffected siblings, finding that the autistic individuals had a greater burden of high-impact mutations.

- The team found that these high-impact mutations influence brain function.

- They tested the predicted effect of these mutations in cells and found the gene expression was altered as predicted.
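The scoring-and-comparison steps can be sketched with a toy model standing in for the neural network. Here a mutation’s predicted impact is the change in the model’s output when one base is swapped, and the burden comparison is the mean count of high-impact mutations in affected children minus that in their siblings. The motif-counting `toy_regulatory_model`, the threshold, and the impact scores are all hypothetical; the study used a deep network trained on experimental data.

```python
from typing import Callable, List, Sequence, Tuple


def toy_regulatory_model(seq: str) -> float:
    # Hypothetical stand-in for the neural network: counts copies of a
    # made-up binding motif. It only mimics the model's interface.
    return float(seq.count("GATA"))


def mutation_impact(model: Callable[[str], float], seq: str, pos: int, alt: str) -> float:
    """Predicted effect of a point mutation: how much the model's output
    changes when the base at `pos` is replaced by `alt`."""
    mutated = seq[:pos] + alt + seq[pos + 1:]
    return abs(model(mutated) - model(seq))


def high_impact_count(impacts: Sequence[float], threshold: float) -> int:
    return sum(1 for x in impacts if x >= threshold)


def burden_difference(families: List[Tuple[Sequence[float], Sequence[float]]],
                      threshold: float) -> float:
    """Mean high-impact count in affected children minus that in unaffected siblings."""
    affected = [high_impact_count(a, threshold) for a, _ in families]
    siblings = [high_impact_count(s, threshold) for _, s in families]
    return sum(affected) / len(families) - sum(siblings) / len(families)


ref = "CCGATACC"
print(mutation_impact(toy_regulatory_model, ref, 3, "C"))  # breaks the motif → 1.0
print(mutation_impact(toy_regulatory_model, ref, 0, "A"))  # leaves it intact → 0.0
families = [([1.0, 0.1], [0.2]), ([0.9], [0.0, 0.1])]      # made-up impact scores
print(burden_difference(families, threshold=0.5))          # → 1.0
```

Comparing each affected child with an unaffected sibling controls for family background, so a positive difference points to the new mutations rather than to shared inherited variation.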

The work was published in Nature Genetics.

Why it matters: Although the results didn’t reveal the causes of autism, they did point to mutations in noncoding DNA associated with the condition. That information could lead to a better understanding of causes, and it could help scientists differentiate various types of autism. Moreover, the same approach could be applied to any disease, illuminating the role of noncoding DNA in heart diseases and neurological conditions where, like autism, direct genetic causes haven’t been identified.

We’re thinking: The human genome is immense and complex, and traditional lab-based approaches are too slow and cumbersome to decipher the activities of its 3 billion DNA base pairs. AI can narrow the search space, and Zhou’s work shows a hint of its potential.

Drones Against Climate Change

In self-driving cars, lidar, which works like radar but with laser light, senses surrounding objects to help determine the vehicle’s path. On drones, it’s being used to see through forests and evaluate the impact of climate change on the ground.

What’s new: Drones equipped with lidar are flying over forests in Scotland. Unlike satellite photos, lidar can penetrate the canopy, which remains green throughout the year, to see the forest floor. The resulting imagery enables scientists to track encroachment of nonnative plants that are killing trees, as well as soil degradation, drought, and other conditions, according to BBC News.

How it works: Ecometrica, which calls itself a downstream space information company, operates the drones as part of a UK-funded program to evaluate threatened forests around the world.

- Lidar can’t see through leaves, but laser pulses can find their way through gaps, bounce off the ground, and return, providing a view of ground-level conditions.

- Ecometrica melds the lidar signal with satellite imagery, GPS coordinates, and other information to create a three-dimensional map of the forest floor.

- The company uses machine learning algorithms that detect changes like deforestation. It has found that traditional ML techniques such as random forests tend to be fast and cost-effective.
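Ecometrica’s processing isn’t described in detail, but the idea of recovering the forest floor from pulses that slip through gaps can be sketched with a common simplification: bin lidar returns into a horizontal grid and keep the lowest return in each cell as the ground estimate. The function name, cell size, and sample points below are hypothetical.

```python
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float]  # x, y, z in metres


def ground_elevation_grid(points: Iterable[Point], cell_size: float) -> Dict[Tuple[int, int], float]:
    """Keep the lowest return in each grid cell as a rough ground estimate."""
    ground: Dict[Tuple[int, int], float] = {}
    for x, y, z in points:
        cell = (int(x // cell_size), int(y // cell_size))
        if cell not in ground or z < ground[cell]:
            ground[cell] = z
    return ground


returns = [
    (0.5, 0.5, 21.0),  # canopy top
    (0.6, 0.4, 1.2),   # pulse that slipped through a gap and hit the ground
    (1.5, 0.5, 18.0),  # canopy only: no gap in this cell
]
print(ground_elevation_grid(returns, cell_size=1.0))  # → {(0, 0): 1.2, (1, 0): 18.0}
```

Cell (1, 0) shows the limitation noted above: where no pulse gets through the leaves, the lowest return is still canopy. A real pipeline would need to detect such cells and fill them, for example by interpolating from neighbouring ground cells.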

Why it matters: Scotland’s forests, which once covered much of the country, have dwindled to 4 percent of total land area. Climate change is spurring rhododendron, a flowering shrub introduced in the 1700s, to move into the remnant forests. There, it spreads fungal diseases and toxic leaf litter that damage the trees. The drone imagery will help prioritize trouble spots for eradication efforts.

Takeaway: Beyond their commercial uses, emerging technologies including drones, lidar, and AI hold promise for solving dire environmental problems. Combining them effectively will be an important part of the solution as climate change sets in.