Dear friends,

I think AI agent workflows will drive massive AI progress this year — perhaps even more than the next generation of foundation models. This is an important trend, and I urge everyone who works in AI to pay attention to it.

Today, we mostly use LLMs in zero-shot mode, prompting a model to generate final output token by token without revising its work. This is akin to asking someone to compose an essay from start to finish, typing straight through with no backspacing allowed, and expecting a high-quality result. Despite the difficulty, LLMs do amazingly well at this task!

With an agent workflow, however, we can ask the LLM to iterate over a document many times. For example, it might take a sequence of steps such as:

- Plan an outline.

- Decide what, if any, web searches are needed to gather more information.

- Write a first draft.

- Read over the first draft to spot unjustified arguments or extraneous information.

- Revise the draft taking into account any weaknesses spotted.

- And so on.

This iterative process is critical for most human writers to write good text. With AI, such an iterative workflow yields much better results than writing in a single pass.

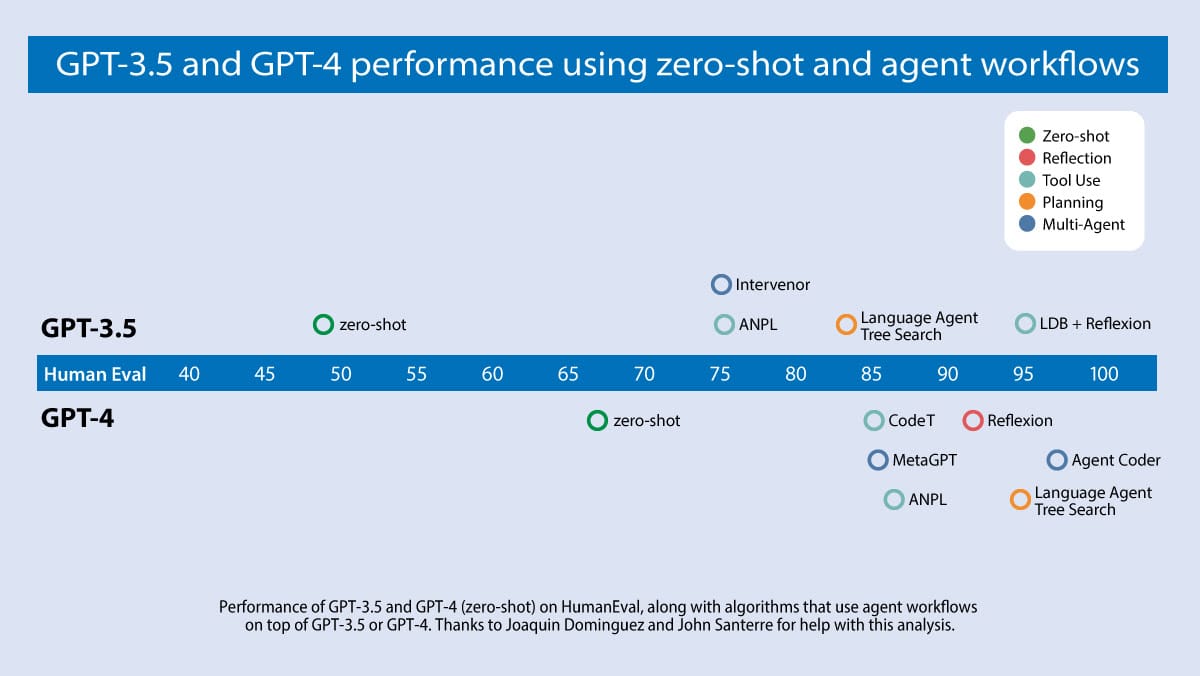

Devin’s splashy demo recently received a lot of social media buzz. My team has been closely following the evolution of AI that writes code. We analyzed results from a number of research teams, focusing on an algorithm’s ability to do well on the widely used HumanEval coding benchmark. You can see our findings in the diagram below.

GPT-3.5 (zero shot) was 48.1% correct. GPT-4 (zero shot) does better at 67.0%. However, the improvement from GPT-3.5 to GPT-4 is dwarfed by incorporating an iterative agent workflow. Indeed, wrapped in an agent loop, GPT-3.5 achieves up to 95.1%.

Open source agent tools and the academic literature on agents are proliferating, making this an exciting time but also a confusing one. To help put this work into perspective, I’d like to share a framework for categorizing design patterns for building agents. My team AI Fund is successfully using these patterns in many applications, and I hope you find them useful.

- Reflection: The LLM examines its own work to come up with ways to improve it.

- Tool use: The LLM is given tools such as web search, code execution, or any other function to help it gather information, take action, or process data.

- Planning: The LLM comes up with, and executes, a multistep plan to achieve a goal (for example, writing an outline for an essay, then doing online research, then writing a draft, and so on).

- Multi-agent collaboration: More than one AI agent work together, splitting up tasks and discussing and debating ideas, to come up with better solutions than a single agent would.

Next week, I’ll elaborate on these design patterns and offer suggested readings for each.

Keep learning!

Andrew

P.S. Build an optimized large language model (LLM) inference system from the ground up in our new short course “Efficiently Serving LLMs,” taught by Predibase CTO Travis Addair.

- Learn techniques like KV caching, continuous batching, and quantization to speed things up and optimize memory usage.

- Benchmark LLM optimizations to explore the trade-offs between latency and serving many users at once.

- Use low-rank adaptation (LoRA) to serve hundreds of custom fine-tuned models on a single device efficiently.

News

Conversational Robots

Robots equipped with large language models are asking their human overseers for help.

What's new: Andrew Sohn and colleagues at Covariant launched RFM-1, a model that enables robots to respond to instructions, answer questions about what they see, and request further instructions. The model is available to Covariant customers.

How it works: RFM-1 is a transformer that comprises 8 billion parameters. The team started with a pretrained large language model and further trained it, given text, images, videos, robot actions, and/or robot sensor readings, to predict the next token of any of those types. Images and videos are limited to 512x512 pixels and 5 frames per second.

- Proprietary models embed non-language inputs.

- RFM-1 responds conversationally to text and/or image inputs. Given an image of a bin filled with fruit and the question “Are there any fruits in the bin?” the model can respond yes or no. If yes, it can answer follow-up questions about the fruit’s type, color, and so on.

- Given a robotic instruction, the model generates tokens that represent a combination of high-level actions and low-level commands. For example, asked to “pick all the red apples,” it generates the tokens required to pluck the apples from a bin.

- If the robot is unable to fulfill an instruction, the model can ask for further direction. For instance, in one demonstration, it asks, “I cannot get a good grasp. Do you have any suggestions?” When the operator responds, “move 2 cm from the top of the object and knock it over gently,” the robot knocks over the item and automatically finds a new way to pick it up.

- RFM-1 can predict future video frames. For example, if the model is instructed to remove a particular item from a bin, prior to removing the item, it can generate an image of the bin with the item missing.

Behind the news: Covariant’s announcement follows a wave of robotics research in recent years that enables robots to take action in response to text instructions.

Why it matters: Giving robots the ability to respond to natural language input not only makes them easier to control, it also enables them to interact with humans in new ways that are surprising and useful. In addition, operators can change how the robots work by issuing text instructions rather than programming new actions from scratch.

We're thinking: Many people fear that robots will make humans obsolete. Without downplaying such worries, Covariant’s conversational robot illustrates one way in which robots can work alongside humans without replacing them.

Some Models Pose Security Risk

Security researchers sounded the alarm about holes in Hugging Face’s platform.

What’s new: Models in the Hugging Face open source AI repository can attack users’ devices, according to cybersecurity experts at JFrog, a software firm. Meanwhile, a different team discovered a vulnerability in one of Hugging Face’s own security features.

Compromised uploads: JFrog developed scanned models on Hugging Face for known exploits. They flagged around 100 worrisome models. Flagged models may have been uploaded by other security researchers but pose hazards nonetheless, JFrog said.

- Around 50 percent of the flagged models were capable of hijacking objects on users’ devices. Around 20 percent opened a reverse shell on users’ devices, which theoretically allows an attacker to access them remotely. 95 percent of the flagged models were built using PyTorch, and the remainder were based on TensorFlow Keras.

- For instance, a model called goober2 (since deleted) took advantage of a vulnerability in Pickle, a Python module that serializes objects by a list or array into a byte stream and back again. The model, which had been uploaded to Hugging Face by a user named baller23, used Pickle to insert code into PyTorch that attempted to start a reverse shell connection to a remote IP address.

- The apparent origin of goober2 and many other flagged models — the South Korean research network KREONET — suggests that it may be a product of security researchers.

Malicious mimicry: Separately, HiddenLayer, a security startup, demonstrated a way to compromise Safetensors, an alternative to Pickle that stores data arrays more securely. The researchers built a malicious PyTorch model that enabled them to mimic the Safetensors conversion bot. In this way, an attacker could send pull requests to any model that gives security clearance to the Safetensors bot, making it possible to execute arbitrary code; view all repositories, model weights, and other data; and replace users’ models.

Behind the News: Hugging Face implements a variety of security measures. In most cases, it flags potential issues but does not remove the model from the site; users download at their own risk. Typically, security issues on the site arise when users inadvertently make their own information available. For instance, in December 2023, Lasso Security discovered available API tokens that afforded access to over 600 accounts belonging to organizations like Google, Meta, and Microsoft.

Why it matters: As the AI community grows, AI developers and users become more attractive targets for malicious attacks. Security teams have discovered vulnerabilities in popular platforms, obscure models, and essential modules like Safetensors.

We’re thinking: Security is a top priority whenever private data is concerned, but the time is fast approaching when AI platforms, developers, and users must harden their models, as well as their data, against attacks.

NEW FROM DEEPLEARNING.AI

In our new short course “Efficiently Serving Large Language Models,” you’ll pop the hood on large language model inference servers. Learn how to increase the performance and efficiency of your LLM-powered applications! Enroll today

Deepfakes Become Politics as Usual

Synthetic depictions of politicians are taking center stage as the world’s biggest democratic election kicks off.

What’s new: India’s political parties have embraced AI-generated campaign messages ahead of the country’s parliamentary elections, which will take place in April and May, Al Jazeera reported.

How it works: Prime Minister Narendra Modi, head of the ruling Bharatiya Janata Party (BJP), helped pioneer the use of AI in campaign videos in 2020. They’ve become common in recent state elections.

- The party that governs the state of Tamil Nadu released videos that feature an AI-generated likeness of a leader who died in 2018. The Indian media firm Muonium built the model by training a voice model on the politician’s speeches from the 1990s.

- In a December state election, the Congress party circulated a video in which its chief opponent, the leader of a rival BRS party, tells voters to choose Congress. BRS said the video was deepfaked.

- A startup called The Indian Deepfaker has cloned candidates’ voices and produced younger-looking images of them for several parties. In November, the firm cloned the voice of a state leader of the Congress party to send personalized audio messages to potential voters. It rejected more than 50 requests to alter video and audio to target political opponents, including calls to create pornographic material.

Meanwhile, in Pakistan: Neighboring Pakistan was deluged with deepfakes in the run-up to its early-February election. Former prime minister Imran Khan, who has been imprisoned on controversial charges since last year, communicated with followers via a clearly marked AI-generated likeness. However, he found himself victimized by deepfakery when an AI-generated likeness of him, source unknown, urged his followers to boycott the polls.

Behind the news: Deepfakes have proliferated in India in the absence of comprehensive laws or regulations that govern them. Instead of regulating them directly, government officials have pressured social media operators like Google and Meta to moderate them.

What they’re saying: “Manipulating voters by AI is not being considered a sin by any party,” an anonymous Indian political consultant told Al Jazeera. “It is just a part of the campaign strategy.”

Why it matters: Political deepfakes are quickly becoming a global phenomenon. Parties from Argentina, the United States, and New Zealand have distributed AI-generated imagery or video. But the sheer scale of India’s national election — in which more than 900 million people are eligible to vote — has made it an active laboratory for synthetic political messages.

We’re thinking: Synthetic media has legitimate political uses, especially in a highly multilingual country like India, where it can enable politicians to communicate with the public in a variety of languages and dialects. But unscrupulous parties can also use it to sow misinformation and undermine trust in politicians and media. Regulations are needed to place guardrails around deepfakes in politics. Requiring identification of generated campaign messages would be a good start.

Cutting the Cost of Pretrained Models

Research aims to help users select large language models that minimize expenses while maintaining quality.

What's new: Lingjiao Chen, Matei Zaharia, and James Zou at Stanford proposed FrugalGPT, a cost-saving method that calls pretrained large language models (LLMs) sequentially, from least to most expensive, and stops when one provides a satisfactory answer.

Key insight: In many applications, a less-expensive LLM can produce satisfactory output most of the time. However, a more-expensive LLM may produce satisfactory output more consistently. Thus, using multiple models selectively can save substantially on processing costs. If we arrange LLMs from least to most expensive, we can start with the least expensive one. A separate model can evaluate its output, and if it’s unsatisfactory, another algorithm can automatically call a more expensive LLM, and so on.

How it works: The authors used a suite of 12 commercial LLMs, a model that evaluated their output, and an algorithm that selected and ordered them. At the time, the LLMs’ costs (which are subject to change) spanned two orders of magnitude: GPT-4 cost $30/$60 per 1 million tokens of input/output, while GPT-J hosted by Textsynth cost $0.20/$5 per 10 million tokens of input/output.

- To classify an LLM’s output as satisfactory or unsatisfactory, the authors fine-tuned separate DistilBERTs on a diverse selection of datasets: one that paired news headlines and subsequent changes in the price of gold, another that labeled excerpts from court documents according to whether they rejected a legal precedent, and a third dataset of questions and answers. Given an input/output pair (such as a question and answer), they fine-tuned DistilBERT to produce a high score if the output was correct and low score if it wasn’t. The output was deemed satisfactory if its score exceeded a threshold.

- A custom algorithm (which the authors don’t describe in detail) learned to choose three LLMs and put them in order. For each dataset, it maximized the percentage of times a sequence of three LLMs generated the correct output within a set budget.

- The first LLM received an input. If its output was unsatisfactory, the second LLM received the input. If the second LLM’s output was unsatisfactory, the third LLM received the input.

Results: For each of the three datasets, the authors found the accuracy of each LLM. Then they found the cost for FrugalGPT to match that accuracy. Relative to the most accurate LLM, FrugalGPT saved 98.3 percent, 73.3 percent, and 59.2 percent, respectively.

Why it matters: Many teams choose a single model to balance cost and quality (and perhaps speed). This approach offers a way to save money without sacrificing performance.

We're thinking: Not all queries require a GPT-4-class model. Now we can pick the right model for the right prompt.

Data Points

Find more AI news of the week in Data Points, including:

◆ AI takes over hockey and racing

◆ An advanced brain surgery assistant

◆ Google’s measures for the Indian General Election

◆ OpenAI’s licensing agreement with Le Monde and Prisa